|

Cycle Sort

Cycle sort is an in-place, unstable sorting algorithm, a comparison sort that is theoretically optimal in terms of the total number of writes to the original array, unlike any other in-place sorting algorithm. It is based on the idea that the permutation to be sorted can be factored into cycles, which can individually be rotated to give a sorted result. Unlike nearly every other sort, items are ''never'' written elsewhere in the array simply to push them out of the way of the action. Each value is either written zero times, if it's already in its correct position, or written one time to its correct position. This matches the minimal number of overwrites required for a completed in-place sort. Minimizing the number of writes is useful when making writes to some huge data set is very expensive, such as with EEPROMs like Flash memory where each write reduces the lifespan of the memory. Algorithm To illustrate the idea of cycle sort, consider a list with distinct elements. Give ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sorting Algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a List (computing), list into an Total order, order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the Algorithmic efficiency, efficiency of other algorithms (such as search algorithm, search and merge algorithm, merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for Canonicalization, canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions: # The output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order). # The output is a permutation (a reordering, yet retaining all of the original elements) of the input. For optimum efficiency, the input data should be stored in a data structure which allows random access ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Python (programming Language)

Python is a high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is dynamically-typed and garbage-collected. It supports multiple programming paradigms, including structured (particularly procedural), object-oriented and functional programming. It is often described as a "batteries included" language due to its comprehensive standard library. Guido van Rossum began working on Python in the late 1980s as a successor to the ABC programming language and first released it in 1991 as Python 0.9.0. Python 2.0 was released in 2000 and introduced new features such as list comprehensions, cycle-detecting garbage collection, reference counting, and Unicode support. Python 3.0, released in 2008, was a major revision that is not completely backward-compatible with earlier versions. Python 2 was discontinued with version 2.7.18 in 2020. Python consistently ranks as ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sorting Algorithms

In computer science, a sorting algorithm is an algorithm that puts elements of a list into an order. The most frequently used orders are numerical order and lexicographical order, and either ascending or descending. Efficient sorting is important for optimizing the efficiency of other algorithms (such as search and merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for canonicalizing data and for producing human-readable output. Formally, the output of any sorting algorithm must satisfy two conditions: # The output is in monotonic order (each element is no smaller/larger than the previous element, according to the required order). # The output is a permutation (a reordering, yet retaining all of the original elements) of the input. For optimum efficiency, the input data should be stored in a data structure which allows random access rather than one that allows only sequential access. History and concepts From the beginning of computi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linear Search

In computer science, a linear search or sequential search is a method for finding an element within a list. It sequentially checks each element of the list until a match is found or the whole list has been searched. A linear search runs in at worst linear time and makes at most comparisons, where is the length of the list. If each element is equally likely to be searched, then linear search has an average case of comparisons, but the average case can be affected if the search probabilities for each element vary. Linear search is rarely practical because other search algorithms and schemes, such as the binary search algorithm and hash tables, allow significantly faster searching for all but short lists. Algorithm A linear search sequentially checks each element of the list until it finds an element that matches the target value. If the algorithm reaches the end of the list, the search terminates unsuccessfully. Basic algorithm Given a list of elements with values or record ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

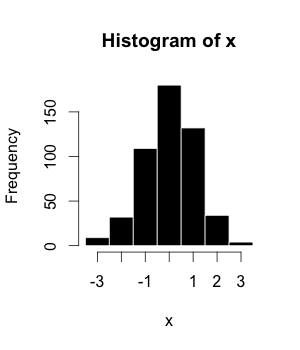

Histogram

A histogram is an approximate representation of the distribution of numerical data. The term was first introduced by Karl Pearson. To construct a histogram, the first step is to " bin" (or "bucket") the range of values—that is, divide the entire range of values into a series of intervals—and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent and are often (but not required to be) of equal size. If the bins are of equal size, a bar is drawn over the bin with height proportional to the frequency—the number of cases in each bin. A histogram may also be normalized to display "relative" frequencies showing the proportion of cases that fall into each of several categories, with the sum of the heights equaling 1. However, bins need not be of equal width; in that case, the erected rectangle is defined to have its ''area'' proportional to the frequency ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Perfect Hash Function

In computer science, a perfect hash function for a set is a hash function that maps distinct elements in to a set of integers, with no collisions. In mathematical terms, it is an injective function. Perfect hash functions may be used to implement a lookup table with constant worst-case access time. A perfect hash function can, as any hash function, be used to implement hash tables, with the advantage that no collision resolution has to be implemented. In addition, if the keys are not the data and if it is known that queried keys will be valid, then the keys do not need to be stored in the lookup table, saving space. Disadvantages of perfect hash functions are that needs to be known for the construction of the perfect hash function. Non-dynamic perfect hash functions need to be re-constructed if changes. For frequently changing dynamic perfect hash functions may be used at the cost of additional space. The space requirement to store the perfect hash function is in . The ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Time Complexity

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally expresse ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Flash Memory

Flash memory is an electronic non-volatile computer memory storage medium that can be electrically erased and reprogrammed. The two main types of flash memory, NOR flash and NAND flash, are named for the NOR and NAND logic gates. Both use the same cell design, consisting of floating gate MOSFETs. They differ at the circuit level depending on whether the state of the bit line or word lines is pulled high or low: in NAND flash, the relationship between the bit line and the word lines resembles a NAND gate; in NOR flash, it resembles a NOR gate. Flash memory, a type of floating-gate memory, was invented at Toshiba in 1980 and is based on EEPROM technology. Toshiba began marketing flash memory in 1987. EPROMs had to be erased completely before they could be rewritten. NAND flash memory, however, may be erased, written, and read in blocks (or pages), which generally are much smaller than the entire device. NOR flash memory allows a single machine word to be written to an era ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

EEPROM

EEPROM (also called E2PROM) stands for electrically erasable programmable read-only memory and is a type of non-volatile memory used in computers, usually integrated in microcontrollers such as smart cards and remote keyless systems, or as a separate chip device to store relatively small amounts of data by allowing individual bytes to be erased and reprogrammed. EEPROMs are organized as arrays of floating-gate transistors. EEPROMs can be programmed and erased in-circuit, by applying special programming signals. Originally, EEPROMs were limited to single-byte operations, which made them slower, but modern EEPROMs allow multi-byte page operations. An EEPROM has a limited life for erasing and reprogramming, now reaching a million operations in modern EEPROMs. In an EEPROM that is frequently reprogrammed, the life of the EEPROM is an important design consideration. Flash memory is a type of EEPROM designed for high speed and high density, at the expense of large erase blocks (ty ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |