|

Continual Learning

In computer science, incremental learning is a method of machine learning in which input data is continuously used to extend the existing model's knowledge i.e. to further train the model. It represents a dynamic technique of supervised learning and unsupervised learning that can be applied when training data becomes available gradually over time or its size is out of system memory limits. Algorithms that can facilitate incremental learning are known as incremental machine learning algorithms. Many traditional machine learning algorithms inherently support incremental learning. Other algorithms can be adapted to facilitate incremental learning. Examples of incremental algorithms include decision trees (IDE4, ID5R angaenari, decision rules, artificial neural networks ( RBF networks, Learn++, Fuzzy ARTMAP, TopoART,Marko Tscherepanow, Marco Kortkamp, and Marc KammerA Hierarchical ART Network for the Stable Incremental Learning of Topological Structures and Associations from Noisy D ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

In machine learning, supervised learning (SL) is a paradigm where a Statistical model, model is trained using input objects (e.g. a vector of predictor variables) and desired output values (also known as a ''supervisory signal''), which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to Generalization (learning), generalize from the training data to unseen situations in a reasonable way (see inductive bias). This statistical quality of an algorithm is measured via a ''generalization error''. Steps to follow To solve a given problem of supervised learning, the following steps must be performed: # Determine the type of training samples. Before doing anything else, the user should decide what kind of data is to be used as a Training, validation, and test data sets, trainin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervisions include weak- or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as massive text corpus obtained by web crawling, with only minor filtering (such as Common Crawl). This compares favorably to supervised learning, where the dataset (such as the ImageNet1000) is typically constructed manually, which is much more expensive. There were algorithms designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decision Tree

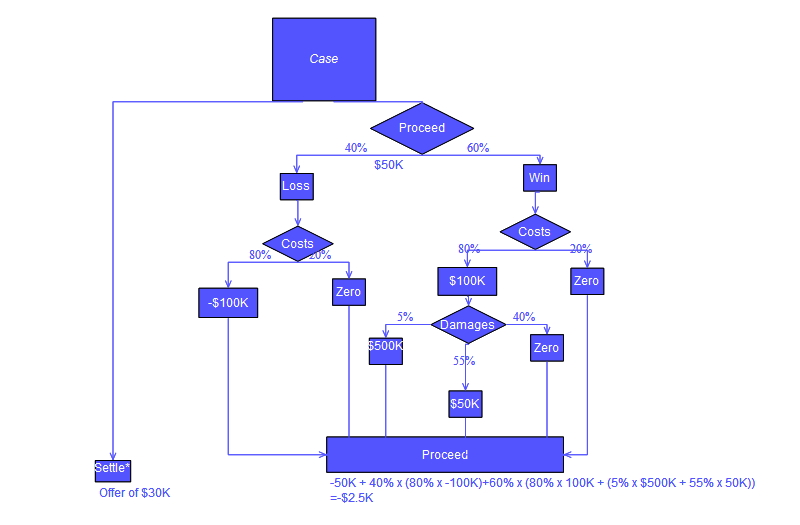

A decision tree is a decision support system, decision support recursive partitioning structure that uses a Tree (graph theory), tree-like Causal model, model of decisions and their possible consequences, including probability, chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in Decision tree learning, machine learning. Overview A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decision Rules

A decision tree is a decision support recursive partitioning structure that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning. Overview A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a decision tree and the closely related influence diagram are used as a visual and analytical decis ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called '' artificial neurons'', which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. These are connected by ''edges'', which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the '' activation function''. The strength of the signal at each connection is determined by a ''weight'', which adjusts during the learning process. Typically, ne ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

RBF Network

In the field of mathematical modeling, a radial basis function network is an artificial neural network that uses radial basis functions as activation functions. The output of the network is a linear combination of radial basis functions of the inputs and neuron parameters. Radial basis function networks have many uses, including function approximation, time series prediction, classification, and system control. They were first formulated in a 1988 paper by Broomhead and Lowe, both researchers at the Royal Signals and Radar Establishment. Network architecture Radial basis function (RBF) networks typically have three layers: an input layer, a hidden layer with a non-linear RBF activation function and a linear output layer. The input can be modeled as a vector of real numbers \mathbf \in \mathbb^n. The output of the network is then a scalar function of the input vector, \varphi : \mathbb^n \to \mathbb , and is given by :\varphi(\mathbf) = \sum_^N a_i \rho(, , \mathbf-\mathbf_i, , ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lalita Udpa

Lalita Udpa is University Distinguished Professor of Electrical and Computer Engineering at Michigan State University. She was educated in India and the US, and focuses her research on nondestructive testing, including the inspection of aircraft and pipelines. Education Udpa studied physics at the University of Pune in India, earning a bachelor's degree in 1972 and a master's degree in 1974. She came to Colorado State University in the US for continued study in electrical engineering, earned a second master's degree in 1981, and completed her Ph.D. in 1986. Recognition Udpa was named as a Fellow of the American Society for Nondestructive Testing in 2007, and was the 2015 recipient of the society's Advancement of Women in NDT Recognition Award. She was named an IEEE Fellow , the Institute of Electrical and Electronics Engineers The Institute of Electrical and Electronics Engineers (IEEE) is an American 501(c)(3) public charity professional organization for electrical engi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the ''kernel trick'', representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Stream

In connection-oriented communication, a data stream is the transmission of a sequence of digitally encoded signals to convey information. Typically, the transmitted symbols are grouped into a series of packets. Data streaming has become ubiquitous. Anything transmitted over the Internet is transmitted as a data stream. Using a mobile phone to have a conversation transmits the sound as a data stream. Formal definition In a formal way, a data stream is any ordered pair ( s, \Delta ) where: # s is a sequence of tuples and # \Delta is a sequence of positive real time intervals. Content Data Stream contains different sets of data, that depend on the chosen data format. * Attributes – each attribute of the data stream represents a certain type of data, e.g. segment / data point ID, timestamp, geodata. * Timestamp attribute helps to identify when an event occurred. * Subject ID is an encoded-by-algorithm ID, that has been extracted out of a cookie. * Raw Data in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big Data

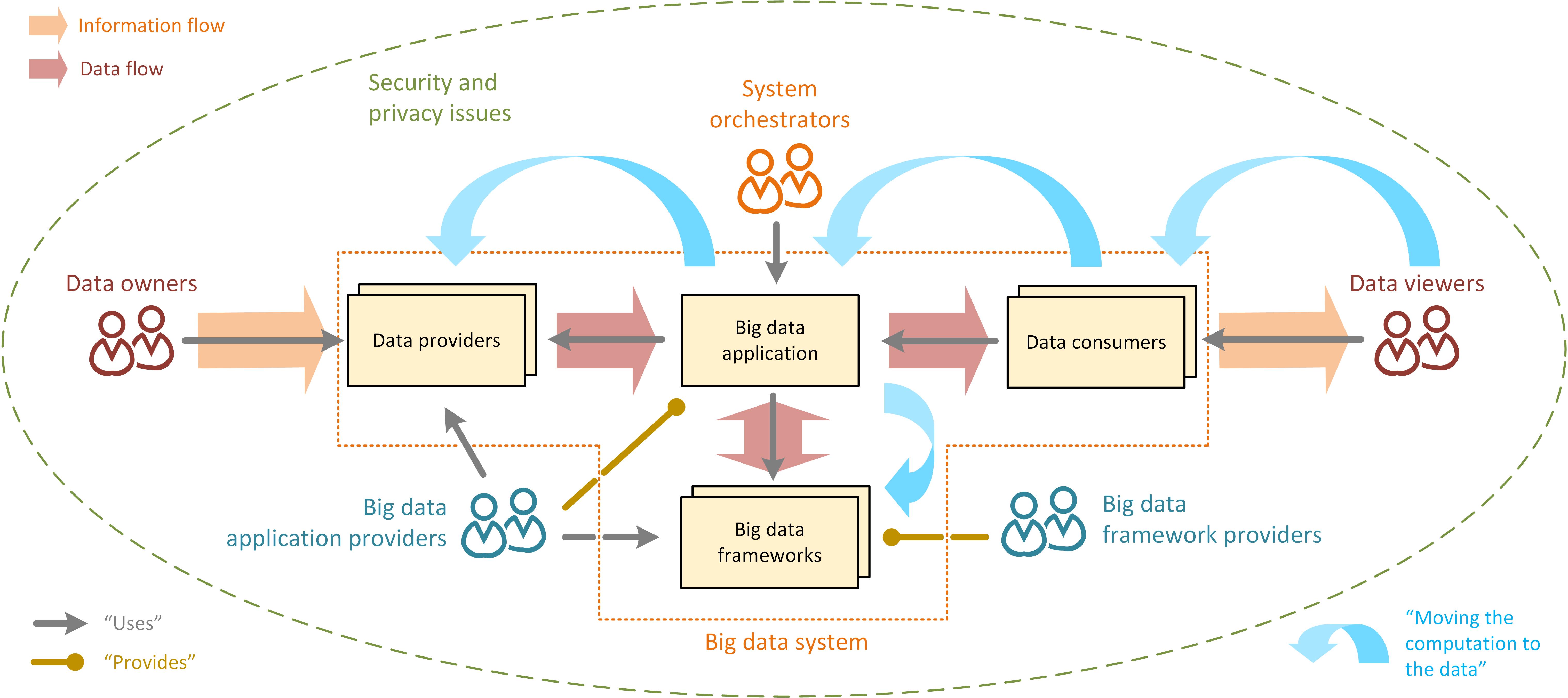

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data processing, data-processing application software, software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include Automatic identification and data capture, capturing data, Computer data storage, data storage, data analysis, search, Data sharing, sharing, Data transmission, transfer, Data visualization, visualization, Query language, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. Thus a fourth concept, ''veracity,'' refers to the quality or insightfulness of the data. Without sufficient investm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |