Consistency Models

In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in either semantic or syntactic terms. The semantic definition states that a theory is consistent if it has a model, i.e., there exists an interpretation under which all formulas in the theory are true. This is the sense used in traditional Aristotelian logic, although in contemporary mathematical logic the term ''satisfiable'' is used instead. The syntactic definition states that a theory T is consistent if there is no formula \varphi such that both \varphi and its negation \lnot\varphi are elements of the set of consequences of T. Let A be a set of closed sentences (informally "axioms") and \langle A\rangle the set of closed sentences provable from A under some (specified, possibly implicitly) formal deductive system. The set of axioms A is consistent when \varphi, \lnot \varphi \in \langle A \rangle for no formula \varphi. If there exis ...
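The semantic definition can be illustrated for the propositional case with a brute-force model search. This is only a sketch under stated assumptions: the tuple encoding of formulas and the helper names `evaluate`, `variables`, and `find_model` are hypothetical and not taken from the text, and the search only applies to finite propositional theories.

```python
from itertools import product

# Formulas are nested tuples (an assumed encoding for this sketch):
#   ("var", "p"), ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g)

def evaluate(formula, valuation):
    """Truth value of a formula under a valuation (dict: name -> bool)."""
    op = formula[0]
    if op == "var":
        return valuation[formula[1]]
    if op == "not":
        return not evaluate(formula[1], valuation)
    if op == "and":
        return evaluate(formula[1], valuation) and evaluate(formula[2], valuation)
    if op == "or":
        return evaluate(formula[1], valuation) or evaluate(formula[2], valuation)
    if op == "imp":
        return (not evaluate(formula[1], valuation)) or evaluate(formula[2], valuation)
    raise ValueError(f"unknown connective: {op!r}")

def variables(formula):
    """Set of variable names occurring in a formula."""
    if formula[0] == "var":
        return {formula[1]}
    return set().union(*(variables(sub) for sub in formula[1:]))

def find_model(theory):
    """Return a valuation making every formula in the theory true, or None."""
    names = sorted(set().union(*(variables(f) for f in theory)))
    for values in product([False, True], repeat=len(names)):
        valuation = dict(zip(names, values))
        if all(evaluate(f, valuation) for f in theory):
            return valuation
    return None

P, Q = ("var", "p"), ("var", "q")
print(find_model([P, ("imp", P, Q)]))  # a model exists, so the theory is consistent
print(find_model([P, ("not", P)]))     # no model: the theory contains p and its negation
```

A theory is semantically consistent in this sketch exactly when `find_model` returns a valuation rather than `None`.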

Classical Logic

Classical logic (or standard logic or Frege-Russell logic) is the intensively studied and most widely used class of deductive logic. Classical logic has had much influence on analytic philosophy.

Characteristics

Each logical system in this class shares characteristic properties (Gabbay, Dov, (1994). 'Classical vs non-classical logic'. In D.M. Gabbay, C.J. Hogger, and J.A. Robinson, (Eds), ''Handbook of Logic in Artificial Intelligence and Logic Programming'', volume 2, chapter 2.6. Oxford University Press):

1. Law of excluded middle and double negation elimination
2. Law of noncontradiction, and the principle of explosion
3. Monotonicity of entailment and idempotency of entailment
4. Commutativity of conjunction
5. De Morgan duality: every logical operator is dual to another

While not entailed by the preceding conditions, contemporary discussions of classical logic normally only include propositional and first-order logics. Shapiro, Stewart (2000). Classical Logic. In Stanford Encyclop ...
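Several of the properties listed above can be checked mechanically for two-valued propositional logic with truth tables. The sketch below is illustrative only: the names `implies`, `is_tautology`, and `laws` are hypothetical, and it does not cover monotonicity or idempotency of entailment, which are properties of the consequence relation rather than of single formulas.

```python
from itertools import product

def implies(a, b):
    """Material implication on Python booleans."""
    return (not a) or b

def is_tautology(f, arity):
    """True if f returns True under every assignment of its arguments."""
    return all(f(*vals) for vals in product([False, True], repeat=arity))

# Each entry pairs a law with (formula as a Python function, number of variables).
laws = {
    "excluded middle": (lambda p: p or not p, 1),
    "double negation elimination": (lambda p: implies(not (not p), p), 1),
    "noncontradiction": (lambda p: not (p and not p), 1),
    "explosion": (lambda p, q: implies(p and not p, q), 2),
    "commutativity of conjunction": (lambda p, q: (p and q) == (q and p), 2),
    "De Morgan duality": (lambda p, q: (not (p and q)) == ((not p) or (not q)), 2),
}

results = {name: is_tautology(f, n) for name, (f, n) in laws.items()}
for name, ok in results.items():
    print(f"{name}: {ok}")
```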

Emil Post

Emil Leon Post (February 11, 1897 – April 21, 1954) was an American mathematician and logician. He is best known for his work in the field that eventually became known as computability theory.

Life

Post was born in Augustów, Suwałki Governorate, Congress Poland, Russian Empire (now Poland) into a Polish-Jewish family that immigrated to New York City in May 1904. His parents were Arnold and Pearl Post. Post had been interested in astronomy, but at the age of twelve lost his left arm in a car accident. This loss was a significant obstacle to being a professional astronomer, leading to his decision to pursue mathematics rather than astronomy. Post attended the Townsend Harris High School and continued on to graduate from City College of New York in 1917 with a B.S. in Mathematics. After completing his Ph.D. in mathematics in 1920 at Columbia University, supervised by Cassius Jackson Keyser, he did a post-doctorate at Princeton University in the 1920–1921 academic year. Pos ...

Peano Arithmetic

In mathematical logic, the Peano axioms, also known as the Dedekind–Peano axioms or the Peano postulates, are axioms for the natural numbers presented by the 19th-century Italian mathematician Giuseppe Peano. These axioms have been used nearly unchanged in a number of metamathematical investigations, including research into fundamental questions of whether number theory is consistent and complete. The need to formalize arithmetic was not well appreciated until the work of Hermann Grassmann, who showed in the 1860s that many facts in arithmetic could be derived from more basic facts about the successor operation and induction. In 1881, Charles Sanders Peirce provided an axiomatization of natural-number arithmetic. In 1888, Richard Dedekind proposed another axiomatization of natural-number arithmetic, and in 1889, Peano published a simplified version of them as a collection of axioms in his book, ''The principles of arithmetic presented by a new method'' (Latin: Arithmetice ...

Normalization Property

In abstract rewriting, an object is in normal form if it cannot be rewritten any further, i.e. it is irreducible. Depending on the rewriting system, an object may rewrite to several normal forms or none at all. Many properties of rewriting systems relate to normal forms.

Definitions

Stated formally, if (''A'',→) is an abstract rewriting system, ''x''∈''A'' is in normal form if no ''y''∈''A'' exists such that ''x''→''y'', i.e. ''x'' is an irreducible term. An object ''a'' is weakly normalizing if there exists at least one particular sequence of rewrites starting from ''a'' that eventually yields a normal form. A rewriting system has the weak normalization property or is ''(weakly) normalizing'' (WN) if every object is weakly normalizing. An object ''a'' is strongly normalizing if every sequence of rewrites starting from ''a'' eventually terminates with a normal form. An abstract rewriting system is ''strongly normalizing'', ''terminating'', ''noetherian'', or has the (stro ...
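For a finite abstract rewriting system, the three notions defined above can be checked directly. The following is a minimal sketch, assuming the relation → is given as a dictionary from each object to its one-step successors; the function names and the example system `ars` are hypothetical. In the finite case an object is strongly normalizing exactly when no cycle is reachable from it, which is what the second search tests.

```python
def is_normal_form(ars, x):
    """x is in normal form if it has no one-step successor."""
    return not ars.get(x, ())

def weakly_normalizing(ars, a):
    """True if at least one rewrite sequence from a reaches a normal form."""
    seen, stack = set(), [a]
    while stack:
        x = stack.pop()
        if x in seen:
            continue
        seen.add(x)
        if is_normal_form(ars, x):
            return True
        stack.extend(ars[x])
    return False

def strongly_normalizing(ars, a):
    """True if every rewrite sequence from a terminates (finite systems only)."""
    state = {}  # object -> "active" (on the current path) or "done"

    def visit(x):
        if state.get(x) == "active":
            return False  # a cycle is reachable, so an infinite sequence exists
        if state.get(x) == "done":
            return True
        state[x] = "active"
        ok = all(visit(y) for y in ars.get(x, ()))
        state[x] = "done"
        return ok

    return visit(a)

# Hypothetical example: a -> b, a -> c, b -> b, and c has no successors.
ars = {"a": ["b", "c"], "b": ["b"], "c": []}
print(is_normal_form(ars, "c"))        # c is irreducible
print(weakly_normalizing(ars, "a"))    # a -> c reaches a normal form
print(strongly_normalizing(ars, "a"))  # a -> b -> b -> ... never terminates
```

In this example `a` is weakly but not strongly normalizing, which shows that the two properties differ.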

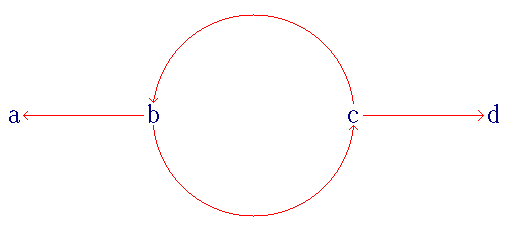

Cut-elimination

The cut-elimination theorem (or Gentzen's ''Hauptsatz'') is the central result establishing the significance of the sequent calculus. It was originally proved by Gerhard Gentzen in his landmark 1934 paper "Investigations in Logical Deduction" for the systems LJ and LK formalising intuitionistic and classical logic respectively. The cut-elimination theorem states that any judgement that possesses a proof in the sequent calculus making use of the cut rule also possesses a cut-free proof, that is, a proof that does not make use of the cut rule.

The cut rule

A sequent is a logical expression relating multiple formulas, in the form A_1, A_2, A_3, \ldots \vdash B_1, B_2, B_3, \ldots, which is to be read as "A_1, A_2, A_3, \ldots proves B_1, B_2, B_3, \ldots", and (as glossed by Gentzen) should be understood as equivalent to the truth-function "If (A_1 and A_2 and A_3 …) then (B_1 or B_2 or B_3 …)." Note that the left-hand side (LHS) is a conjunction (and) and the right-hand side (RHS) is a disjunction (or). The LHS may have arbitrarily many or few formulae; when the LH ...
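The truth-functional gloss of a sequent can be written down directly. This is a small sketch under the assumption that the formulas on each side have already been evaluated to booleans; the function name `sequent_holds` is hypothetical.

```python
def sequent_holds(antecedents, succedents):
    """Truth-function reading: if (all of the LHS) then (at least one of the RHS)."""
    # Python conventions for empty sides: all([]) is True and any([]) is False.
    return (not all(antecedents)) or any(succedents)

print(sequent_holds([True, True], [False, True]))  # LHS true, one RHS formula true
print(sequent_holds([True, True], [False]))        # LHS true, no RHS formula true
print(sequent_holds([True, False], []))            # LHS not jointly true
```

The conjunction on the left and the disjunction on the right appear as `all` and `any` respectively.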

Incompleteness Theorems

Complete may refer to:

Logic

* Completeness (logic)
* Completeness of a theory, the property of a theory that every formula in the theory's language or its negation is provable

Mathematics

* The completeness of the real numbers, which implies that there are no "holes" in the real numbers
* Complete metric space, a metric space in which every Cauchy sequence converges
* Complete uniform space, a uniform space where every Cauchy net in the space converges (or equivalently every Cauchy filter converges)
* Complete measure, a measure space where every subset of every null set is measurable
* Completion (algebra), at an ideal
* Completeness (cryptography)
* Completeness (statistics), a statistic that does not allow an unbiased estimator of zero
* Complete graph, an undirected graph in which every pair of vertices has exactly one edge connecting them
* Complete category, a category ''C'' where every diagram from a small category to ''C'' has a limit; it is ''cocomplete'' if every such functor ha ...

Hilbert's Program

In mathematics, Hilbert's program, formulated by German mathematician David Hilbert in the early part of the 20th century, was a proposed solution to the foundational crisis of mathematics, when early attempts to clarify the foundations of mathematics were found to suffer from paradoxes and inconsistencies. As a solution, Hilbert proposed to ground all existing theories to a finite, complete set of axioms, and provide a proof that these axioms were consistent. Hilbert proposed that the consistency of more complicated systems, such as real analysis, could be proven in terms of simpler systems. Ultimately, the consistency of all of mathematics could be reduced to basic arithmetic. Gödel's incompleteness theorems, published in 1931, showed that Hilbert's program was unattainable for key areas of mathematics. In his first theorem, Gödel showed that any consistent system with a computable set of axioms which is capable of expressing arithmetic can never be complete: it is possible to ...

Proof Theory

Proof theory is a major branch of mathematical logic that represents proofs as formal mathematical objects, facilitating their analysis by mathematical techniques. (According to Wang (1981), pp. 3–4, proof theory is one of four domains of mathematical logic, together with model theory, axiomatic set theory, and recursion theory. Barwise (1978) consists of four corresponding parts, with part D being about "Proof Theory and Constructive Mathematics".) Proofs are typically presented as inductively-defined data structures such as lists, boxed lists, or trees, which are constructed according to the axioms and rules of inference of the logical system. Consequently, proof theory is syntactic in nature, in contrast to model theory, which is semantic in nature. Some of the major areas of proof theory include structural proof theory, ...
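The idea of a proof as an inductively defined tree can be sketched as follows. This is an illustration only, assuming a toy system whose sole rule of inference is modus ponens; the `Proof` class, the `check` function, and the tuple encoding `("imp", a, b)` for implications are hypothetical and not taken from the text.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Proof:
    """A proof tree: a conclusion, the rule that produced it, and sub-proofs."""
    conclusion: object                    # atom (str) or ("imp", a, b)
    rule: str                             # "axiom" or "mp" (modus ponens)
    premises: Tuple["Proof", ...] = ()

def check(proof, axioms):
    """Verify that every node of the tree is a correct rule application."""
    if proof.rule == "axiom":
        return not proof.premises and proof.conclusion in axioms
    if proof.rule == "mp":
        if len(proof.premises) != 2:
            return False
        major, minor = proof.premises
        # Modus ponens: from (minor -> conclusion) and minor, infer conclusion.
        return (major.conclusion == ("imp", minor.conclusion, proof.conclusion)
                and check(major, axioms)
                and check(minor, axioms))
    return False

axioms = {"p", ("imp", "p", "q")}
proof_of_q = Proof("q", "mp", (Proof(("imp", "p", "q"), "axiom"), Proof("p", "axiom")))
print(check(proof_of_q, axioms))            # the tree is a valid derivation of q
print(check(Proof("r", "axiom"), axioms))   # r is not an axiom
```

Checking the proof inspects only the shape of the tree and the formulas at its nodes, which reflects the syntactic character described above.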

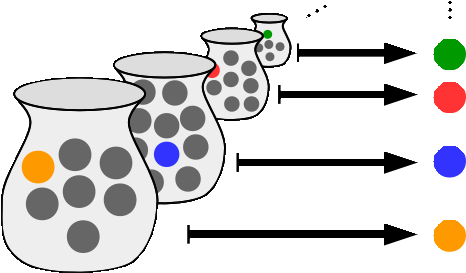

Axiom Of Choice

In mathematics, the axiom of choice, or AC, is an axiom of set theory equivalent to the statement that ''a Cartesian product of a collection of non-empty sets is non-empty''. Informally put, the axiom of choice says that given any collection of sets, each containing at least one element, it is possible to construct a new set by arbitrarily choosing one element from each set, even if the collection is infinite. Formally, it states that for every indexed family (S_i)_{i \in I} of nonempty sets, there exists an indexed set (x_i)_{i \in I} such that x_i \in S_i for every i \in I. The axiom of choice was formulated in 1904 by Ernst Zermelo in order to formalize his proof of the well-ordering theorem. In many cases, a set arising from choosing elements arbitrarily can be made without invoking the axiom of choice; this is, in particular, the case if the number of sets from which to choose the elements is finite, or if a canonical rule on how to choose the elements is available – some distinguishin ...
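The case where a canonical rule is available can be made concrete for a finite family of sets of comparable elements. This is a minimal sketch: the function name `choice_function` and the use of `min` as the canonical rule are assumptions for illustration, and nothing here requires the axiom of choice.

```python
def choice_function(family):
    """Pick one element x_i from each nonempty set S_i using a canonical rule (min)."""
    for index, members in family.items():
        if not members:
            raise ValueError(f"set at index {index!r} is empty")
    return {index: min(members) for index, members in family.items()}

# Hypothetical indexed family (S_i) with index set I = {"a", "b", "c"}.
family = {"a": {3, 1, 2}, "b": {10}, "c": {7, 5}}
chosen = choice_function(family)
print(chosen)
```

The result is an indexed set (x_i) with x_i in S_i for every index i, matching the formal statement above.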

Mathematical Proof

A mathematical proof is an inferential argument for a mathematical statement, showing that the stated assumptions logically guarantee the conclusion. The argument may use other previously established statements, such as theorems; but every proof can, in principle, be constructed using only certain basic or original assumptions known as axioms, along with the accepted rules of inference. Proofs are examples of exhaustive deductive reasoning which establish logical certainty, to be distinguished from empirical arguments or non-exhaustive inductive reasoning which establish "reasonable expectation". Presenting many cases in which the statement holds is not enough for a proof, which must demonstrate that the statement is true in ''all'' possible cases. A proposition that has not been proved but is believed to be true is known as a conjecture, or a hypothesis if frequently used as an assumption for further mathematical work. Proofs employ logic expressed in mathematical symbols ...

Second-order Logic

In logic and mathematics, second-order logic is an extension of first-order logic, which itself is an extension of propositional logic. Second-order logic is in turn extended by higher-order logic and type theory. First-order logic quantifies only variables that range over individuals (elements of the domain of discourse); second-order logic, in addition, quantifies over relations. For example, the second-order sentence \forall P\,\forall x (Px \lor \neg Px) says that for every property (unary relation) ''P'', and every individual ''x'', either ''Px'' is true or not(''Px'') is true (this is the law of excluded middle). Second-order logic also includes quantification over sets, functions, and other variables (see section below). Both first-order and second-order logic use the idea of a domain of discourse (often called simply the "domain" or the "universe"). The domain is a set over which individual elements may be quantified.

Examples

First-order logic can quantify over individuals, bu ...
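Over a finite domain, the sentence \forall P\,\forall x (Px \lor \neg Px) can be checked by enumerating every candidate for ''P''. The sketch below assumes that ''P'' ranges over all subsets of the domain and that ''Px'' means membership of ''x'' in that subset; the function names are hypothetical.

```python
from itertools import chain, combinations

def all_unary_predicates(domain):
    """Every subset of the domain, each read as the extension of a predicate P."""
    items = list(domain)
    subsets = chain.from_iterable(
        combinations(items, size) for size in range(len(items) + 1)
    )
    return [frozenset(subset) for subset in subsets]

def excluded_middle_holds(domain):
    """Check: for all P and all x in the domain, Px or not Px."""
    return all(
        (x in predicate) or (x not in predicate)
        for predicate in all_unary_predicates(domain)
        for x in domain
    )

domain = {0, 1, 2}
print(len(all_unary_predicates(domain)))  # number of subsets of a 3-element domain
print(excluded_middle_holds(domain))
```

The outer loop over predicates is the second-order quantifier and the inner loop over individuals is the first-order one.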