
Conceptual Dependency Theory

Conceptual dependency theory is a model of natural language understanding used in artificial intelligence systems. Roger Schank at Stanford University introduced the model in 1969, in the early days of artificial intelligence. Schank's students at Yale University, such as Robert Wilensky, Wendy Lehnert, and Janet Kolodner, used the model extensively.

Schank developed the model to represent knowledge for natural language input into computers. Partly influenced by the work of Sydney Lamb, his goal was to make the meaning independent of the words used in the input, i.e. two sentences identical in meaning would have a single representation. The system was also intended to draw logical inferences.

The model uses the following basic representational tokens (''Language, Mind, and Brain'' by Thomas W. Simon and Robert J. Scholes, 1982, page 105):

* ''real world objects'', each with some ''attributes''
* ''real world actions'', each with attributes
* ''times''
* ''locations''

A set ...
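The goal of a single representation for sentences with the same meaning can be illustrated with a minimal sketch. It assumes a toy two-verb lexicon and hypothetical class and function names (`Entity`, `Conceptualization`, `interpret`); only the action label `ATRANS`, the name Schank's work uses for a transfer of possession, comes from the theory itself. It is not a reconstruction of any actual conceptual dependency system.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Entity:
    """A real-world object with optional attributes (hypothetical type)."""
    name: str
    attributes: Tuple[str, ...] = ()


@dataclass(frozen=True)
class Conceptualization:
    """A word-independent record of one action (hypothetical type)."""
    action: str
    obj: Entity
    source: Entity
    recipient: Entity
    time: Optional[str] = None
    location: Optional[str] = None


def interpret(subject: str, verb: str, obj: str, other: str) -> Conceptualization:
    """Map (subject, verb, object, other party) to a conceptualization.

    The two-verb lexicon is an assumption made for illustration only.
    """
    if verb == "gave":
        source, recipient = subject, other
    elif verb == "received":
        source, recipient = other, subject
    else:
        raise ValueError(f"verb not in the toy lexicon: {verb}")
    return Conceptualization(
        action="ATRANS",  # transfer of possession
        obj=Entity(obj),
        source=Entity(source),
        recipient=Entity(recipient),
    )


# "John gave Mary a book" and "Mary received a book from John"
gave = interpret("John", "gave", "book", "Mary")
received = interpret("Mary", "received", "book", "John")
print(gave == received)
```

Because both dataclasses are frozen and compare by value, the two differently worded sentences yield equal objects, which is the property the model aims for.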

Natural Language Understanding

Natural-language understanding (NLU) or natural-language interpretation (NLI) is a subtopic of natural-language processing in artificial intelligence that deals with machine reading comprehension. Natural-language understanding is considered an AI-hard problem. There is considerable commercial interest in the field because of its application to automated reasoning, machine translation, question answering, news-gathering, text categorization, voice-activation, archiving, and large-scale content analysis.

History: The program STUDENT, written in 1964 by Daniel Bobrow for his PhD dissertation at MIT, is one of the earliest known attempts at natural-language understanding by a computer. Eight years after John McCarthy coined the term artificial intelligence, Bobrow's dissertation (titled ''Natural Language Input for a Computer Problem Solving System'') showed how a computer could understand simple natural language input to solve algebra word problems. A year later, in 1965, J ...

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks include speech recognition, computer vision, translation between (natural) languages, and other mappings of inputs. The ''Oxford English Dictionary'' of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Roger Schank

Roger Carl Schank (born 1946) is an American artificial intelligence theorist, cognitive psychologist, learning scientist, educational reformer, and entrepreneur. Beginning in the late 1960s, he pioneered conceptual dependency theory (within the context of natural language understanding) and case-based reasoning, both of which challenged cognitivist views of memory and reasoning. In 1989, Schank was granted $30 million in a 10-year commitment to his research and development by Andersen Consulting, through which he founded the Institute for the Learning Sciences (ILS) at Northwestern University in Chicago.

Academic career: For his undergraduate degree, Schank studied mathematics at Carnegie Mellon University in Pittsburgh, PA. He was later awarded a PhD in linguistics at the University of Texas in Austin and went on to work in faculty positions at Stanford University and then at Yale University. In 1974, he became professor of computer science and psychology at Yale University. I ...

Stanford University

Stanford University, officially Leland Stanford Junior University, is a private research university in Stanford, California. The campus is among the largest in the United States and enrolls over 17,000 students. Stanford is considered among the most prestigious universities in the world. Stanford was founded in 1885 by Leland and Jane Stanford in memory of their only child, Leland Stanford Jr., who had died of typhoid fever at age 15 the previous year. Leland Stanford was a U.S. senator and former governor of California who made his fortune as a railroad tycoon. The school admitted its first students on October 1, 1891, as a coeducational and non-denominational institution. Stanford University struggled financially after the death of Leland Stanford in 1893 and again after much of the campus was damaged by the 1906 San Francisco earthquake. Following World War II, provost of Stanford Frederick Terman inspired and supported faculty and graduates' entrepreneu ...

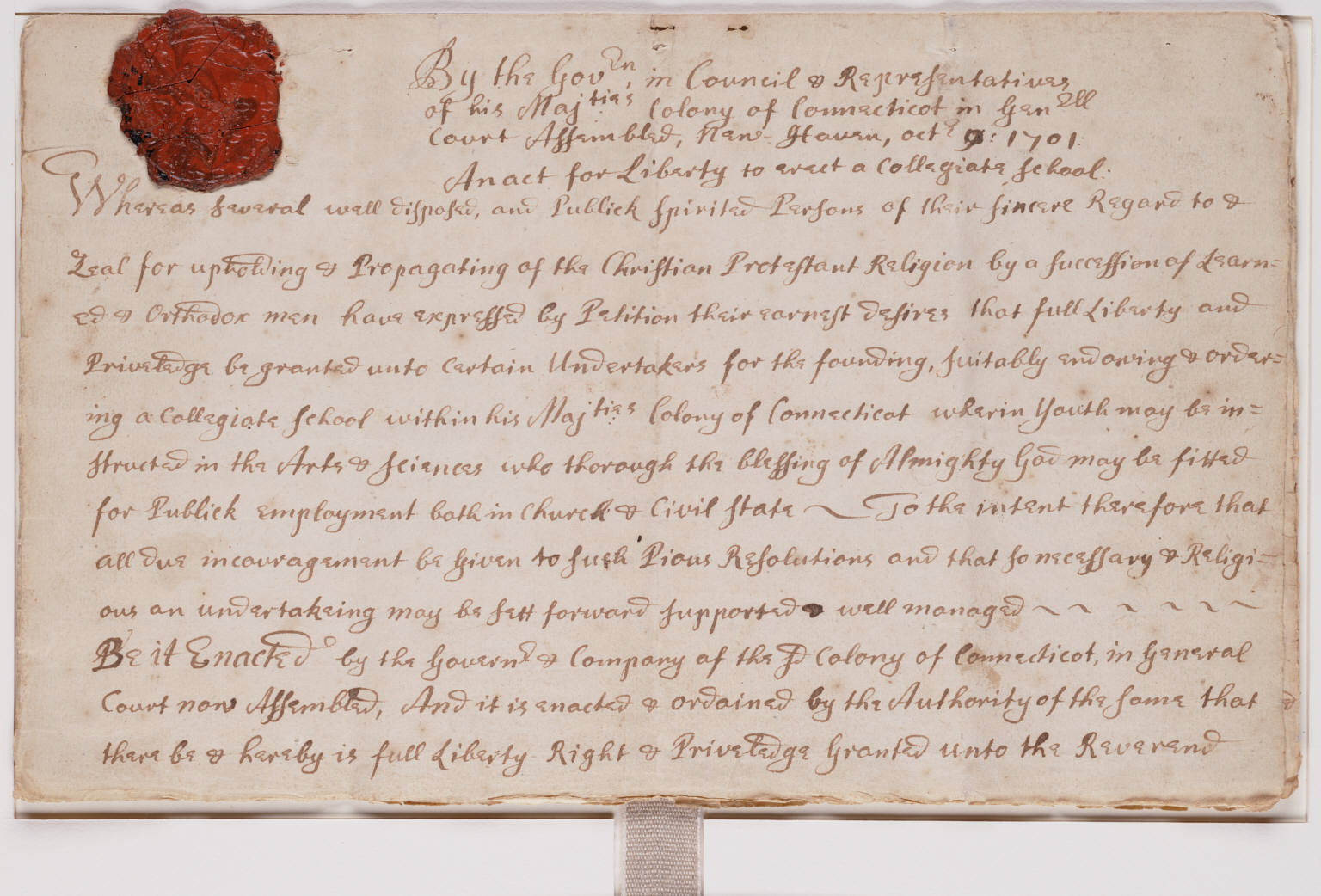

Yale University

Yale University is a private research university in New Haven, Connecticut. Established in 1701 as the Collegiate School, it is the third-oldest institution of higher education in the United States and among the most prestigious in the world. It is a member of the Ivy League. Chartered by the Connecticut Colony, the Collegiate School was established in 1701 by clergy to educate Congregational ministers before moving to New Haven in 1716. Originally restricted to theology and sacred languages, the curriculum began to incorporate humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first PhD in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew after 1890 with rapid expansion of the physical campus and scientific research. Yale is organized into fourteen constituent schools: the original undergraduate col ...

Robert Wilensky

Robert Wilensky (26 March 1951 – 15 March 2013) was an American computer scientist and emeritus professor at the UC Berkeley School of Information, whose research focused mainly on artificial intelligence.

Academic career: In 1971, Wilensky received his bachelor's degree in mathematics from Yale University, and in 1978, a Ph.D. in computer science from the same institution. After finishing his thesis, "Understanding Goal-Based Stories", Wilensky joined the faculty of the EECS Department of UC Berkeley. In 1986, he worked as the doctoral advisor of Peter Norvig, who later published the standard textbook of the field: ''Artificial Intelligence: A Modern Approach''. From 1993 to 1997, Wilensky was the Berkeley Computer Science Division Chair. During this time, he also served as director of the Berkeley Cognitive Science Program, director of the Berkeley Artificial Intelligence Research Project, and board member of the International Computer Science Institute. In 1997 ...

Janet Kolodner

Janet Lynne Kolodner is an American cognitive scientist and learning scientist and Regents' Professor Emerita in the School of Interactive Computing, College of Computing at the Georgia Institute of Technology. She was Founding Editor in Chief of ''The Journal of the Learning Sciences'' and served in that role for 19 years. She was Founding Executive Officer of the International Society of the Learning Sciences (ISLS). From August, 2010 through July, 2014, she was a program officer at the National Science Foundation and headed up the Cyberlearning and Future Learning Technologies program (originally called Cyberlearning: Transforming Education). Since finishing at NSF, she is working toward a set of projects that will integrate learning technologies coherently to support disciplinary and everyday learning, support project-based pedagogy that works, and connect to the best in curriculum for active learning. As of July, 2020, she is a Professor of the Practice at the Lynch Schoo ...

Sydney Lamb

Sydney MacDonald Lamb (born May 4, 1929 in Denver, Colorado) is an American linguist and professor at Rice University, whose stratificational grammar is a significant alternative theory to Chomsky's transformational grammar. He has specialized in neurocognitive linguistics and a stratificational approach to language understanding. Lamb earned his Ph.D. from the University of California, Berkeley in 1958 and taught there from 1956 to 1964. His dissertation was a grammar of the Uto-Aztecan language Mono, under the direction of Mary Haas and Murray B. Emeneau. In 1964, he began teaching at Yale University before joining the Semionics Associates in Berkeley, California in 1977. Lamb did research in North American Indian languages, specifically those geographically centered on California. His contributions have been wide-ranging, including those to historical linguistics, computational linguistics, and the theory of linguistic structure. His work led to innovative designs of ...

Augmented Transition Network

An augmented transition network or ATN is a type of graph theoretic structure used in the operational definition of formal languages, used especially in parsing relatively complex natural languages, and having wide application in artificial intelligence. An ATN can, theoretically, analyze the structure of any sentence, however complicated. ATNs are modified transition networks and an extension of RTNs. ATNs build on the idea of using finite state machines (Markov model) to parse sentences. W. A. Woods in "Transition Network Grammars for Natural Language Analysis" claims that by adding a recursive mechanism to a finite state model, parsing can be achieved much more efficiently. Instead of building an automaton for a particular sentence, a collection of transition graphs is built. A grammatically correct sentence is parsed by reaching a final state in any state graph. Transitions between these graphs are simply subroutine calls from one state to any initial state on any graph in ...
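The idea of a collection of transition graphs that call one another like subroutines can be sketched as a small recognizer. The sketch covers only the recursive transition network core, not the registers and conditions that make an ATN "augmented". The grammar, the lexicon, and the names `NETWORKS`, `LEXICON`, `traverse`, and `accepts` are all hypothetical and chosen for illustration.

```python
from typing import List, Set

# Toy lexicon mapping words to categories (an assumption for this sketch).
LEXICON = {"the": "DET", "a": "DET", "dog": "N", "cat": "N",
           "park": "N", "saw": "V", "in": "P"}

# One transition graph per phrase type. An arc label is either a word
# category (consumes one word) or the name of another graph (a call).
NETWORKS = {
    "S": {"start": "s0", "finals": {"s2"},
          "arcs": {"s0": [("NP", "s1")], "s1": [("VP", "s2")]}},
    "NP": {"start": "n0", "finals": {"n2"},
           "arcs": {"n0": [("DET", "n1")], "n1": [("N", "n2")],
                    "n2": [("PP", "n2")]}},
    "VP": {"start": "v0", "finals": {"v1", "v2"},
           "arcs": {"v0": [("V", "v1")], "v1": [("NP", "v2")]}},
    "PP": {"start": "p0", "finals": {"p2"},
           "arcs": {"p0": [("P", "p1")], "p1": [("NP", "p2")]}},
}


def traverse(net: str, state: str, pos: int, words: List[str]) -> Set[int]:
    """Return every input position at which `net` can reach a final state."""
    graph = NETWORKS[net]
    ends: Set[int] = set()
    if state in graph["finals"]:
        ends.add(pos)
    for label, nxt in graph["arcs"].get(state, []):
        if label in NETWORKS:
            # Subroutine call: run the sub-graph from its initial state,
            # then resume this graph wherever the sub-graph finished.
            for mid in traverse(label, NETWORKS[label]["start"], pos, words):
                ends |= traverse(net, nxt, mid, words)
        elif pos < len(words) and LEXICON.get(words[pos]) == label:
            ends |= traverse(net, nxt, pos + 1, words)
    return ends


def accepts(sentence: str) -> bool:
    """True if the S graph reaches a final state after consuming every word."""
    words = sentence.lower().split()
    return len(words) in traverse("S", NETWORKS["S"]["start"], 0, words)


print(accepts("the dog saw a cat in the park"))
print(accepts("dog the saw"))
```

Every graph in this toy grammar begins with an arc that consumes a word, so the recursion always advances through the input; a grammar with left-recursive calls would need extra bookkeeping to terminate.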

Conceptual Space

A conceptual space is a geometric structure that represents a number of quality dimensions, which denote basic features by which concepts and objects can be compared, such as weight, color, taste, temperature, pitch, and the three ordinary spatial dimensions (Kriegeskorte, N., & Kievit, R. A. (2013). Representational geometry: Integrating cognition, computation, and the brain. ''Trends in Cognitive Sciences, 17''(8), 401–412. http://doi.org/10.1016/j.tics.2013.06.007). In a conceptual space, ''points'' denote objects, and ''regions'' denote concepts. The theory of conceptual spaces is a theory about concept learning first proposed by Peter Gärdenfors (Foo, N. (2001). Conceptual Spaces: The Geometry of Thought. ''AI Magazine, 22''(1), 139–140). It is motivated by notions such as conceptual similarity and prototype theory. The theory also puts forward the notion that ''natural'' categories are convex regions in conceptual spaces. In that ...
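The relation between points, regions, and convexity can be illustrated with a minimal sketch. It assumes two hypothetical taste dimensions (sweetness and sourness), made-up prototype coordinates, and a nearest-prototype rule with Euclidean distance. That rule is one simple way to obtain convex regions and is used here as an illustrative assumption, not as the definition the theory itself gives.

```python
from math import dist  # available in Python 3.8+
from typing import Tuple

Point = Tuple[float, float]  # (sweetness, sourness), hypothetical dimensions

# Hypothetical prototypes: one representative point per concept.
PROTOTYPES = {
    "candy": (0.9, 0.1),
    "lemon": (0.2, 0.9),
    "water": (0.0, 0.0),
}


def categorize(point: Point) -> str:
    """Assign a point (an object) to the concept with the nearest prototype."""
    return min(PROTOTYPES, key=lambda name: dist(point, PROTOTYPES[name]))


def midpoint(a: Point, b: Point) -> Point:
    """Return the point halfway between a and b."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


a, b = (0.8, 0.2), (1.0, 0.0)
# Convexity check on one pair: if a and b fall in the same region,
# the point between them should fall in that region too.
print(categorize(a), categorize(b), categorize(midpoint(a, b)))
```

With Euclidean distance, each nearest-prototype region is a cell of a Voronoi diagram and is therefore convex, so the midpoint check holds for any pair of points that share a category.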

Scripts (Artificial Intelligence)

Script theory is a psychological theory which posits that human behaviour largely falls into patterns called "scripts" because they function analogously to the way a written script does, by providing a program for action. Silvan Tomkins created script theory as a further development of his affect theory, which regards human beings' emotional responses to stimuli as falling into categories called "affects". He noticed that the purely biological response of affect may be followed by awareness and by what we cognitively do in terms of acting on that affect, so that more was needed to produce a complete explanation of what he called "human being theory". In script theory, the basic unit of analysis is called a "scene", defined as a sequence of events linked by the affects triggered during the experience of those events. Tomkins recognized that our affective experiences fall into patterns that we may group together according to criteria such as the types of persons and places involved ...

Natural Language Parsing

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term ''parsing'' comes from Latin ''pars'' (''orationis''), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing algori ...