
Computer Network Programming

Computer network programming involves writing computer programs that enable processes to communicate with each other across a computer network.

Connection-oriented and connectionless communications: Most communications can be divided into connection-oriented and connectionless. Whether a communication is connection-oriented or connectionless is defined by the communication protocol, not by the application programming interface (API). Examples of connection-oriented protocols include TCP and SPX, and examples of connectionless protocols include UDP, "raw IP", and IPX.

Clients and servers: For connection-oriented communications, the communicating parties usually have different roles. One party, usually referred to as the "server", waits for incoming connections. The other party, usually referred to as the "client", initiates the connection. For connectionless communications, one party ("server") is usually waiting for an incoming packet, and another party ("cli ...
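The difference between the two styles, and between the client and server roles, can be sketched with Python's standard `socket` module. This is a minimal illustration, not a production pattern: the function names `tcp_roundtrip` and `udp_roundtrip` are made up for this example, both ends run in one process on the loopback address, and a single `recv` call is assumed to be enough for a short message.

```python
import socket
import threading


def _tcp_echo_once(server_sock: socket.socket) -> None:
    """Server role: accept one incoming connection and echo one message."""
    conn, _addr = server_sock.accept()
    with conn:
        conn.sendall(conn.recv(1024))


def tcp_roundtrip(message: bytes) -> bytes:
    """Connection-oriented exchange (TCP) over the loopback interface."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.settimeout(5)
        server.bind(("127.0.0.1", 0))      # port 0: let the OS pick a free port
        server.listen(1)                   # server waits for connections
        port = server.getsockname()[1]
        worker = threading.Thread(target=_tcp_echo_once, args=(server,))
        worker.start()
        # Client role: initiate the connection, then exchange data over it.
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            reply = client.recv(1024)
        worker.join()
    return reply


def udp_roundtrip(message: bytes) -> bytes:
    """Connectionless exchange (UDP): no connection setup, just datagrams."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as server, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        server.settimeout(5)
        client.settimeout(5)
        server.bind(("127.0.0.1", 0))      # server waits for an incoming packet
        client.sendto(message, server.getsockname())
        data, client_addr = server.recvfrom(1024)
        server.sendto(data, client_addr)   # reply goes to the sender's address
        reply, _ = client.recvfrom(1024)
    return reply
```

Note that the same socket API serves both cases; only the socket type (`SOCK_STREAM` versus `SOCK_DGRAM`) selects the protocol's behavior.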

Computer Programs

A computer program is a sequence or set of instructions in a programming language for a computer to execute. Computer programs are one component of software, which also includes documentation and other intangible components. A computer program in its human-readable form is called source code. Source code needs another computer program to execute because computers can only execute their native machine instructions. Therefore, source code may be translated to machine instructions using the language's compiler. (Assembly language programs are translated using an assembler.) The resulting file is called an executable. Alternatively, source code may execute within the language's interpreter. If the executable is requested for execution, then the operating system loads it into memory and starts a process. The central processing unit will soon switch to this process so it can fetch, decode, and then execute each machine instruction. If the source code is requested for execution, the ...

Internet Protocol

The Internet Protocol (IP) is the network layer communications protocol in the Internet protocol suite for relaying datagrams across network boundaries. Its routing function enables internetworking, and essentially establishes the Internet. IP has the task of delivering packets from the source host to the destination host solely based on the IP addresses in the packet headers. For this purpose, IP defines packet structures that encapsulate the data to be delivered. It also defines addressing methods that are used to label the datagram with source and destination information. IP was the connectionless datagram service in the original Transmission Control Program introduced by Vint Cerf and Bob Kahn in 1974, which was complemented by a connection-oriented service that became the basis for the Transmission Control Protocol (TCP). The Internet protocol suite is therefore often referred to as ''TCP/IP''. The first major version of IP, Internet Protocol Version 4 (IPv4), is the do ...
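To make the idea of a packet header carrying source and destination addresses concrete, the following sketch packs and unpacks the fixed 20-byte IPv4 header with Python's `struct` module. The helper names `build_ipv4_header` and `parse_ipv4_header` are hypothetical, the example addresses come from documentation ranges, and the checksum field is deliberately left at zero, so this is an illustration of the layout rather than a header that a real network stack would accept.

```python
import socket
import struct

# Fixed part of an IPv4 header in network byte order: version/IHL, type of
# service, total length, identification, flags/fragment offset, TTL,
# protocol, header checksum, source address, destination address.
IPV4_HEADER = struct.Struct("!BBHHHBBH4s4s")


def build_ipv4_header(src: str, dst: str, payload_len: int,
                      protocol: int = 17, ttl: int = 64) -> bytes:
    """Pack a minimal header (no options). Checksum is left as 0 here."""
    version_ihl = (4 << 4) | 5            # version 4, header = 5 x 32-bit words
    total_length = IPV4_HEADER.size + payload_len
    return IPV4_HEADER.pack(
        version_ihl, 0, total_length, 0, 0, ttl, protocol, 0,
        socket.inet_aton(src), socket.inet_aton(dst),
    )


def parse_ipv4_header(header: bytes) -> dict:
    """Unpack the fixed 20 bytes and return the fields used for delivery."""
    (version_ihl, _tos, total_length, _ident, _frag,
     ttl, protocol, _checksum, src, dst) = IPV4_HEADER.unpack(header[:20])
    return {
        "version": version_ihl >> 4,
        "header_bytes": (version_ihl & 0x0F) * 4,
        "total_length": total_length,
        "ttl": ttl,
        "protocol": protocol,              # 17 is UDP, 6 is TCP
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }
```

For example, `parse_ipv4_header(build_ipv4_header("192.0.2.1", "198.51.100.7", 8))` recovers both addresses and a total length of 28 bytes (20 header bytes plus 8 payload bytes).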

Computer Programming

Computer programming is the process of performing a particular computation (or more generally, accomplishing a specific computing result), usually by designing and building an executable computer program. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms (usually in a chosen programming language, commonly referred to as coding). The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task (which can be as complex as an operating system) on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algori ...

UNIX Network Programming

Unix Network Programming is a book written by W. Richard Stevens. It was published in 1990 by Prentice Hall and covers many topics regarding UNIX networking and computer network programming. The book focuses on the design and development of network software under UNIX. It describes how and why a given solution works and includes 15,000 lines of C code. The book's summary describes it as "for programmers seeking an in depth tutorial on sockets, transport level interface (TLI), interprocess communications (IPC) facilities under System V and BSD UNIX." The book has been translated into several languages, including Chinese, Italian, German, and Japanese. Later editions have expanded into two volumes, Volume 1: The Sockets Networking API and Volume 2: Interprocess Communications. In the movie ''Wayne's World 2'' ...

DevOps

DevOps is a set of practices that combines software development (''Dev'') and IT operations (''Ops''). It aims to shorten the systems development life cycle and provide continuous delivery with high software quality. DevOps is complementary to agile software development; several DevOps aspects came from the ''agile'' way of working.

Definition: Other than it being a cross-functional combination (and a portmanteau) of the terms and concepts for "development" and "operations", academics and practitioners have not developed a universal definition for the term "DevOps". Most often, DevOps is characterized by key principles: shared ownership, workflow automation, and rapid feedback. From an academic perspective, Len Bass, Ingo Weber, and Liming Zhu, three computer science researchers from the CSIRO and the Software Engineering Institute, suggested defining DevOps as "a set of practices intended to reduce the time between committing a change to a system and the change being placed i ...

Infrastructure As Code

Infrastructure as code (IaC) is the process of managing and provisioning computer data centers through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools. The IT infrastructure managed by this process comprises both physical equipment, such as bare-metal servers, and virtual machines, along with associated configuration resources. The definitions may be kept in a version control system. The code in the definition files may use either scripts or declarative definitions, rather than being maintained through manual processes, but IaC more often employs declarative approaches.

Overview: IaC grew as a response to the difficulty posed by utility computing and second-generation web frameworks. In 2006, the launch of Amazon Web Services' Elastic Compute Cloud and the 1.0 version of Ruby on Rails just months before created widespread scaling problems in the enterprise that were previously experienced only at large, multi-n ...

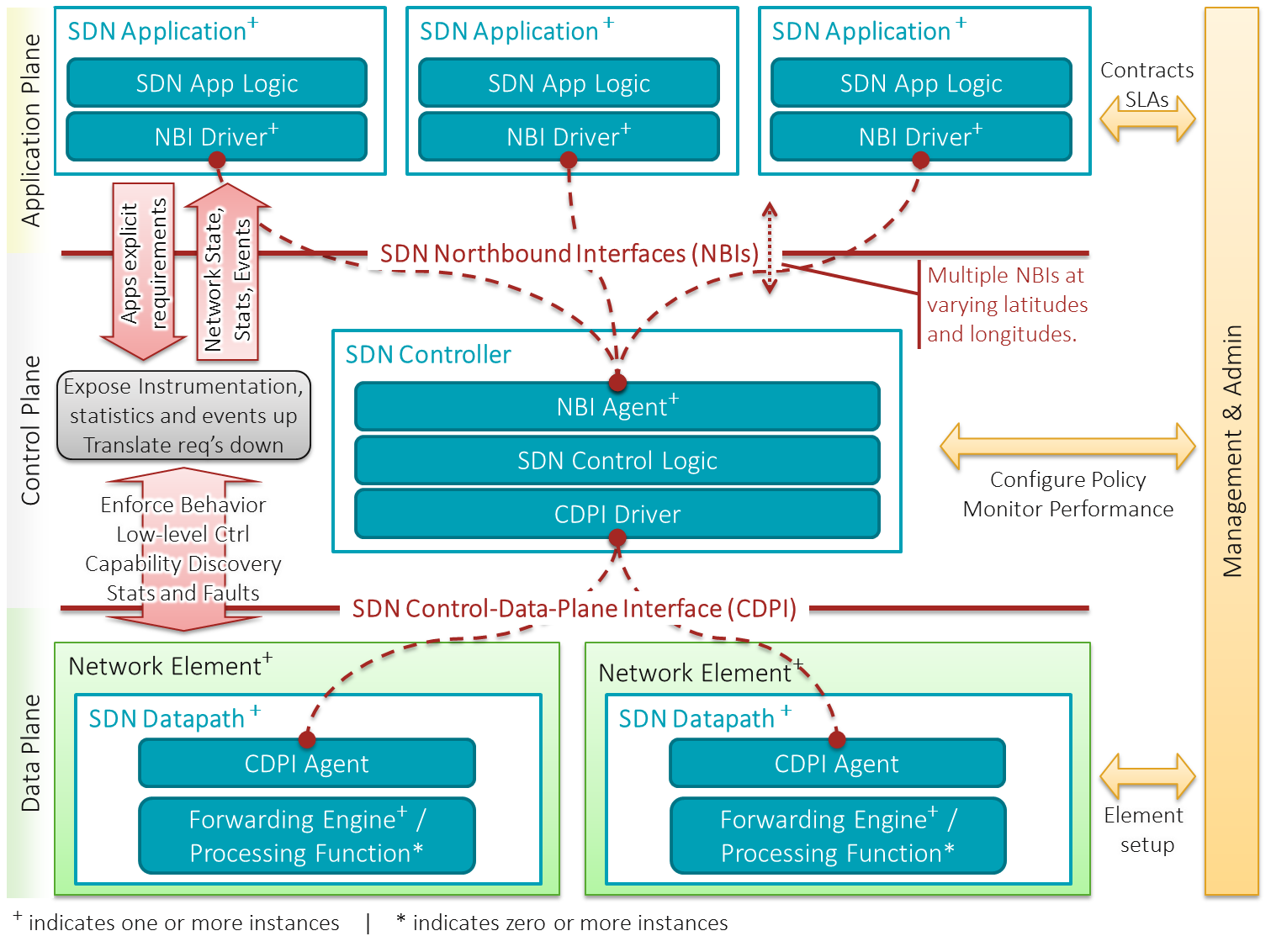

Software-defined Networking

Software-defined networking (SDN) technology is an approach to network management that enables dynamic, programmatically efficient network configuration in order to improve network performance and monitoring, making it more like cloud computing than traditional network management. SDN is meant to address the static architecture of traditional networks. SDN attempts to centralize network intelligence in one network component by disassociating the forwarding process of network packets (data plane) from the routing process (control plane). The control plane consists of one or more controllers, which are considered the brain of the SDN network where the whole intelligence is incorporated. However, centralization has its own drawbacks when it comes to security, scalability and elasticity and this is the main issue of SDN. SDN was commonly associated with the OpenFlow protocol (for remote communication with network plane elements for the purpose of determining the path of network packe ...
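The split between a data plane that only forwards and a control plane that decides routes can be modeled in a few lines. The sketch below is a toy model under loud assumptions: the `Switch` and `Controller` classes, their methods, and the flow-table layout are invented for illustration and do not correspond to OpenFlow messages or to any real controller's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Switch:
    """Data plane: looks up a destination in its flow table, nothing more."""
    name: str
    flow_table: Dict[str, int] = field(default_factory=dict)  # dst -> out port

    def forward(self, dst: str) -> Optional[int]:
        # None models a table miss: the switch has no rule for this packet.
        return self.flow_table.get(dst)


class Controller:
    """Control plane: holds the routing decisions and pushes them to switches."""

    def __init__(self, switches: List[Switch]) -> None:
        self.switches = {s.name: s for s in switches}

    def install_route(self, dst: str, hops: List[Tuple[str, int]]) -> None:
        # hops is a list of (switch name, output port) along the chosen path.
        for switch_name, out_port in hops:
            self.switches[switch_name].flow_table[dst] = out_port


# Usage: the controller programs both switches; the switches then forward
# without making any routing decision of their own.
s1, s2 = Switch("s1"), Switch("s2")
controller = Controller([s1, s2])
controller.install_route("10.0.0.2", [("s1", 2), ("s2", 1)])
```

Because every rule originates in one place, the controller in this model is also a single point of failure, which mirrors the centralization drawback described above.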

HTTP

The Hypertext Transfer Protocol (HTTP) is an application layer protocol in the Internet protocol suite model for distributed, collaborative, hypermedia information systems. HTTP is the foundation of data communication for the World Wide Web, where hypertext documents include hyperlinks to other resources that the user can easily access, for example by a mouse click or by tapping the screen in a web browser. Development of HTTP was initiated by Tim Berners-Lee at CERN in 1989 and summarized in a simple document describing the behavior of a client and a server using the first HTTP protocol version, which was named 0.9. That first version of the HTTP protocol soon evolved into a more elaborate version that was the first draft toward a much later version 1.0. Development of early HTTP Requests for Comments (RFCs) started a few years later and was a coordinated effort by the Internet Engineering Task Force (IETF) and the World Wide Web Consortium (W3C), with work later moving to ...
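The client and server behavior that HTTP defines can be observed with Python's standard library alone. In this minimal sketch, the handler class `HelloHandler` and the function `fetch_once` are names chosen for the example; the server listens on the loopback address, answers exactly one request, and then shuts down.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET with a short plain-text body."""

    def do_GET(self):
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; the default logs each request to stderr


def fetch_once():
    """Start a one-shot server, send it one GET request, return the result."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)   # port 0: any free port
    server.timeout = 5                    # handle_request gives up after 5 s
    worker = threading.Thread(target=server.handle_request)
    worker.start()
    try:
        # Client side: open a connection, send the request, read the response.
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port,
                                          timeout=5)
        conn.request("GET", "/")
        response = conn.getresponse()
        result = (response.status, response.read())
        conn.close()
    finally:
        worker.join()
        server.server_close()
    return result
```

Calling `fetch_once()` returns the status code together with the response body, which is the whole request and response cycle in miniature.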

OpenSSL

OpenSSL is a software library for applications that secure communications over computer networks against eavesdropping or need to identify the party at the other end. It is widely used by Internet servers, including the majority of HTTPS websites. OpenSSL contains an open-source implementation of the SSL and TLS protocols. The core library, written in the C programming language, implements basic cryptographic functions and provides various utility functions. Wrappers allowing the use of the OpenSSL library in a variety of computer languages are available. The OpenSSL Software Foundation (OSF) represents the OpenSSL project in most legal capacities, including contributor license agreements, managing donations, and so on. OpenSSL Software Services (OSS) also represents the OpenSSL project for support contracts. OpenSSL is available for most Unix-like operating systems (including Linux, macOS, and BSD), Microsoft Windows and OpenVMS.

Project history: The OpenSSL ...

Transport Layer Security

Transport Layer Security (TLS) is a cryptographic protocol designed to provide communications security over a computer network. The protocol is widely used in applications such as email, instant messaging, and voice over IP, but its use in securing HTTPS remains the most publicly visible. The TLS protocol aims primarily to provide security, including privacy (confidentiality), integrity, and authenticity through the use of cryptography, such as the use of certificates, between two or more communicating computer applications. It runs in the presentation layer and is itself composed of two layers: the TLS record and the TLS handshake protocols. The closely related Datagram Transport Layer Security (DTLS) is a communications protocol providing security to datagram-based applications. In technical writing you will often see references to (D)TLS when a statement applies to both versions. TLS is a proposed Internet Engineering Task Force (IETF) standard, first defined in 1999, and the c ...
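On the client side, the authenticity and confidentiality goals described here largely come down to configuration. The sketch below uses Python's standard `ssl` module; the helper names `make_client_context` and `open_tls` are hypothetical, and requiring TLS 1.2 as the minimum version is an assumption made for the example rather than a recommendation taken from the text.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client context that verifies the server's certificate."""
    # create_default_context() loads the system's trusted CA certificates
    # and enables both certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2   # assumed policy choice
    return context


def open_tls(host: str, port: int = 443) -> ssl.SSLSocket:
    """Connect over TCP, then run the TLS handshake on top of that socket."""
    context = make_client_context()
    raw = socket.create_connection((host, port), timeout=5)
    try:
        # server_hostname is checked against the certificate the server sends.
        return context.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()
        raise
```

The handshake happens inside `wrap_socket`; after it returns, ordinary `sendall` and `recv` calls on the returned socket are encrypted and integrity-protected by the record layer.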

Berkeley Sockets

Berkeley sockets is an application programming interface (API) for Internet sockets and Unix domain sockets, used for inter-process communication (IPC). It is commonly implemented as a library of linkable modules. It originated with the 4.2BSD Unix operating system, which was released in 1983. A socket is an abstract representation (handle) for the local endpoint of a network communication path. The Berkeley sockets API represents it as a file descriptor (file handle) in the Unix philosophy that provides a common interface for input and output to streams of data. Berkeley sockets evolved with little modification from a ''de facto'' standard into a component of the POSIX specification. The term POSIX sockets is essentially synonymous with ''Berkeley sockets'', but they are also known as BSD sockets, acknowledging the first implementation in the Berkeley Software Distribution.

History and implementations: Berkeley sockets originated with the 4.2BSD Unix operating system, released ...
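The point that a socket is represented as a file descriptor can be checked directly. This minimal sketch assumes a Unix-like system, where the generic `os.write` and `os.read` calls accept a socket's descriptor; the function name `fd_roundtrip` is made up for the example.

```python
import os
import socket


def fd_roundtrip(message: bytes) -> bytes:
    """Send bytes through a socket pair using only file-descriptor I/O."""
    # socketpair() returns two already-connected sockets in one process.
    left, right = socket.socketpair()
    with left, right:
        # fileno() exposes the descriptor the operating system assigned.
        os.write(left.fileno(), message)     # same call used for files and pipes
        return os.read(right.fileno(), 1024)
```

The socket-specific calls (`send`, `recv`, `bind`, and so on) add network semantics, but underneath they operate on the same kind of handle that the file I/O calls use.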