
Compositional Pattern-producing Network

Compositional pattern-producing networks (CPPNs) are a variation of artificial neural networks (ANNs) whose architecture is evolved under the guidance of genetic algorithms (Stanley, Kenneth O. "Compositional pattern producing networks: A novel abstraction of development." Genetic Programming and Evolvable Machines 8.2 (2007): 131-162). While ANNs often contain only sigmoid functions and sometimes Gaussian functions, CPPNs can include both types of functions and many others. The choice of functions for the canonical set can be biased toward specific types of patterns and regularities. For example, periodic functions such as sine produce segmented patterns with repetitions, while symmetric functions such as the Gaussian produce symmetric patterns. Linear functions can be employed to produce linear or fractal-like patterns. Thus, the architect of a CPPN-based genetic art system can bias the types of patterns it generates by deciding which canonical functions to include. Furthermore, ...
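To make the idea of composing canonical functions concrete, here is a minimal sketch of a CPPN queried at every pixel coordinate of an image. The topology and weights are hypothetical and hand-wired (in a real system they would be evolved); the point is only to show how a sine node contributes repetition along x while Gaussian nodes make the output symmetric about y = 0.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gaussian(z):
    return math.exp(-z * z)


def cppn(x, y):
    """Hypothetical hand-wired CPPN mapping a coordinate (x, y) to an intensity in (0, 1)."""
    d = math.sqrt(x * x + y * y)   # distance from the image centre
    h1 = math.sin(3.0 * x)         # periodic node: repeated bands along x
    h2 = gaussian(2.0 * y)         # Gaussian node: symmetric about y = 0
    h3 = gaussian(1.5 * d)         # Gaussian of the radius: radial symmetry
    return sigmoid(2.0 * h1 + 1.5 * h2 + 2.0 * h3 - 1.0)


def render(width, height):
    """Query the CPPN once per pixel, with coordinates scaled to [-1, 1] (width, height >= 2)."""
    image = []
    for row in range(height):
        y = -1.0 + 2.0 * row / (height - 1)
        image.append([cppn(-1.0 + 2.0 * col / (width - 1), y) for col in range(width)])
    return image


if __name__ == "__main__":
    shades = " .:-=+*#%@"
    for row in render(40, 20):
        print("".join(shades[min(int(v * len(shades)), len(shades) - 1)] for v in row))
```

Because the network is a function of coordinates rather than a stored bitmap, the same genome can be rendered at any resolution; swapping `math.sin` for a different canonical function changes which regularities the pattern can express.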

Artificial Neural Network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals, processes them, and can signal the neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically ...
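The neuron described above can be sketched in a few lines. This is an illustrative sketch, not a reference implementation: the `bias` term and the choice of `tanh` as the default non-linearity are common conventions assumed here, and "crosses the threshold" is interpreted as strictly exceeding it.

```python
import math


def neuron(inputs, weights, bias, activation=math.tanh):
    """Non-linear function of the weighted sum of the inputs (bias is an assumed convention)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(total)


def threshold_neuron(inputs, weights, threshold):
    """Sends a signal (1.0) only if the aggregate signal strictly exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 if total > threshold else 0.0


def layer(inputs, weight_rows, biases, activation=math.tanh):
    """Several neurons reading the same inputs; each edge carries its own weight."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]
```

For example, `threshold_neuron([a, b], [0.6, 0.6], 1.0)` fires only when both binary inputs are 1, i.e. it behaves as an AND gate; learning would consist of adjusting the weights and threshold.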

Sigmoid Function

A sigmoid function is a mathematical function having a characteristic "S"-shaped curve, or sigmoid curve. A common example of a sigmoid function is the logistic function shown in the first figure and defined by the formula $S(x) = \frac{1}{1 + e^{-x}} = \frac{e^{x}}{e^{x} + 1} = 1 - S(-x)$. Other standard sigmoid functions are given in the Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as an alias for the logistic function. Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of x) and the ogee curve (used in the spillway of some dams). Sigmoid functions have a domain of all real numbers, with a return (response) value that is commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (y axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions including the logistic and hyperbolic tangent ...
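A short sketch of the logistic function S(x) = 1 / (1 + e^(-x)), written so that `math.exp` never overflows for large negative inputs, together with the standard identity tanh(x) = 2 S(2x) - 1, which shows that the hyperbolic tangent is a logistic curve rescaled to the range (-1, 1). The function names are illustrative.

```python
import math


def logistic(x):
    """Logistic sigmoid S(x) = 1 / (1 + e^-x), evaluated without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)       # x < 0, so z lies in (0, 1) and cannot overflow
    return z / (1.0 + z)  # algebraically equal to e^x / (e^x + 1)


def tanh_via_logistic(x):
    """tanh is a rescaled logistic with range (-1, 1): tanh(x) = 2 S(2x) - 1."""
    return 2.0 * logistic(2.0 * x) - 1.0
```

Branching on the sign of x uses the two equivalent forms of S(x): the 1/(1 + e^(-x)) form is safe for x >= 0 and the e^x/(e^x + 1) form is safe for x < 0.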

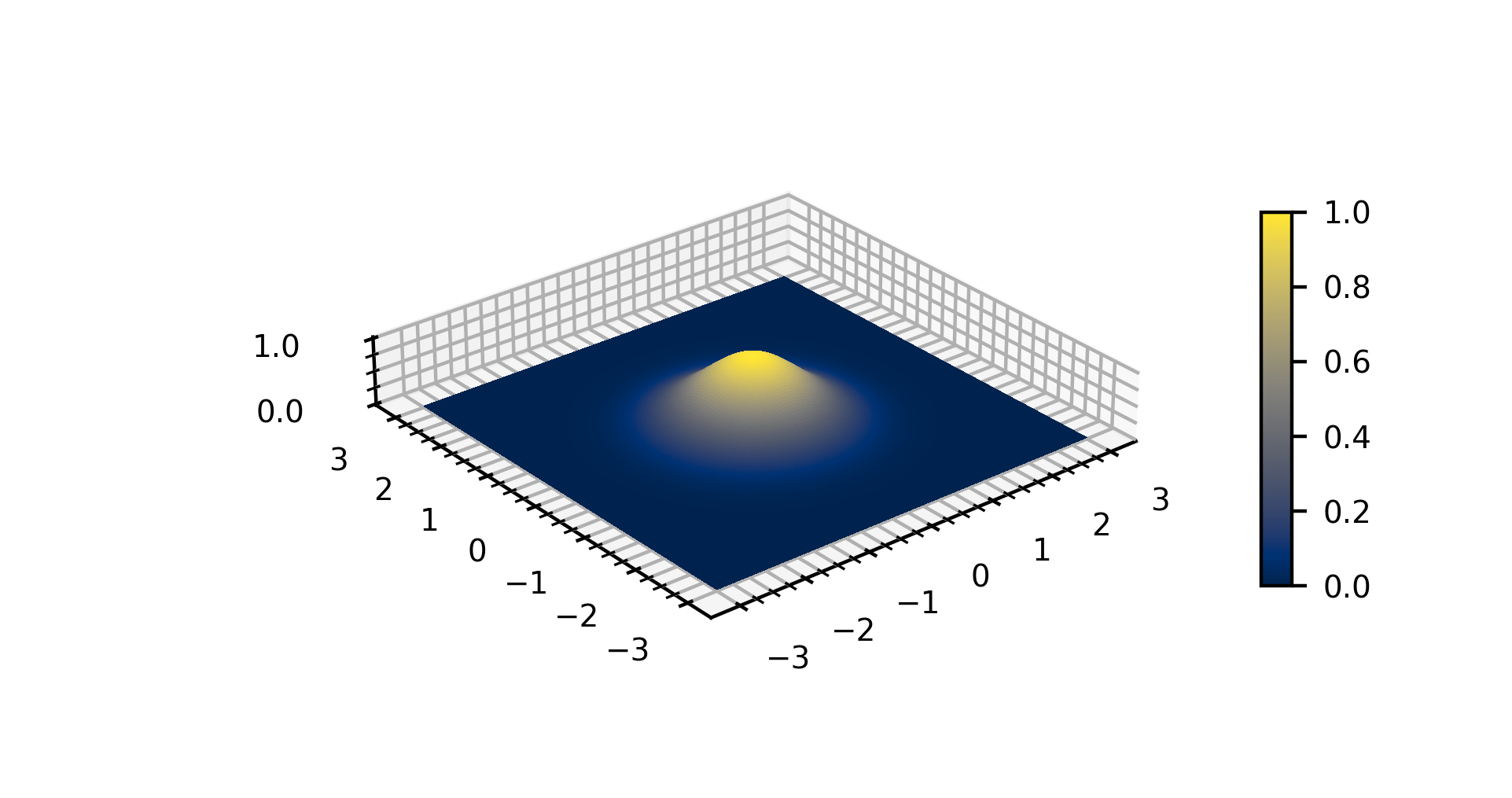

Gaussian Function

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form $f(x) = \exp(-x^2)$ and with parametric extension $f(x) = a \exp\left(-\frac{(x-b)^2}{2c^2}\right)$ for arbitrary real constants $a$, $b$ and non-zero $c$. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter $a$ is the height of the curve's peak, $b$ is the position of the center of the peak, and $c$ (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell". Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value $\mu = b$ and variance $\sigma^2 = c^2$. In this case, the Gaussian is of the form $g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{1}{2}\frac{(x-\mu)^2}{\sigma^2}\right)$. Gaussian functions are widely used in statistics to describe normal distributions, in signal processing to define Gaussian filters, in image processing where two-dimensional ...
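The sketch below evaluates the parametric Gaussian f(x) = a·exp(-(x - b)² / (2c²)) (peak height a, centre b, non-zero width c) and builds the normal density from it by choosing a = 1/(σ√(2π)), b = μ, c = σ. Parameter names follow that formula; the helper names are illustrative.

```python
import math


def gaussian(x, a=1.0, b=0.0, c=1.0):
    """f(x) = a * exp(-(x - b)^2 / (2 c^2)): peak height a, centre b, width c (c != 0)."""
    if c == 0:
        raise ValueError("c must be non-zero")
    return a * math.exp(-((x - b) ** 2) / (2.0 * c * c))


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Normal density: the Gaussian with a = 1 / (sigma * sqrt(2 pi)), b = mu, c = sigma."""
    return gaussian(x, a=1.0 / (sigma * math.sqrt(2.0 * math.pi)), b=mu, c=sigma)
```

The base form exp(-x²) is recovered with a = 1, b = 0 and c = 1/√2, and the normalising constant in `normal_pdf` is exactly what makes the area under the curve equal to 1.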

Sine

In mathematics, sine and cosine are trigonometric functions of an angle. The sine and cosine of an acute angle are defined in the context of a right triangle: for the specified angle, its sine is the ratio of the length of the side opposite that angle to the length of the longest side of the triangle (the hypotenuse), and the cosine is the ratio of the length of the adjacent leg to that of the hypotenuse. For an angle $\theta$, the sine and cosine functions are denoted simply as $\sin\theta$ and $\cos\theta$. More generally, the definitions of sine and cosine can be extended to any real value in terms of the lengths of certain line segments in a unit circle. More modern definitions express the sine and cosine as infinite series, or as the solutions of certain differential equations, allowing their extension to arbitrary positive and negative values and even to complex numbers. The sine and cosine functions are commonly used to model periodic phenomena such as sound and light ...
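As an illustration of the series definition, this sketch sums the first terms of the Maclaurin series sin x = Σ (-1)^n x^(2n+1) / (2n+1)!. The argument is first reduced into [-π, π] using the 2π periodicity; the series converges for every x, but without the reduction large arguments lose accuracy to cancellation between huge alternating terms. The number of terms (20) is an assumed default, not a derived bound.

```python
import math


def sin_series(x, terms=20):
    """Approximate sin(x) by summing the first `terms` terms of its Maclaurin series."""
    # Periodicity: reduce x into [-pi, pi] before summing.
    x = math.remainder(x, 2.0 * math.pi)
    term = x      # n = 0 term: x^1 / 1!
    total = 0.0
    for n in range(terms):
        total += term
        # Ratio of consecutive terms: -x^2 / ((2n + 2)(2n + 3))
        term *= -x * x / ((2 * n + 2) * (2 * n + 3))
    return total
```

Computing each term from the previous one avoids evaluating large powers and factorials directly.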

Fractal

In mathematics, a fractal is a geometric shape containing detailed structure at arbitrarily small scales, usually having a fractal dimension strictly exceeding the topological dimension. Many fractals appear similar at various scales, as illustrated in successive magnifications of the Mandelbrot set. This exhibition of similar patterns at increasingly smaller scales is called self-similarity, also known as expanding symmetry or unfolding symmetry; if this replication is exactly the same at every scale, as in the Menger sponge, the shape is called affine self-similar. Fractal geometry lies within the mathematical branch of measure theory. One way that fractals differ from finite geometric figures is in how they scale. Doubling the edge lengths of a filled polygon multiplies its area by four, which is two (the ratio of the new to the old side length) raised to the power of two (the conventional dimension of the filled polygon). Likewise, if the radius of a filled sphere ...
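The scaling argument above can be turned into a small calculation. Assuming a shape is made of N copies of itself, each scaled down by a factor s, scaling the shape up by s multiplies its measure by s^D, so N = s^D and D = log N / log s (the similarity dimension). The filled square recovers D = 2 from the doubling example; the Menger sponge, built from 20 copies at one third the size, gets a non-integer dimension of about 2.727.

```python
import math


def similarity_dimension(copies, scale_factor):
    """Dimension D of a shape made of `copies` pieces, each 1/scale_factor the size.

    Scaling up by scale_factor multiplies the measure by scale_factor ** D,
    so copies = scale_factor ** D and D = log(copies) / log(scale_factor).
    """
    return math.log(copies) / math.log(scale_factor)


shapes = {
    "filled square (edge doubled)": (4, 2),
    "filled cube (edge doubled)": (8, 2),
    "Menger sponge": (20, 3),
}
for name, (n, s) in shapes.items():
    print(f"{name}: D = {similarity_dimension(n, s):.4f}")
```

This formula applies only to exactly self-similar shapes; fractals such as the Mandelbrot set require other definitions of dimension.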

Neuroevolution

Neuroevolution, or neuro-evolution, is a form of artificial intelligence that uses evolutionary algorithms to generate artificial neural networks (ANNs), parameters, and rules. It is most commonly applied in artificial life, general game playing and evolutionary robotics. The main benefit is that neuroevolution can be applied more widely than supervised learning algorithms, which require a syllabus of correct input-output pairs. In contrast, neuroevolution requires only a measure of a network's performance at a task. For example, the outcome of a game (i.e., whether one player won or lost) can be easily measured without providing labeled examples of desired strategies. Neuroevolution is commonly used as part of the reinforcement learning paradigm, and it can be contrasted with conventional deep learning techniques that use gradient descent on a neural network with a fixed topology.

Features

Many neuroevolution algorithms have been defined. One common distinction is between algorithms ...
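A minimal sketch of the key property described above: the evolutionary loop only ever calls `fitness(weights)` and receives a single number, with no gradients and no per-example targets. This is fixed-topology weight evolution (not NEAT), and every name and hyperparameter (population 50, top-20% truncation selection, 5 elites, mutation σ = 0.5) is an assumption chosen for illustration. XOR serves as a stand-in task; a game score could be plugged in instead.

```python
import math
import random


def _sigmoid(z):
    return 0.5 * (1.0 + math.tanh(0.5 * z))  # equals 1 / (1 + e^-z), cannot overflow


def forward(weights, x1, x2):
    """Fixed 2-2-1 network; `weights` is a flat list of 9 numbers."""
    h1 = math.tanh(weights[0] * x1 + weights[1] * x2 + weights[2])
    h2 = math.tanh(weights[3] * x1 + weights[4] * x2 + weights[5])
    return _sigmoid(weights[6] * h1 + weights[7] * h2 + weights[8])


def xor_fitness(weights):
    """Scalar performance score (higher is better); the evolver sees nothing else."""
    cases = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    error = sum((forward(weights, a, b) - target) ** 2 for (a, b), target in cases)
    return 4.0 - error


def evolve(fitness, n_weights=9, pop_size=50, generations=200, elite=5, sigma=0.5, seed=0):
    rng = random.Random(seed)
    population = [[rng.gauss(0.0, 1.0) for _ in range(n_weights)] for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        parents = ranked[: pop_size // 5]               # truncation selection: top 20%
        children = [list(w) for w in ranked[:elite]]    # elitism: best survive unchanged
        while len(children) < pop_size:
            parent = rng.choice(parents)
            children.append([w + rng.gauss(0.0, sigma) for w in parent])  # Gaussian mutation
        population = children
    best = max(population, key=fitness)
    return best, history
```

Because the elites are copied unchanged, the best fitness per generation never decreases; whether a given run fully solves XOR depends on the seed and budget.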

Neuroevolution Of Augmenting Topologies

NeuroEvolution of Augmenting Topologies (NEAT) is a genetic algorithm (GA) for generating evolving artificial neural networks (a neuroevolution technique), developed by Kenneth Stanley and Risto Miikkulainen in 2002 while at The University of Texas at Austin. It alters both the weights and the structure of networks, attempting to balance the fitness of evolved solutions against their diversity. It is based on three key techniques: tracking genes with historical markers to allow crossover among different topologies, applying speciation (the evolution of species) to preserve innovations, and developing topologies incrementally from simple initial structures ("complexifying").

Performance

On simple control tasks, the NEAT algorithm often arrives at effective networks more quickly than other contemporary neuroevolutionary techniques and reinforcement learning methods. Kenneth O. Stanley and Risto Miikkulainen (2002). "Evolving Neural Networks Through Augmenting ...
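The following sketch shows how historical markers (innovation numbers) let NEAT line up genomes with different topologies. It covers only connection genes and simplifies several details: node genes and the rule for re-enabling disabled genes are omitted, crossover handles only the case where one parent is fitter, and the compatibility distance δ = c1·E/N + c2·D/N + c3·W̄ always divides by the larger genome size. The coefficient defaults are assumptions for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionGene:
    innovation: int  # historical marker assigned when this connection first appeared
    in_node: int
    out_node: int
    weight: float
    enabled: bool = True


def crossover(fitter, other, rng):
    """Align genes by innovation number. Matching genes are inherited at random;
    disjoint and excess genes come from the fitter parent."""
    other_by_innov = {g.innovation: g for g in other}
    child = []
    for gene in fitter:
        match = other_by_innov.get(gene.innovation)
        child.append(rng.choice([gene, match]) if match is not None else gene)
    return child


def compatibility(g1, g2, c1=1.0, c2=1.0, c3=0.4):
    """delta = c1*E/N + c2*D/N + c3*W_bar, used to group genomes into species."""
    a = {g.innovation: g for g in g1}
    b = {g.innovation: g for g in g2}
    if not a and not b:
        return 0.0
    cutoff = min(max(a, default=0), max(b, default=0))
    non_matching = set(a) ^ set(b)
    excess = sum(1 for i in non_matching if i > cutoff)  # beyond the other genome's range
    disjoint = len(non_matching) - excess                # gaps inside the shared range
    matching = set(a) & set(b)
    w_bar = sum(abs(a[i].weight - b[i].weight) for i in matching) / len(matching) if matching else 0.0
    n = max(len(a), len(b))
    return c1 * excess / n + c2 * disjoint / n + c3 * w_bar


if __name__ == "__main__":
    p1 = [ConnectionGene(1, 0, 2, 0.5), ConnectionGene(2, 1, 2, 1.0), ConnectionGene(3, 0, 3, 0.2)]
    p2 = [ConnectionGene(1, 0, 2, 0.5), ConnectionGene(4, 1, 3, 0.9)]
    print(crossover(p1, p2, random.Random(0)))
    print(compatibility(p1, p2))
```

Because innovation numbers are inherited rather than recomputed, two genomes that independently grew different structures can still be compared gene by gene without any topological analysis.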

HyperNEAT

Hypercube-based NEAT, or HyperNEAT, is a generative encoding that evolves artificial neural networks (ANNs) with the principles of the widely used NeuroEvolution of Augmenting Topologies (NEAT) algorithm developed by Kenneth Stanley. It is a novel technique for evolving large-scale neural networks using the geometric regularities of the task domain. It uses Compositional Pattern Producing Networks (CPPNs), which are used to generate the images for Picbreeder.org and shapes for EndlessForms.com. HyperNEAT has recently been extended to also evolve plastic ANNs and to evolve the location of every neuron in the network.

Applications to date

* Multi-agent learning
* Checkers board evaluation
* Controlling legged robots (video)
* Comparing Ge ...
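To illustrate how a CPPN can encode a whole network, here is a minimal sketch of the substrate idea. Neurons are placed at 2D coordinates, and the CPPN is queried with the coordinates of both endpoints (x1, y1, x2, y2) to obtain each connection weight. The CPPN below is a single hand-written, hypothetical Gaussian of the horizontal offset (not an evolved one), and dropping connections whose output magnitude falls below a threshold is an assumed convention.

```python
import math


def cppn(x1, y1, x2, y2):
    """Hypothetical CPPN: weight depends only on the horizontal offset between neurons.

    Because it ignores absolute position, the same local connectivity pattern
    is repeated across the substrate, a geometric regularity in the network.
    """
    dx = x1 - x2
    return math.exp(-(2.0 * dx) ** 2)  # Gaussian node: strongest for vertically aligned pairs


def build_substrate(inputs, outputs, threshold=0.2):
    """Query the CPPN for every input->output pair of neuron coordinates."""
    connections = {}
    for (x1, y1) in inputs:
        for (x2, y2) in outputs:
            w = cppn(x1, y1, x2, y2)
            if abs(w) > threshold:  # weak CPPN output is read as "no connection"
                connections[((x1, y1), (x2, y2))] = w
    return connections


if __name__ == "__main__":
    inputs = [(-1.0 + 0.5 * i, -1.0) for i in range(5)]   # input row at y = -1
    outputs = [(-1.0 + 0.5 * i, 1.0) for i in range(5)]   # output row at y = 1
    for (src, dst), w in sorted(build_substrate(inputs, outputs).items()):
        print(src, "->", dst, round(w, 3))
```

Adding more neurons to the substrate does not enlarge the genome: the same small CPPN is simply queried more times, which is what lets HyperNEAT scale to large networks.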

Evolutionary Art

Evolutionary art is a branch of generative art in which the artist does not construct the artwork directly, but instead lets a system do the construction. In evolutionary art, initially generated art is put through an iterated process of selection and modification to arrive at a final product, where the artist acts as the selective agent. Evolutionary art is to be distinguished from BioArt, which uses living organisms as the material medium instead of paint, stone, metal, etc.

Overview

As in biological evolution through natural selection or animal husbandry, the members of a population undergoing artificial evolution modify their form or behavior over many reproductive generations in response to a selective regime. In interactive evolution, the selective regime may be applied explicitly by the viewer, who selects individuals that are aesthetically pleasing. Alternatively, a selection pressure can be generated implicitly, for example according to the length ...
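A minimal sketch of explicit interactive selection, assuming a simple scheme where each generation shows the artist several mutated variants of the current favourite and the artist's choice becomes the next favourite. The genome is an abstract list of numbers (for example, hypothetically, the weights of a CPPN that renders an image), and `choose` stands in for the human: it could be a GUI click or an implicit signal. All names and settings are illustrative.

```python
import random


def mutate(genome, rng, sigma=0.2):
    """Small random modification of every parameter."""
    return [g + rng.gauss(0.0, sigma) for g in genome]


def interactive_evolution(initial, choose, generations=10, offspring=6, seed=0):
    """Each generation, present `offspring` variants of the current favourite and
    let `choose` (the artist) return the index of the one to keep."""
    rng = random.Random(seed)
    favourite = initial
    for _ in range(generations):
        candidates = [mutate(favourite, rng) for _ in range(offspring)]
        favourite = candidates[choose(candidates)]
    return favourite
```

For testing without a human, `choose` can be replaced by a scripted preference such as "pick the candidate with the largest sum"; in a real system each call would render the candidates and wait for the viewer's selection.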

Interactive Evolutionary Computation

Interactive evolutionary computation (IEC), or aesthetic selection, is a general term for methods of evolutionary computation that use human evaluation. Human evaluation is usually necessary when the form of the fitness function is not known (for example, visual appeal or attractiveness, as in Dawkins, 1986) or when the result of optimization should fit a particular user preference (for example, the taste of coffee or the color set of a user interface).

IEC design issues

The number of evaluations that IEC can receive from one human user is limited by user fatigue, which many researchers have reported as a major problem. In addition, human evaluations are slow and expensive compared to fitness function computation. Hence, one-user IEC methods should be designed to converge using a small number of evaluations, which necessarily implies very small populations. Researchers have proposed several methods to speed up convergence, such as interactive constrain evolutionary search (user intervention) or ...
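The fatigue constraint can be made explicit in code. This sketch wraps a human rating callback in a counter with a hard evaluation budget and runs a very small population (6 individuals) until the budget is exhausted, then returns the best individual seen. The class and function names, the rating-based interface, and all settings are assumptions for illustration, not a specific published IEC method.

```python
import random


class BudgetExhausted(Exception):
    """Raised when the user has given as many evaluations as they can."""


class HumanEvaluator:
    """Wraps a rating callback and counts calls against a fatigue budget."""

    def __init__(self, rate, budget):
        self.rate = rate
        self.budget = budget
        self.used = 0

    def __call__(self, candidate):
        if self.used >= self.budget:
            raise BudgetExhausted
        self.used += 1
        return self.rate(candidate)


def iec(evaluator, genome_length=4, pop_size=6, n_parents=2, sigma=0.1, seed=0):
    """Evolve a tiny population until the evaluation budget runs out."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_length)] for _ in range(pop_size)]
    best, best_score = None, float("-inf")
    try:
        while True:
            scored = []
            for genome in population:
                score = evaluator(genome)  # one human judgement per call
                scored.append((score, genome))
                if score > best_score:
                    best, best_score = genome, score
            scored.sort(key=lambda pair: pair[0], reverse=True)
            parents = [genome for _, genome in scored[:n_parents]]
            population = [[x + rng.gauss(0.0, sigma) for x in rng.choice(parents)]
                          for _ in range(pop_size)]
    except BudgetExhausted:
        pass  # user fatigue: stop and report the best individual seen so far
    return best, best_score
```

With a budget of 30 ratings and a population of 6, the run lasts exactly five generations, which shows why IEC designs favour small populations and fast convergence.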