|

Comparison Of Object Pascal And C

The computer programming languages C and Pascal have similar times of origin, influences, and purposes. Both were used to design (and compile) their own compilers early in their lifetimes. The original Pascal definition appeared in 1969 and a first compiler in 1970. The first version of C appeared in 1972. Both are descendants of the ALGOL language series. ALGOL introduced programming language support for structured programming, where programs are constructed of single entry and single exit constructs such as if, while, for and case. Pascal stems directly from ALGOL W, while it shared some new ideas with ALGOL 68. The C language is more indirectly related to ALGOL, originally through B, BCPL, and CPL, and later through ALGOL 68 (for example in case of struct and union) and also Pascal (for example in case of enumerations, const, typedef and booleans). Some Pascal dialects also incorporated traits from C. The languages documented here are the Pascal of Niklaus Wirth, as st ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Programming Language

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages. Definitions There are many considerations when defini ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Programming Language Syntax

In computer science, the syntax of a computer language is the rules that defines the combinations of symbols that are considered to be correctly structured statements or expressions in that language. This applies both to programming languages, where the document represents source code, and to markup languages, where the document represents data. The syntax of a language defines its surface form. Text-based computer languages are based on sequences of characters, while visual programming languages are based on the spatial layout and connections between symbols (which may be textual or graphical). Documents that are syntactically invalid are said to have a syntax error. When designing the syntax of a language, a designer might start by writing down examples of both legal and illegal strings, before trying to figure out the general rules from these examples. Syntax therefore refers to the ''form'' of the code, and is contrasted with semantics – the ''meaning''. In processing ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitwise Operation

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources. Bitwise operators In the explanations below, any indication of a bit's position is counted from the right (least signi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boolean Datatype

In computer science, the Boolean (sometimes shortened to Bool) is a data type that has one of two possible values (usually denoted ''true'' and ''false'') which is intended to represent the two truth values of logic and Boolean algebra. It is named after George Boole, who first defined an algebraic system of logic in the mid 19th century. The Boolean data type is primarily associated with conditional statements, which allow different actions by changing control flow depending on whether a programmer-specified Boolean ''condition'' evaluates to true or false. It is a special case of a more general ''logical data type—''logic does not always need to be Boolean (see probabilistic logic). Generalities In programming languages with a built-in Boolean data type, such as Pascal and Java, the comparison operators such as > and ≠ are usually defined to return a Boolean value. Conditional and iterative commands may be defined to test Boolean-valued expressions. Languages with no exp ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pointer (computer Programming)

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer ''references'' a location in memory, and obtaining the value stored at that location is known as ''dereferencing'' the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture. Using pointers significantly improves performance for repetitive operations, like traversing iterable data structures (e.g. strings, lookup tables, control tables and tree structures). In particular, it is often much cheaper in time and space to copy and dereference pointers th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as The Internet Protocol () refer to an 8-bit byte as an octet. Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness. The first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Signedness

In computing, signedness is a property of data types representing numbers in computer programs. A numeric variable is ''signed'' if it can represent both positive and negative numbers, and ''unsigned'' if it can only represent non-negative numbers (zero or positive numbers). As ''signed'' numbers can represent negative numbers, they lose a range of positive numbers that can only be represented with ''unsigned'' numbers of the same size (in bits) because roughly half the possible values are non-positive values, whereas the respective unsigned type can dedicate all the possible values to the positive number range. For example, a two's complement signed 16-bit integer can hold the values −32768 to 32767 inclusively, while an unsigned 16 bit integer can hold the values 0 to 65535. For this sign representation method, the leftmost bit (most significant bit) denotes whether the value is negative (0 for positive or zero, 1 for negative). In programming languages For most architect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Integer

An integer is the number zero (), a positive natural number (, , , etc.) or a negative integer with a minus sign (−1, −2, −3, etc.). The negative numbers are the additive inverses of the corresponding positive numbers. In the language of mathematics, the set of integers is often denoted by the boldface or blackboard bold \mathbb. The set of natural numbers \mathbb is a subset of \mathbb, which in turn is a subset of the set of all rational numbers \mathbb, itself a subset of the real numbers \mathbb. Like the natural numbers, \mathbb is countably infinite. An integer may be regarded as a real number that can be written without a fractional component. For example, 21, 4, 0, and −2048 are integers, while 9.75, , and are not. The integers form the smallest group and the smallest ring containing the natural numbers. In algebraic number theory, the integers are sometimes qualified as rational integers to distinguish them from the more general algebraic integers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parsing

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term ''parsing'' comes from Latin ''pars'' (''orationis''), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing algor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Enumeration

An enumeration is a complete, ordered listing of all the items in a collection. The term is commonly used in mathematics and computer science to refer to a listing of all of the elements of a set. The precise requirements for an enumeration (for example, whether the set must be finite, or whether the list is allowed to contain repetitions) depend on the discipline of study and the context of a given problem. Some sets can be enumerated by means of a natural ordering (such as 1, 2, 3, 4, ... for the set of positive integers), but in other cases it may be necessary to impose a (perhaps arbitrary) ordering. In some contexts, such as enumerative combinatorics, the term ''enumeration'' is used more in the sense of ''counting'' – with emphasis on determination of the number of elements that a set contains, rather than the production of an explicit listing of those elements. Combinatorics In combinatorics, enumeration means counting, i.e., determining the exact number of elemen ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Type

In computer science and computer programming, a data type (or simply type) is a set of possible values and a set of allowed operations on it. A data type tells the compiler or interpreter how the programmer intends to use the data. Most programming languages support basic data types of integer numbers (of varying sizes), floating-point numbers (which approximate real numbers), characters and Booleans. A data type constrains the possible values that an expression, such as a variable or a function, might take. This data type defines the operations that can be done on the data, the meaning of the data, and the way values of that type can be stored. Concept A data type is a collection or grouping of data values. Such a grouping may be defined for many reasons: similarity, convenience, or to focus the attention. It is frequently a matter of good organization that aids the understanding of complex definitions. Almost all programming languages explicitly include the notion of data ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

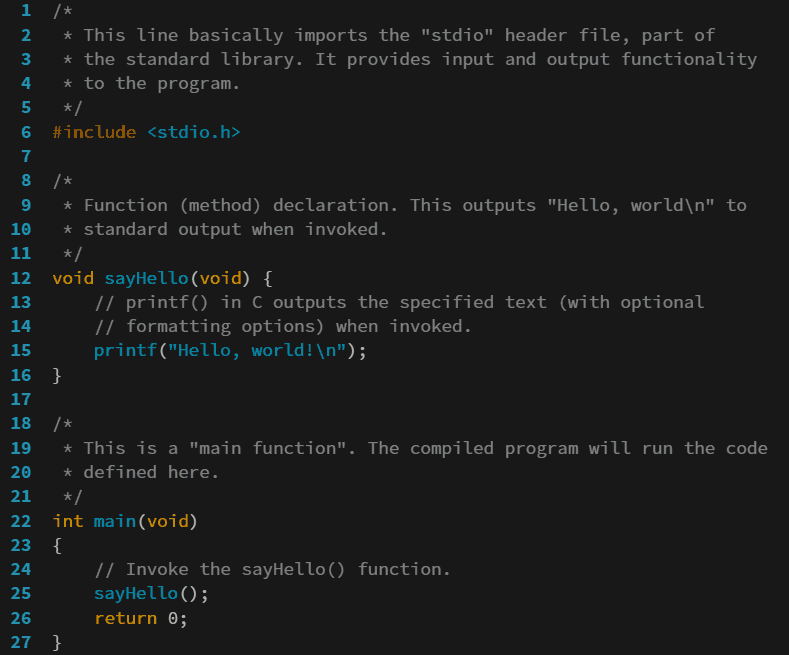

Subroutine

In computer programming, a function or subroutine is a sequence of program instructions that performs a specific task, packaged as a unit. This unit can then be used in programs wherever that particular task should be performed. Functions may be defined within programs, or separately in libraries that can be used by many programs. In different programming languages, a function may be called a routine, subprogram, subroutine, method, or procedure. Technically, these terms all have different definitions, and the nomenclature varies from language to language. The generic umbrella term ''callable unit'' is sometimes used. A function is often coded so that it can be started several times and from several places during one execution of the program, including from other functions, and then branch back (''return'') to the next instruction after the ''call'', once the function's task is done. The idea of a subroutine was initially conceived by John Mauchly during his work on ENIAC, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |