|

Cerebellar Model Articulation Controller

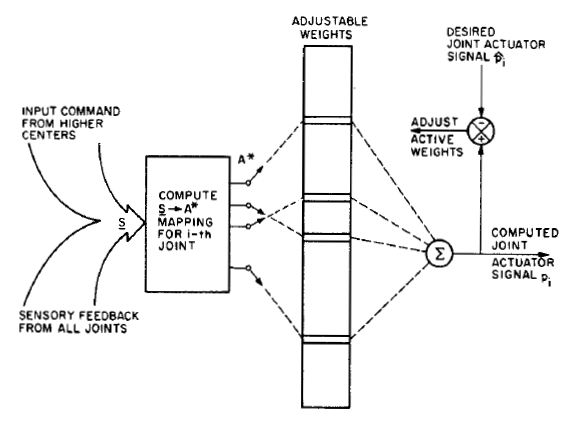

The cerebellar model arithmetic computer (CMAC) is a type of neural network based on a model of the mammalian cerebellum. It is also known as the cerebellar model articulation controller. It is a type of associative memory. The CMAC was first proposed as a function modeler for robotic controllers by James Albus in 1975 (hence the name), but has been extensively used in reinforcement learning and also as for automated classification in the machine learning Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine ... community. The CMAC is an extension of the perceptron model. It computes a function for n input dimensions. The input space is divided up into hyper-rectangles, each of which is associated with a memory cell. The contents of the memory cells are the weights, which are adjusted du ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Artificial Neural Networks

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals then processes them and can signal neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called ''edges''. Neurons and edges typically have a '' weight'' that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typicall ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computational Neuroscience

Computational neuroscience (also known as theoretical neuroscience or mathematical neuroscience) is a branch of neuroscience which employs mathematical models, computer simulations, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system. Computational neuroscience employs computational simulations to validate and solve mathematical models, and so can be seen as a sub-field of theoretical neuroscience; however, the two fields are often synonymous. The term mathematical neuroscience is also used sometimes, to stress the quantitative nature of the field. Computational neuroscience focuses on the description of biologically plausible neurons (and neural systems) and their physiology and dynamics, and it is therefore not directly concerned with biologically unrealistic models used in connectionism, control theory, cybernetics, quantitative psychol ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be Supervised learning, supervised, Semi-supervised learning, semi-supervised or Unsupervised learning, unsupervised. Deep-learning architectures such as #Deep_neural_networks, deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and Transformer (machine learning model), Transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, Climatology, climate science, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Recursive Least Squares Filter

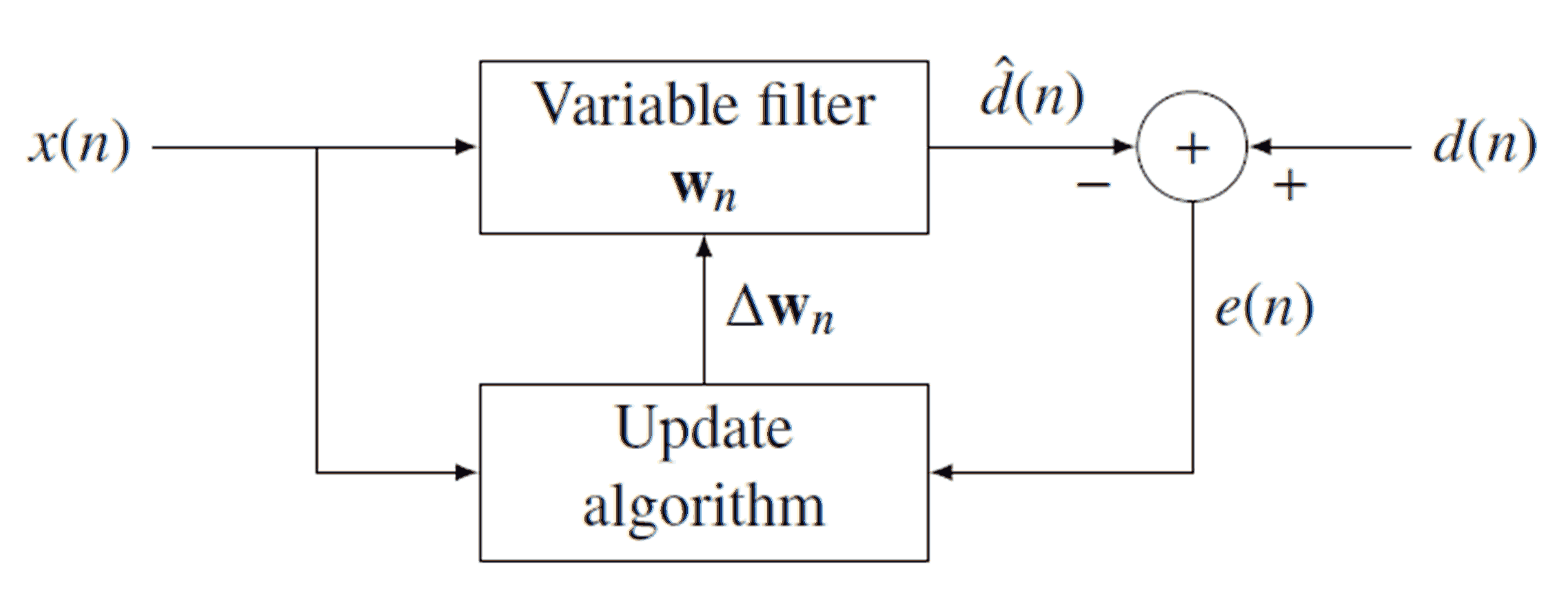

Recursive least squares (RLS) is an adaptive filter algorithm that recursively finds the coefficients that minimize a weighted linear least squares cost function relating to the input signals. This approach is in contrast to other algorithms such as the least mean squares (LMS) that aim to reduce the mean square error. In the derivation of the RLS, the input signals are considered deterministic, while for the LMS and similar algorithms they are considered stochastic. Compared to most of its competitors, the RLS exhibits extremely fast convergence. However, this benefit comes at the cost of high computational complexity. Motivation RLS was discovered by Gauss but lay unused or ignored until 1950 when Plackett rediscovered the original work of Gauss from 1821. In general, the RLS can be used to solve any problem that can be solved by adaptive filters. For example, suppose that a signal d(n) is transmitted over an echoey, noisy channel that causes it to be received as :x(n)=\sum_^ ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Artificial Neural Network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals then processes them and can signal neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called ''edges''. Neurons and edges typically have a ''weight'' that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hash Function

A hash function is any function that can be used to map data of arbitrary size to fixed-size values. The values returned by a hash function are called ''hash values'', ''hash codes'', ''digests'', or simply ''hashes''. The values are usually used to index a fixed-size table called a '' hash table''. Use of a hash function to index a hash table is called ''hashing'' or ''scatter storage addressing''. Hash functions and their associated hash tables are used in data storage and retrieval applications to access data in a small and nearly constant time per retrieval. They require an amount of storage space only fractionally greater than the total space required for the data or records themselves. Hashing is a computationally and storage space-efficient form of data access that avoids the non-constant access time of ordered and unordered lists and structured trees, and the often exponential storage requirements of direct access of state spaces of large or variable-length keys. Use ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cerebellum

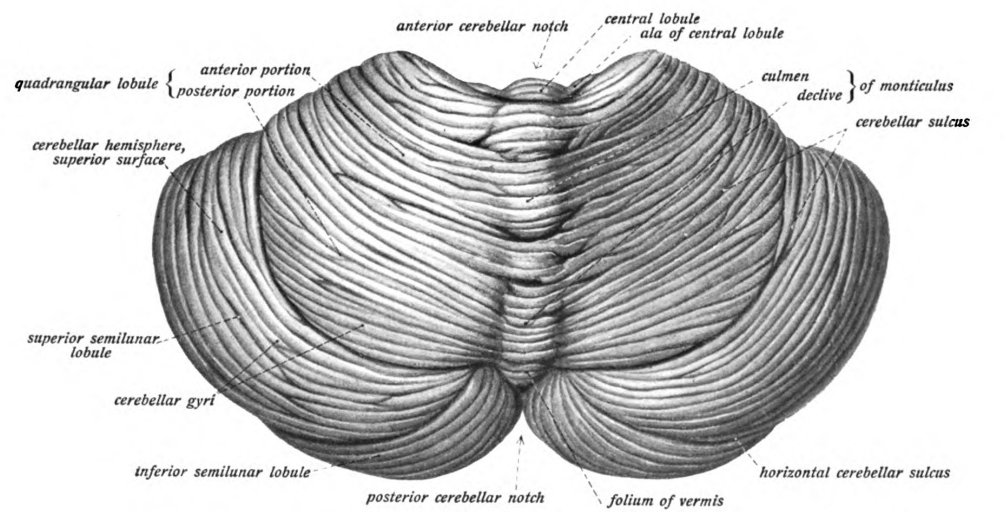

The cerebellum (Latin for "little brain") is a major feature of the hindbrain of all vertebrates. Although usually smaller than the cerebrum, in some animals such as the mormyrid fishes it may be as large as or even larger. In humans, the cerebellum plays an important role in motor control. It may also be involved in some cognitive functions such as attention and language as well as emotional control such as regulating fear and pleasure responses, but its movement-related functions are the most solidly established. The human cerebellum does not initiate movement, but contributes to coordination, precision, and accurate timing: it receives input from sensory systems of the spinal cord and from other parts of the brain, and integrates these inputs to fine-tune motor activity. Cerebellar damage produces disorders in fine movement, equilibrium, posture, and motor learning in humans. Anatomically, the human cerebellum has the appearance of a separate structure attached to th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Perceptron

In machine learning, the perceptron (or McCulloch-Pitts neuron) is an algorithm for supervised classification, supervised learning of binary classification, binary classifiers. A binary classifier is a function which can decide whether or not an input, represented by a vector of numbers, belongs to some specific class. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of Weighting, weights with the feature vector. History The perceptron was invented in 1943 by McCulloch and Pitts. The first implementation was a machine built in 1958 at the Cornell Aeronautical Laboratory by Frank Rosenblatt, funded by the United States Office of Naval Research. The perceptron was intended to be a machine, rather than a program, and while its first implementation was in software for the IBM 704, it was subsequently implemented in custom-built hardware as the "Mark 1 perceptron". This machine was de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |