
Calculating Demand Forecast Accuracy

Demand forecasting is the process of estimating future customer demand over a specific period. Generally, demand forecasting draws on historical data and other analytical information to produce the most accurate predictions possible. More specifically, demand forecasting methods apply predictive analytics to historical data to understand and predict customer demand. This helps businesses understand key economic conditions and make crucial supply decisions that optimise profitability. Demand forecasting methods are divided into two major categories: qualitative and quantitative. Qualitative methods are based on expert opinion and information gathered from the field. They are mostly used when there is minimal data available to analyse, for example when a business or product is newly introduced to the market. Quantitative methods, however, use data and analytical tools to create predictions. Demand f ...
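The heading refers to calculating forecast accuracy, but the text above stops before any accuracy measure is defined. As a hedged illustration only, the sketch below computes three error measures that are commonly used for demand forecasts: mean absolute error (MAE), mean absolute percentage error (MAPE) and mean bias. The function name `forecast_accuracy` and the sample figures are hypothetical.

```python
def forecast_accuracy(actuals, forecasts):
    """Return MAE, MAPE (%) and mean bias for paired actual/forecast demand.

    Illustrative sketch: MAPE is reported as None when any actual is zero,
    because the percentage error is undefined in that case.
    """
    if not actuals or len(actuals) != len(forecasts):
        raise ValueError("need two non-empty sequences of equal length")
    errors = [f - a for a, f in zip(actuals, forecasts)]  # forecast minus actual
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    bias = sum(errors) / n  # positive means over-forecasting on average
    if any(a == 0 for a in actuals):
        mape = None
    else:
        mape = 100 * sum(abs(e) / abs(a) for e, a in zip(errors, actuals)) / n
    return {"MAE": mae, "MAPE": mape, "bias": bias}


# Hypothetical demand for two periods versus what was forecast
print(forecast_accuracy([100, 200], [110, 180]))
```

For these invented figures the errors are +10 and −20, so MAE is 15 units, bias is −5 units and MAPE is 10%. MAE keeps the units of demand, while MAPE is scale-free but breaks down when actual demand is zero or very small.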

Predictive Analytics

Predictive analytics encompasses a variety of statistical techniques from data mining, predictive modeling, and machine learning that analyze current and historical facts to make predictions about future or otherwise unknown events. In business, predictive models exploit patterns found in historical and transactional data to identify risks and opportunities. Models capture relationships among many factors to allow assessment of the risk or potential associated with a particular set of conditions, guiding decision-making for candidate transactions. The defining functional effect of these technical approaches is that predictive analytics provides a predictive score (probability) for each individual (customer, employee, healthcare patient, product SKU, vehicle, component, machine, or other organizational unit). This score is used to determine, inform, or influence organizational processes that apply across large numbers of individuals, such as in marketing, credit risk assessment, fraud detecti ...
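To make the idea of a per-individual predictive score concrete, the sketch below uses a logistic model, which is one common choice among many. The feature names, weights and bias are invented for illustration and do not come from any real scoring system. The function maps each individual's features to a value between 0 and 1, and the individuals are then ranked so that the highest scores can be acted on first.

```python
import math

# Hypothetical logistic model: feature names, weights and bias are invented
# for illustration and are not taken from any real scoring system.
WEIGHTS = {"months_inactive": 0.8, "support_tickets": 0.3, "tenure_years": -0.5}
BIAS = -2.0


def predictive_score(features):
    """Map an individual's features to a probability-like score in (0, 1)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) link


customers = {
    "c1": {"months_inactive": 0, "support_tickets": 0, "tenure_years": 5},
    "c2": {"months_inactive": 4, "support_tickets": 3, "tenure_years": 1},
}

# Rank individuals so the highest-scoring ones can be acted on first
ranked = sorted(customers, key=lambda c: predictive_score(customers[c]), reverse=True)
print(ranked)
```

In a real application the weights would be estimated from historical data rather than set by hand.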

Prediction Market

Prediction markets (also known as betting markets, information markets, decision markets, idea futures or event derivatives) are open markets in which specific outcomes can be predicted using financial incentives. Essentially, they are exchange-traded markets created for the purpose of trading on the outcome of events. Market prices can indicate what the crowd thinks the probability of an event is. A prediction market contract trades between 0 and 100%. The most common form of prediction market is a binary option market, whose contracts expire at a price of either 0 or 100%. Prediction markets can be thought of as belonging to the more general concept of crowdsourcing, specially designed to aggregate information on particular topics of interest. The main purposes of prediction markets are eliciting and aggregating beliefs about an unknown future outcome. Traders with different beliefs trade on contracts whose payoffs are related to the unknown future outcome, and the market prices of the ...
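The price of a binary contract can be read directly as the crowd's probability estimate. The sketch below assumes, for illustration, a contract that pays 100 units if the event occurs and 0 otherwise, and it ignores fees. It also shows why a trader whose belief differs from the price has an incentive to trade, which is how individual beliefs get aggregated into the price. Both function names are hypothetical.

```python
def implied_probability(price):
    """Read a binary contract's price (0-100, paying 100 if the event occurs) as a probability."""
    if not 0 <= price <= 100:
        raise ValueError("price must lie between 0 and 100")
    return price / 100


def expected_profit(price, believed_probability):
    """Expected profit per contract for a trader who buys at `price`
    and believes the event occurs with `believed_probability`."""
    # Payoff is 100 with probability p and 0 otherwise; the cost is the price paid
    return believed_probability * 100 - price


print(implied_probability(62))    # market's implied probability
print(expected_profit(62, 0.70))  # positive: this trader expects to gain by buying
```

A trader who believes the probability is 70% sees an expected gain of 8 units per contract at a price of 62. Buying pushes the price up toward that belief.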

Leading Indicator

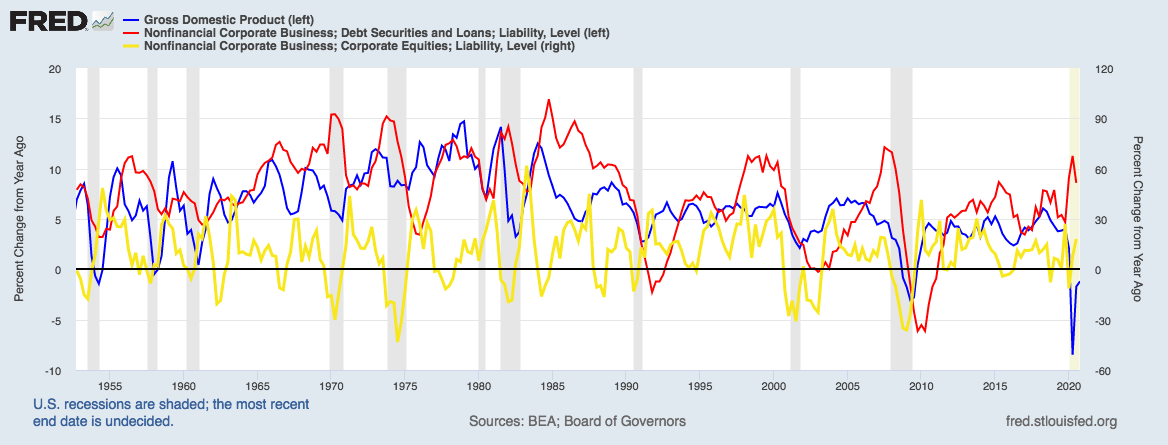

An economic indicator is a statistic about an economic activity. Economic indicators allow analysis of economic performance and predictions of future performance. One application of economic indicators is the study of business cycles. Economic indicators include various indices, earnings reports, and economic summaries. Examples are the unemployment rate, quits rate (quit rate in American English), housing starts, consumer price index (a measure of inflation), inverted yield curve, consumer leverage ratio, industrial production, bankruptcies, gross domestic product, broadband internet penetration, retail sales, price index, and money supply changes. The leading business cycle dating committee in the United States of America is that of the private National Bureau of Economic Research. The Bureau of Labor Statistics is the principal fact-finding agency for the U.S. government in the field of labor economics and statistics. Other producers of economic indicators include the United State ...
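A common way to check whether an indicator leads the series it is meant to predict, though by no means the only one, is to correlate the indicator at time t with the target at time t + k for several lags k. The sketch below does this with a plain Pearson correlation. The series and the function names `pearson` and `best_lead` are hypothetical. A high correlation at some lag suggests a leading relationship but does not prove one.

```python
def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def best_lead(indicator, target, max_lag):
    """Return (lag, r) for the lag at which indicator[t] best correlates with target[t + lag]."""
    if len(indicator) != len(target) or max_lag >= len(target) - 1:
        raise ValueError("series must have equal length and max_lag must leave 2+ points")
    results = []
    for lag in range(max_lag + 1):
        x = indicator[: len(indicator) - lag]  # drop the last `lag` indicator values
        y = target[lag:]                       # drop the first `lag` target values
        results.append((lag, pearson(x, y)))
    return max(results, key=lambda pair: pair[1])


# Hypothetical monthly series in which the target echoes the indicator two months later
indicator = [1, 3, 2, 5, 4, 6, 8, 7]
target = [0, 0] + indicator[:-2]
print(best_lead(indicator, target, max_lag=3))
```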

Exponential Smoothing

Exponential smoothing is a rule-of-thumb technique for smoothing time series data using the exponential window function. The simple moving average weights past observations equally, whereas exponential smoothing uses exponential functions to assign exponentially decreasing weights over time. It is an easily learned and easily applied procedure for making some determination based on prior assumptions by the user, such as seasonality. Exponential smoothing is often used for analysis of time-series data. It is one of many window functions commonly applied to smooth data in signal processing, acting as low-pass filters to remove high-frequency noise. The method was preceded by Poisson's use of recursive exponential window functions in convolutions in the 19th century, and by Kolmogorov and Zurbenko's use of recursive moving averages in their studies of turbulence in the 1940s. The raw data sequence is often represented by $\{x_t\}$, beginning at time $t = 0$, and the outpu ...
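The recursion behind simple exponential smoothing can be written as $s_0 = x_0$ and $s_t = \alpha x_t + (1 - \alpha) s_{t-1}$ for $0 < \alpha \le 1$. Unrolling it shows that observation $x_{t-k}$ receives weight $\alpha(1-\alpha)^k$, which is where the exponentially decreasing weights come from. The sketch below assumes this simplest variant, with no trend or seasonal terms, and the common initialisation $s_0 = x_0$. Other initialisations exist.

```python
def exponential_smoothing(xs, alpha):
    """Simple exponential smoothing: s_0 = x_0, s_t = alpha*x_t + (1 - alpha)*s_{t-1}."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not xs:
        return []
    smoothed = [float(xs[0])]  # initialise with the first observation
    for x in xs[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


demand = [3, 5, 9]
print(exponential_smoothing(demand, alpha=0.5))
```

With alpha = 0.5 the smoothed values are 3, 4 and 6.5. In forecasting use, the last smoothed value is typically taken as the one-step-ahead forecast. A larger alpha reacts faster to recent changes, while a smaller alpha smooths more heavily.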

Moving Average

In statistics, a moving average (rolling average or running average) is a calculation that analyzes data points by creating a series of averages of different subsets of the full data set. It is also called a moving mean (MM) or rolling mean and is a type of finite impulse response filter. Variations include simple, cumulative, and weighted forms. Given a series of numbers and a fixed subset size, the first element of the moving average is obtained by taking the average of the initial fixed subset of the number series. Then the subset is modified by "shifting forward": the first number of the series is excluded and the next value is included in the subset. A moving average is commonly used with time series data to smooth out short-term fluctuations and highlight longer-term trends or cycles. The threshold between short-term and long-term depends on the application, and the parameters of the moving average are set accordingly. It is also used in economics ...
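The "shifting forward" step described above can be implemented with a running sum. Each new average then costs one addition and one subtraction instead of a re-sum of the whole window. The following is a minimal sketch of the simple (unweighted) form; the function name is chosen for illustration.

```python
def simple_moving_average(xs, window):
    """Return the simple moving averages of `xs` over a fixed window size."""
    if not 0 < window <= len(xs):
        raise ValueError("window must be between 1 and len(xs)")
    total = sum(xs[:window])        # sum of the initial fixed subset
    averages = [total / window]
    for i in range(window, len(xs)):
        total += xs[i] - xs[i - window]  # include the next value, exclude the oldest
        averages.append(total / window)
    return averages


print(simple_moving_average([1, 2, 3, 4, 5], window=3))
```

For long series of floating-point values the running sum can slowly accumulate rounding error. Recomputing the window sum occasionally is a simple safeguard.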

Causal Model

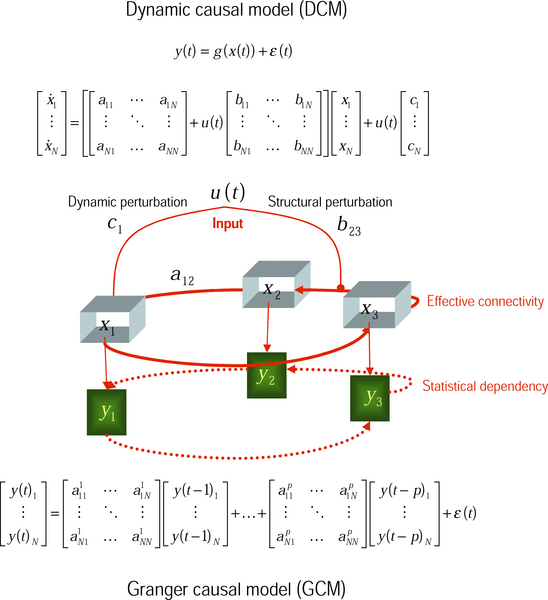

In the philosophy of science, a causal model (or structural causal model) is a conceptual model that describes the causal mechanisms of a system. Causal models can improve study designs by providing clear rules for deciding which independent variables need to be included or controlled for. They can allow some questions to be answered from existing observational data without the need for an interventional study such as a randomized controlled trial. Some interventional studies are inappropriate for ethical or practical reasons, so without a causal model some hypotheses cannot be tested. Causal models can help with the question of external validity (whether results from one study apply to unstudied populations). In certain circumstances, causal models allow data from multiple studies to be merged to answer questions that no individual data set can answer. Causal models have found applications in signal processing, epidemiology and machine learning. ...
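One concrete rule of this kind is back-door adjustment. Suppose a variable Z blocks every back-door path from a treatment X to an outcome Y. Then the interventional quantity can be estimated from observational data as $P(Y=1 \mid do(X=x)) = \sum_z P(Y=1 \mid X=x, Z=z)\,P(Z=z)$. The sketch below makes several assumptions: the variables are binary, Z is a valid adjustment set (something a causal model would have to justify), and the records are invented. `backdoor_adjusted` and `naive_conditional` are hypothetical names.

```python
from collections import Counter


def naive_conditional(records, x):
    """P(Y=1 | X=x) computed directly from (z, x, y) records, ignoring Z."""
    ys = [y for _, xx, y in records if xx == x]
    return sum(ys) / len(ys)


def backdoor_adjusted(records, x):
    """P(Y=1 | do(X=x)) = sum_z P(Y=1 | X=x, Z=z) * P(Z=z), assuming Z is a valid adjustment set."""
    n = len(records)
    z_counts = Counter(z for z, _, _ in records)
    total = 0.0
    for z, count in z_counts.items():
        stratum = [y for zz, xx, y in records if zz == z and xx == x]
        if not stratum:
            raise ValueError(f"no records with X={x} when Z={z}; cannot adjust")
        total += (sum(stratum) / len(stratum)) * (count / n)
    return total


# Invented observational records (z, x, y) in which Z is associated with both X and Y
records = (
    [(0, 0, 1)] + [(0, 0, 0)] * 3          # Z=0, X=0: 1 of 4 have Y=1
    + [(0, 1, 1)] * 2 + [(0, 1, 0)] * 2    # Z=0, X=1: 2 of 4
    + [(1, 0, 1), (1, 0, 0)]               # Z=1, X=0: 1 of 2
    + [(1, 1, 1)] * 6                      # Z=1, X=1: 6 of 6
)
print(naive_conditional(records, 1), backdoor_adjusted(records, 1))
```

Here the naive conditional probability for X=1 is 0.8, while the adjusted estimate is 0.75. The gap is the part of the association that this causal model attributes to Z rather than to X.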

Conjoint Analysis (in Marketing)

Conjoint analysis is a survey-based statistical technique used in market research that helps determine how people value the different attributes (features, functions, benefits) that make up an individual product or service. The objective of conjoint analysis is to determine which combination of a limited number of attributes is most influential on respondent choice or decision-making. A controlled set of potential products or services is shown to survey respondents. By analyzing how they choose among these products, researchers can determine the implicit valuation of the individual elements making up the product or service. These implicit valuations (utilities or part-worths) can be used to create market models that estimate the market share, revenue and even profitability of new designs. Conjoint analysis originated in mathematical psychology and was developed by marketing professor Paul E. Green at the Wharton School of the University of Pennsylvania. Other prominent conjoint analysis pi ...
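Once part-worths have been estimated, a simple market model adds them up for each product profile and converts the totals into predicted shares. The sketch below uses a multinomial logit ("share of preference") rule, which is one common choice among several. The attributes, levels and part-worth values are invented for illustration and are not estimates from any study.

```python
import math

# Hypothetical part-worth utilities per attribute level (not real estimates)
PART_WORTHS = {
    "brand": {"A": 0.5, "B": 0.0},
    "price": {"$10": 0.8, "$15": 0.0},
}


def utility(profile):
    """Total utility of a product profile = sum of the part-worths of its levels."""
    return sum(PART_WORTHS[attr][level] for attr, level in profile.items())


def logit_shares(profiles):
    """Predicted share of preference for each profile under a multinomial logit rule."""
    exps = [math.exp(utility(p)) for p in profiles]
    total = sum(exps)
    return [e / total for e in exps]


products = [
    {"brand": "A", "price": "$15"},  # utility 0.5
    {"brand": "B", "price": "$10"},  # utility 0.8
]
print(logit_shares(products))
```

Revenue or profit estimates would then multiply these shares by market size and margin, which the sketch leaves out.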

Neural Network

A neural network is a network or circuit of biological neurons or, in a modern sense, an artificial neural network composed of artificial neurons or nodes. Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while negative values mean inhibitory connections. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or between −1 and 1. These artificial networks may be used for predictive modeling, adaptive control and other applications where they can be trained on a dataset. Self-learning resulting from e ...
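The description above corresponds to a single artificial neuron: weighted inputs are summed into a linear combination, and the result is passed through an activation function. The following is a minimal sketch. It assumes a bias term and offers two activations: the logistic sigmoid for outputs in (0, 1) and tanh for outputs in (−1, 1). The weights are arbitrary examples, with one positive (excitatory) and one negative (inhibitory).

```python
import math


def neuron(inputs, weights, bias=0.0, activation="sigmoid"):
    """Output of a single artificial neuron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Linear combination: each input is modified by its weight and the results are summed
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function controls the amplitude of the output
    if activation == "sigmoid":
        return 1.0 / (1.0 + math.exp(-z))  # range (0, 1)
    if activation == "tanh":
        return math.tanh(z)                # range (-1, 1)
    raise ValueError(f"unknown activation: {activation}")


# One excitatory (positive) and one inhibitory (negative) connection
print(neuron([1.0, 2.0], [0.5, -0.25]))
print(neuron([1.0, 2.0], [0.5, -0.25], activation="tanh"))
```

Here the two weighted inputs cancel exactly, so the linear combination is 0. The sigmoid output is therefore 0.5 and the tanh output is 0.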

Reference Class Forecasting

Reference class forecasting, or comparison class forecasting, is a method of predicting the future by looking at similar past situations and their outcomes. The theories behind reference class forecasting were developed by Daniel Kahneman and Amos Tversky, and this theoretical work helped Kahneman win the Nobel Prize in Economics. Reference class forecasting is so named because it predicts the outcome of a planned action based on actual outcomes in a reference class of actions similar to the one being forecast. The question of which reference class to use when forecasting a given situation is known as the reference class problem. In Decision Research Technical Report PTR-1042-77-6, Kahneman and Tversky found that human judgment is generally optimistic due to overconfidence and insufficient consideration of distributional information about outcomes. People tend to underestimate the costs, completion times, and risks of planned actions, whereas they tend to overestimate the benefits of ...
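In practice, the method is often applied in two steps. First, collect the ratio of actual to estimated outcomes (for example, cost overruns) for a reference class of comparable past projects. Then scale a new estimate by a chosen percentile of that distribution. The sketch below assumes that approach, uses a simple nearest-rank percentile, and runs on invented ratios. The chosen percentile (the 80th here) reflects how much risk of overrun the planner is willing to accept. All function names are hypothetical.

```python
import math


def nearest_rank_percentile(values, p):
    """Nearest-rank percentile (0 < p <= 100) of a non-empty list."""
    if not values or not 0 < p <= 100:
        raise ValueError("need non-empty values and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def reference_class_forecast(base_estimate, past_ratios, p=80):
    """Scale a base estimate by the p-th percentile of past actual/estimated ratios."""
    return base_estimate * nearest_rank_percentile(past_ratios, p)


# Invented actual/estimated cost ratios from ten comparable past projects
ratios = [1.0, 1.1, 1.2, 1.3, 1.5, 2.0, 1.05, 0.95, 1.4, 1.25]
print(reference_class_forecast(1_000_000, ratios, p=80))
```

With these invented ratios, the 80th-percentile uplift is 1.4. A base estimate of 1,000,000 therefore becomes about 1,400,000.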

Group Method Of Data Handling

Group method of data handling (GMDH) is a family of inductive algorithms for computer-based mathematical modeling of multi-parametric datasets that features fully automatic structural and parametric optimization of models. GMDH is used in such fields as data mining, knowledge discovery, prediction, complex systems modeling, optimization and pattern recognition. GMDH algorithms are characterized by an inductive procedure that sorts through progressively more complex polynomial models and selects the best solution by means of the external criterion. A GMDH model with multiple inputs and one output is a subset of components of the base function (1):

$$Y(x_1,\dots,x_n) = a_0 + \sum_{i=1}^{m} a_i f_i \qquad (1)$$

where $f_i$ are elementary functions dependent on different sets of inputs, $a_i$ are coefficients and $m$ is the number of base function components. In order to find the best solution, GMDH algorithms consider various component subsets of the base function (1) called ...
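The selection idea can be sketched in a few dozen lines. The sketch below follows an exhaustive, combinatorial variant. It enumerates subsets of a small set of candidate components $f_i$ in order of increasing size. For each partial model it fits the coefficients $a_i$ by least squares on a training split, and it uses squared error on a separate validation split as the external criterion. That particular criterion, the candidate components and the data are assumptions for illustration. GMDH implementations also use other criteria and multilayer (iterative) variants.

```python
from itertools import combinations


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit(rows, ys, funcs):
    """Least-squares coefficients [a_0, a_1, ...] for Y = a_0 + sum a_i f_i (normal equations)."""
    design = [[1.0] + [f(row) for f in funcs] for row in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(coefs, funcs, row):
    return coefs[0] + sum(a * f(row) for a, f in zip(coefs[1:], funcs))


def combinatorial_gmdh(train, valid, candidates, max_terms=2):
    """Fit every subset of candidate components (up to max_terms) on `train`
    and keep the one with the lowest squared error on `valid`."""
    best = None
    for size in range(1, max_terms + 1):  # progressively more complex partial models
        for subset in combinations(candidates, size):
            funcs = [f for _, f in subset]
            coefs = fit(train[0], train[1], funcs)
            err = sum((predict(coefs, funcs, r) - y) ** 2 for r, y in zip(*valid))
            if best is None or err < best[0]:
                best = (err, [name for name, _ in subset], coefs)
    return best


def truth(r):
    """Invented data-generating rule: Y = 1 + 2*x1 + 0.5*x1*x2."""
    return 1 + 2 * r[0] + 0.5 * r[0] * r[1]


train_rows = [(1, 2), (2, 1), (3, 5), (4, 3), (5, 7), (6, 2)]
valid_rows = [(2, 4), (7, 1), (3, 3)]
candidates = [("x1", lambda r: r[0]), ("x2", lambda r: r[1]), ("x1*x2", lambda r: r[0] * r[1])]
err, names, coefs = combinatorial_gmdh(
    (train_rows, [truth(r) for r in train_rows]),
    (valid_rows, [truth(r) for r in valid_rows]),
    candidates,
)
print(names, [round(c, 3) for c in coefs])
```

Because the validation data are not used for fitting, a partial model that merely fits the training split well without matching the underlying rule tends to be rejected. That is the role the external criterion plays.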

Extrapolation

In mathematics, extrapolation is a type of estimation, beyond the original observation range, of the value of a variable on the basis of its relationship with another variable. It is similar to interpolation, which produces estimates between known observations, but extrapolation is subject to greater uncertainty and a higher risk of producing meaningless results. Extrapolation may also mean the extension of a method, assuming similar methods will be applicable. Extrapolation may also apply to human experience, projecting, extending, or expanding known experience into an area not known or previously experienced so as to arrive at a (usually conjectural) knowledge of the unknown [Extrapolation entry at Merriam–Webster] (e.g. a ...
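Linear extrapolation is the simplest concrete case: the straight line through two observations is evaluated at a point outside their range. The same formula gives an interpolation when the point lies between the observations, which makes the contrast drawn in the paragraph above easy to see. The function names are illustrative. The sketch cannot say whether a linear trend actually continues beyond the data, and that is exactly where the extra uncertainty of extrapolation comes from.

```python
def linear_extrapolate(p0, p1, x):
    """Estimate y at x from the straight line through two known points (x0, y0) and (x1, y1)."""
    (x0, y0), (x1, y1) = p0, p1
    if x0 == x1:
        raise ValueError("the two known points need different x values")
    slope = (y1 - y0) / (x1 - x0)
    return y0 + slope * (x - x0)


def is_extrapolation(p0, p1, x):
    """True when x lies outside the observed range [min(x0, x1), max(x0, x1)]."""
    lo, hi = sorted((p0[0], p1[0]))
    return not lo <= x <= hi


known = ((1, 2), (3, 6))
for x in (2, 5):
    kind = "extrapolation" if is_extrapolation(*known, x) else "interpolation"
    print(x, linear_extrapolate(*known, x), kind)
```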