
Data Analysis

Data analysis is a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and confirmatory data analysis (CDA). EDA focuses on discoverin ...
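As a rough illustration of the stages named in this definition, the sketch below runs a tiny, made-up set of records through inspection, cleansing, transformation, and modeling. The field names `x` and `y`, the rule that any empty field makes a record unusable, and the choice of an ordinary least-squares line as the model are all assumptions chosen for brevity; real analyses vary widely at every stage.

```python
from statistics import mean

# Hypothetical raw records; the field names "x" and "y" are illustrative only.
RAW = [
    {"x": "1", "y": "2.1"},
    {"x": "2", "y": "3.9"},
    {"x": "", "y": "5.0"},  # missing x: removed during cleansing
    {"x": "3", "y": "6.2"},
    {"x": "4", "y": "7.8"},
]

def count_incomplete(records):
    """Inspect: count records that have at least one empty field."""
    return sum(1 for r in records if any(v.strip() == "" for v in r.values()))

def cleanse(records):
    """Cleanse: keep only records whose fields are all non-empty (assumed rule)."""
    return [r for r in records if all(v.strip() != "" for v in r.values())]

def transform(records):
    """Transform: parse the string fields into parallel lists of floats."""
    return [float(r["x"]) for r in records], [float(r["y"]) for r in records]

def fit_line(xs, ys):
    """Model: ordinary least-squares fit of y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

print("incomplete records:", count_incomplete(RAW))
xs, ys = transform(cleanse(RAW))
slope, intercept = fit_line(xs, ys)
print(f"fitted line: y = {slope:.2f} * x + {intercept:.2f}")
```

Keeping each stage in its own function makes it easy to inspect intermediate results, which is where exploratory work usually happens.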

Data Cleansing

Data cleansing, or data cleaning, is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database. It involves identifying incomplete, incorrect, inaccurate, or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall. After cleansing, a data set should be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleaning differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at the time of entry, rather than on batches of data. The actual pro ...
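The sketch below shows batch cleansing in the sense described above: records are normalized, different spellings of the same entity are reconciled, incomplete or implausible rows are removed, and duplicates that only become visible after normalization are dropped. The field names, the hypothetical `COUNTRY_ALIASES` table, and the plausibility rule for `age` are assumptions for illustration. Unlike validation at entry time, it operates on data that has already been stored.

```python
# Hypothetical alias table reconciling different "data dictionary" spellings.
COUNTRY_ALIASES = {
    "usa": "US", "us": "US", "united states": "US",
    "uk": "GB", "united kingdom": "GB",
}

def cleanse_records(records):
    """Batch-cleanse a list of dicts: normalize, correct, remove, de-duplicate."""
    cleaned, seen = [], set()
    for rec in records:
        name = rec.get("name", "").strip().title()           # modify: whitespace and case
        country_in = rec.get("country", "").strip()
        country = COUNTRY_ALIASES.get(country_in.lower(), country_in)  # replace synonyms
        try:
            age = int(rec.get("age", ""))
        except ValueError:
            continue                                          # delete: unparseable age
        if not name or not 0 <= age <= 130:                   # assumed plausibility rule
            continue                                          # delete: incomplete or implausible
        key = (name, country, age)
        if key in seen:
            continue                                          # delete: duplicate after normalization
        seen.add(key)
        cleaned.append({"name": name, "country": country, "age": age})
    return cleaned

dirty = [
    {"name": " alice smith", "country": "USA", "age": "34"},
    {"name": "Alice Smith", "country": "united states", "age": "34"},  # duplicate
    {"name": "Bob Jones", "country": "UK", "age": "-5"},               # implausible age
    {"name": "", "country": "US", "age": "40"},                        # missing name
    {"name": "carol white", "country": "gb", "age": "n/a"},            # unparseable age
    {"name": "dan brown", "country": "Canada", "age": "51"},
]
for row in cleanse_records(dirty):
    print(row)
```

Note that `str.title()` is a crude name normalizer (it mishandles names such as "McDonald"); a real pipeline would choose its correction rules per field.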

Raw Data

Raw data, also known as primary data, are data (e.g., numbers, instrument readings, figures) collected from a source. In the context of examinations, the raw data might be described as a raw score, that is, the unadjusted test score. If a scientist sets up a computerized thermometer that records the temperature of a chemical mixture in a test tube every minute, the list of per-minute temperature readings, whether printed on a spreadsheet or viewed on a computer screen, is "raw data". Raw data have not been subjected to processing, to "cleaning" by researchers to remove outliers, obvious instrument reading errors, or data entry errors, or to any analysis (e.g., determining central tendency measures such as the average or median result). Nor have raw data been subjected to any other manipulation by a software program or a human researcher, analyst, or technician. Raw data is a relative term, because even once raw data h ...
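To make the raw/processed distinction concrete, the sketch below keeps the thermometer readings in an immutable tuple and derives a separate cleaned list from them, so the raw data are never modified. The readings, the `-999.0` error value, and the plausibility range used to discard it are hypothetical.

```python
from statistics import mean, median

# Hypothetical per-minute readings in degrees Celsius. The -999.0 value stands in
# for an obvious instrument reading error (an assumption for this example).
RAW_READINGS = (21.4, 21.6, 21.9, -999.0, 22.3, 22.5)  # tuple: raw data stay unmodified

def processed(readings, low=-50.0, high=150.0):
    """Return a new cleaned list; readings outside [low, high] are treated as errors."""
    return [r for r in readings if low <= r <= high]

clean = processed(RAW_READINGS)
print("raw:    ", RAW_READINGS)
print("cleaned:", clean)
print("mean:", round(mean(clean), 2), "median:", median(clean))
```

Because `processed` returns a new list, the raw tuple can still be consulted later, for example to audit which readings were discarded.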

Algorithms

In mathematics and computer science, an algorithm is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert code execution through various routes (referred to as automated decision-making). Describing machines metaphorically in terms of human characteristics was already practiced by Alan Turing, with terms such as "memory", "search", and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm can be expressed within a finite amount of space ...
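The contrast between a guaranteed method and a heuristic can be seen in the classic coin-change problem. Both functions below are finite, well-defined procedures, but only the dynamic-programming version is guaranteed to return the fewest coins; the greedy rule is used here as a heuristic and gives a suboptimal answer for the hypothetical coin set `[1, 3, 4]`. The function names and coin values are illustrative.

```python
def greedy_change(amount, coins):
    """Heuristic: repeatedly take the largest coin that fits.
    Simple and fast, but not guaranteed to use the fewest coins."""
    used = []
    for c in sorted(coins, reverse=True):
        while amount >= c:
            amount -= c
            used.append(c)
    return used if amount == 0 else None  # None: greedy got stuck

def optimal_change(amount, coins):
    """Dynamic programming: guaranteed fewest coins, or None if impossible."""
    best = [[]] + [None] * amount  # best[a] = a fewest-coin list summing to a
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] is not None:
                candidate = best[a - c] + [c]
                if best[a] is None or len(candidate) < len(best[a]):
                    best[a] = candidate
    return best[amount]

coins = [1, 3, 4]
print("greedy: ", greedy_change(6, coins))
print("optimal:", optimal_change(6, coins))
```

For an amount of 6, the greedy rule picks 4 + 1 + 1 (three coins), while the table-based method finds 3 + 3 (two coins). For many everyday coin systems the greedy rule happens to be optimal, which is why it remains a popular heuristic.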

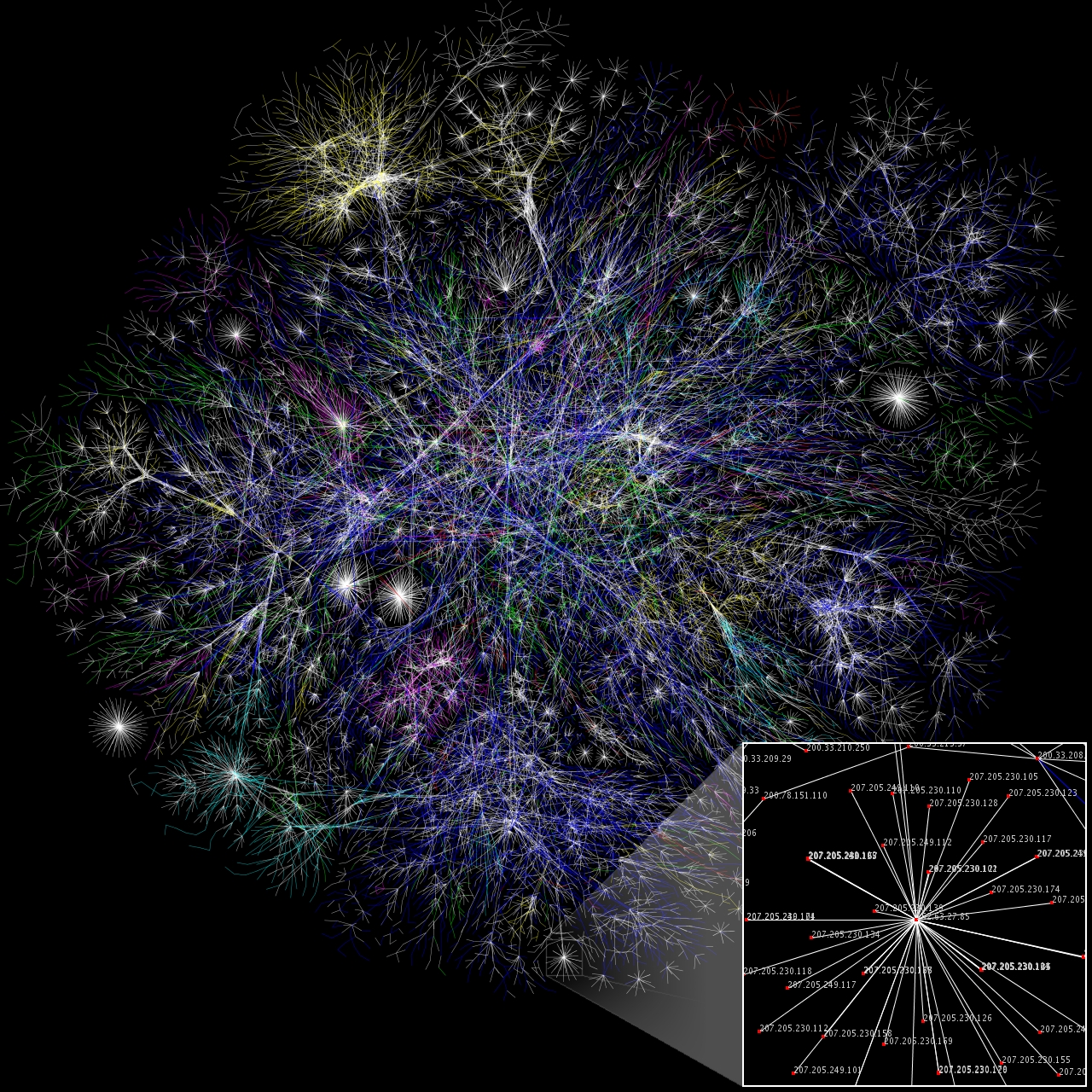

Data Visualization

Data and information visualization (data viz or info viz) is an interdisciplinary field that deals with the graphic representation of data and information. It is a particularly efficient way of communicating when the data or information is numerous, as in a time series. It is also the study of visual representations of abstract data to reinforce human cognition. The abstract data include both numerical and non-numerical data, such as text and geographic information. It is related to infographics and scientific visualization. One distinction is that it is information visualization when the spatial representation (e.g., the page layout of a graphic design) is chosen, whereas it is scientific visualization when the spatial representation is given. From an academic point of view, this representation can be considered a mapping between the original data (usually numerical) and graphic elements (for example, lines or points in a chart). The mapping determines how the a ...
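The mapping between data values and graphic elements can be sketched as a pair of linear scales, one per axis, that turn a short time series into point coordinates and then into an SVG polyline. The 200 x 100 canvas, the values, and the `linear_scale` helper are assumptions for illustration rather than part of any particular charting library.

```python
def linear_scale(domain, range_):
    """Return a function mapping values in domain (d0, d1) linearly onto range_ (r0, r1)."""
    (d0, d1), (r0, r1) = domain, range_
    if d0 == d1:
        raise ValueError("domain must have non-zero width")
    return lambda v: r0 + (v - d0) * (r1 - r0) / (d1 - d0)

# Hypothetical daily time series drawn on a 200 x 100 pixel canvas.
values = [3, 7, 5, 10, 8]
x = linear_scale((0, len(values) - 1), (0, 200))         # time index -> horizontal pixels
y = linear_scale((min(values), max(values)), (100, 0))   # value -> vertical pixels (SVG y grows downward)
points = [(x(i), y(v)) for i, v in enumerate(values)]

# Graphic element: the points become a single line in an SVG document.
polyline = " ".join(f"{px:.0f},{py:.0f}" for px, py in points)
print(f'<svg width="200" height="100"><polyline points="{polyline}" fill="none" stroke="black"/></svg>')
```

The inverted output range for `y` reflects the common screen convention of placing the origin at the top-left corner; swapping it would draw the chart upside down.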

Descriptive Statistics

A descriptive statistic (in the count noun sense) is a summary statistic that quantitatively describes or summarizes features of a collection of information, while descriptive statistics (in the mass noun sense) is the process of using and analysing those statistics. Descriptive statistics is distinguished from inferential statistics (or inductive statistics) by its aim to summarize a sample, rather than use the data to learn about the population that the sample is thought to represent. This generally means that descriptive statistics, unlike inferential statistics, is not developed on the basis of probability theory, and frequently consists of nonparametric statistics. Even when a data analysis draws its main conclusions using inferential statistics, descriptive statistics are generally also presented. For example, in papers reporting on human subjects, typically a table is included giving the overall sample size, sample sizes in important subgroups (e.g., for each treatmen ...
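A summary table of the kind described above (overall and per-subgroup sample sizes plus simple summaries) can be produced with Python's standard `statistics` module. The group names and measurements are hypothetical. Note that `stdev` uses the sample (n - 1) denominator, whereas `pstdev` would treat the data as a whole population.

```python
from statistics import mean, median, stdev

# Hypothetical measurements for two treatment groups.
groups = {
    "control":   [5.1, 4.8, 5.6, 5.0, 4.9],
    "treatment": [6.2, 5.9, 6.8, 6.1],
}

def describe(sample):
    """Summarize a sample without making any inference about a population."""
    return {
        "n": len(sample),
        "mean": round(mean(sample), 2),
        "median": median(sample),
        "sd": round(stdev(sample), 2),  # sample standard deviation (n - 1 denominator)
        "min": min(sample),
        "max": max(sample),
    }

overall = [x for sample in groups.values() for x in sample]
for name, sample in [*groups.items(), ("overall", overall)]:
    print(f"{name:<10}", describe(sample))
```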

Data Model

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner. The term data model can refer to two distinct but closely related concepts. Sometimes it refers to an abstract formalization of the objects and relationships found in a particular application domain: for example the customers, products, and orders found in a manufacturing organization. At other times it refers to the set of concepts used in defining such formalizations: for example concepts such as entities, attributes, relations, or tables. So the "data model" of a banking application may be defined using the entity-relationship "data model". This article uses the term in both senses. A data model explicitly determines t ...
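The car example above can be written as a small data model in the first sense (a formalization of one domain) built from concepts of the second sense: entities, attributes, and a relationship. The sketch uses Python dataclasses; the class names, the `vin` identifier, and the fixed set of sizes are assumptions, not a standard schema.

```python
from dataclasses import dataclass
from enum import Enum

class Size(Enum):
    """Attribute domain: car size restricted to a fixed set of values (assumed)."""
    SMALL = "small"
    MEDIUM = "medium"
    LARGE = "large"

@dataclass(frozen=True)
class Person:
    """Entity: a potential car owner."""
    person_id: int
    name: str

@dataclass(frozen=True)
class Car:
    """Entity: a car composed of other data elements."""
    vin: str       # hypothetical identifier for the car
    color: str     # attribute
    size: Size     # attribute with a constrained domain
    owner: Person  # relationship: each car has exactly one owner

alice = Person(1, "Alice")
car = Car(vin="HYPOTHETICAL-001", color="red", size=Size.MEDIUM, owner=alice)
print(car.owner.name, car.color, car.size.value)
```

Making the classes frozen is a design choice for the sketch. A relational implementation would more typically store the owner as a foreign key (for example, a `person_id` column) than embed the whole object.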

Relationship Of Data, Information And Intelligence

Relationship most often refers to:
* Family relations and relatives: consanguinity
* Interpersonal relationship, a strong, deep, or close association or acquaintance between two or more people
* Correlation and dependence, relationships in mathematics and statistics between two variables or sets of data
* Semantic relationship, an ontology component
* Romance (love), a connection between two people driven by love and/or sexual attraction

Relationship or Relationships may also refer to:

Arts and media
* "Relationship" (song), by Young Thug featuring Future
* "Relationships", an episode of the British TV series As Time Goes By
* The Relationship, an American rock band
** The Relationship (album), their 2010 album
* The Relationships, an English band who played at the 2009 Truck Festival
* Relationships, a 1994 album by BeBe & CeCe Winans
* Relationships, a 2001 album by Georgie Fame
* "Relationship", a song by Lakeside on the 1987 album Power
* "Relationsh ...

Information Systems Technician

An information systems technician is a technician in an industrial occupation whose responsibility is maintaining communications and computer systems. Information systems technicians operate and maintain information systems, facilitating system utilization. In many companies, these technicians assemble data sets and other details needed to build databases. This includes data management, procedure writing, writing job setup instructions, and performing program librarian functions. Information systems technicians assist in designing and coordinating the development of integrated information system databases. They also help maintain Internet and intranet websites, deciding how information is presented and creating digital multimedia and presentations using software and related equipment. Information systems technicians install and maintain multi-platform networking computer environments, a variety of data networks, and a diverse set of teleco ...

Data Custodian

In data governance groups, responsibilities for data management are increasingly divided between the business process owners and information technology (IT) departments. Two functional titles commonly used for these roles are data steward and data custodian. Data stewards are commonly responsible for data content, context, and associated business rules. Data custodians are responsible for the safe custody, transport, and storage of the data and for the implementation of business rules (Policies, Regulations and Rules: Data Management Procedures - REG 08.00.3 - Information Technology, NC State University, http://www.ncsu.edu/policies/informationtechnology/REG08.00.3.php). Simply put, data stewards are responsible for what is stored in a data field, while data custodians are responsible for the technical environment and database structure. Common job titles for data custodians are database administrator (DBA), data modeler, and ETL developer. A data custodia ...

Statistical Unit

In statistics, a unit is one member of a set of entities being studied. It is the main source for the mathematical abstraction of a "random variable". Common examples of a unit would be a single person, animal, plant, manufactured item, or country that belongs to a larger collection of such entities being studied.

Units are often referred to as being either experimental units, sampling units, or units of observation:
* An "experimental unit" is typically thought of as one member of a set of objects that are initially equal, with each object then subjected to one of several experimental treatments. Put simply, it is the smallest entity to which a treatment is applied.
* A "sampling unit" is typically thought of as an object that has been sampled from a statistical population. This term is commonly used in opinion polling and survey sampling.

For example, in an experiment on educational methods, methods may be applied to classrooms of students. This ...
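The classroom example can be sketched as follows: the teaching method is randomized over classrooms, so each classroom is one experimental unit even though scores are recorded for individual students. The room names, scores, method labels, and the choice to summarize each classroom by its mean score are hypothetical; this is one simple way to keep the analysis at the level of the experimental unit, not the only valid design.

```python
import random
from statistics import mean

# Hypothetical post-study test scores, grouped by classroom.
classrooms = {
    "room_a": [72, 75, 80],
    "room_b": [68, 70, 74],
    "room_c": [85, 82, 88],
    "room_d": [77, 79, 73],
}

def assign_treatments(units, treatments, seed=0):
    """Randomly assign one treatment to each experimental unit, in balanced numbers."""
    rng = random.Random(seed)
    order = list(units)
    rng.shuffle(order)
    return {unit: treatments[i % len(treatments)] for i, unit in enumerate(order)}

assignment = assign_treatments(classrooms, ["method_1", "method_2"])

# One summary value per experimental unit: all students in a room share its treatment.
unit_means = {room: mean(scores) for room, scores in classrooms.items()}
for method in ("method_1", "method_2"):
    rooms = sorted(r for r, m in assignment.items() if m == method)
    print(method, rooms, round(mean(unit_means[r] for r in rooms), 2))
```

Treating each student as an independent experimental unit here would overstate the effective sample size, since the treatment was only ever applied at the classroom level.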

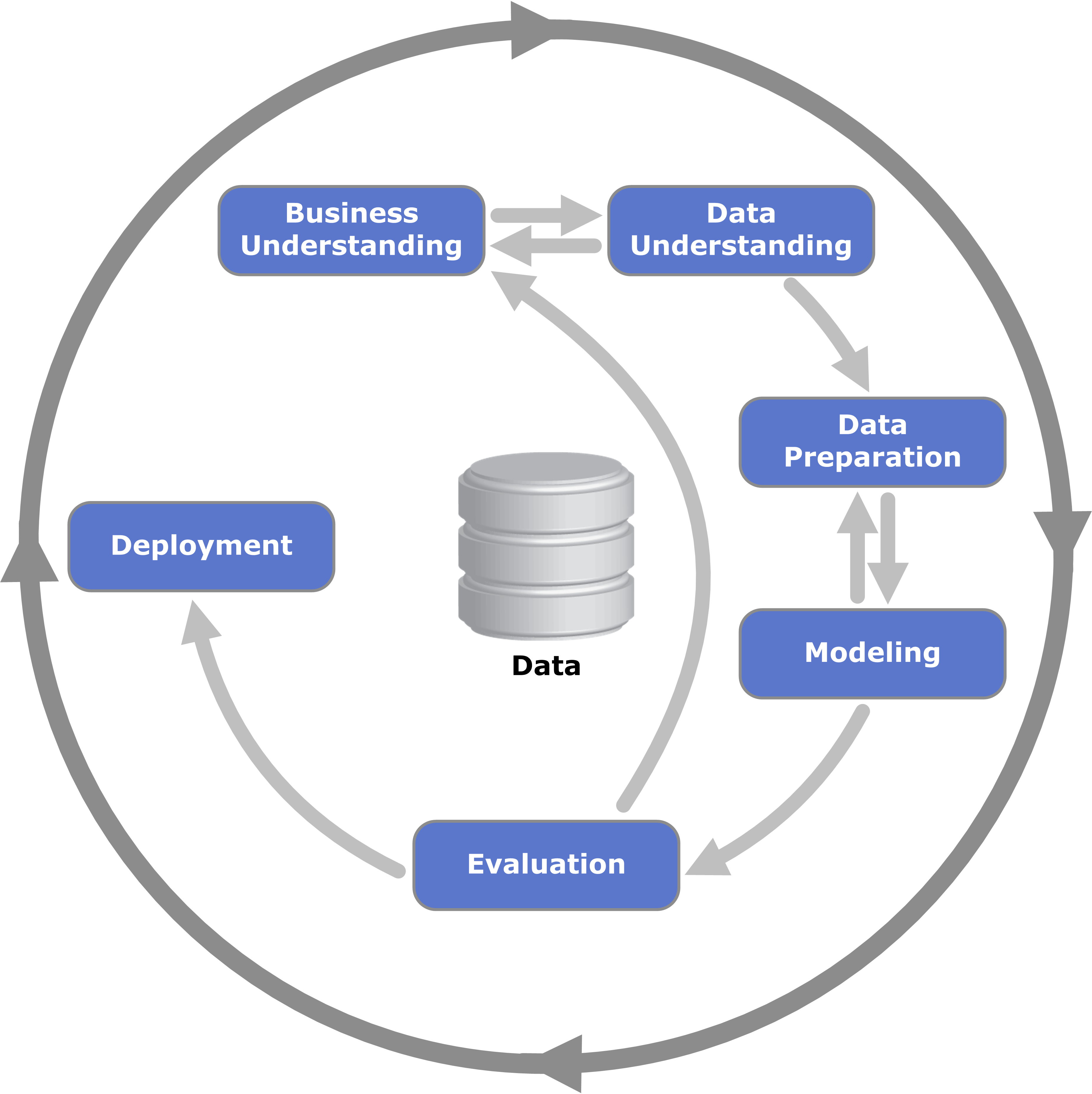

Cross-industry Standard Process For Data Mining

Cross-industry standard process for data mining, known as CRISP-DM (Shearer C., The CRISP-DM model: the new blueprint for data mining, J Data Warehousing (2000); 5:13-22), is an open standard process model that describes common approaches used by data mining experts. It is often described as the most widely used analytics model. In 2015, IBM released a new methodology called Analytics Solutions Unified Method for Data Mining/Predictive Analytics (also known as ASUM-DM), which refines and extends CRISP-DM. CRISP-DM was conceived in 1996 and became a European Union project under the ESPRIT funding initiative in 1997. The project was led by five companies: Integral Solutions Ltd (ISL), Teradata, Daimler AG, NCR Corporation, and OHRA, an insurance company. This core consortium brought different experiences to the project. ISL was later acquired and merged into SPSS. The computer giant NCR Corporation produced the Teradata data warehouse ...