
Cumulative Accuracy Profile

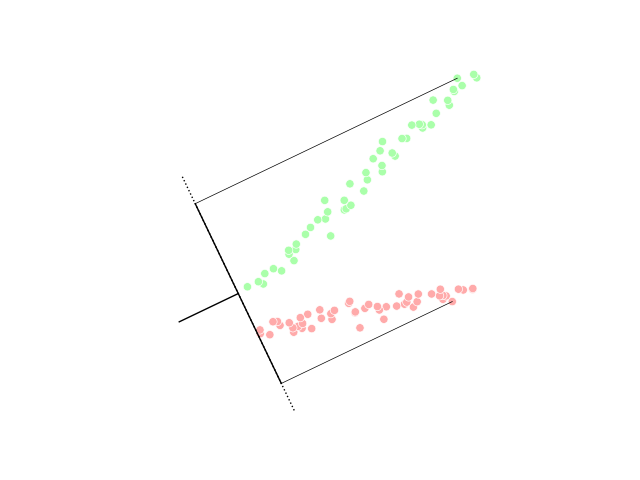

A cumulative accuracy profile (CAP) is a concept used in data science to visualize discrimination power. The CAP of a model plots the cumulative number of positive outcomes along the y-axis against the corresponding cumulative number of a classifying parameter along the x-axis. The resulting plot is called a CAP curve. The CAP is distinct from the receiver operating characteristic (ROC) curve, which plots the true-positive rate against the false-positive rate. CAPs are used in robustness evaluations of classification models.

Analyzing a CAP

A cumulative accuracy profile can be used to evaluate a model by comparing its curve to both the 'perfect' curve and a randomized curve. A good model will have a CAP between the p ...
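As a minimal sketch of how a CAP curve and its comparison with the 'perfect' and random curves might be computed, the Python below ranks observations by a model score (assumed: a higher score means the model considers the observation more likely to be positive), accumulates the share of positives captured, and summarises the result with the accuracy ratio: the area between the model's CAP and the random diagonal, divided by the same area for the perfect model. The function names `cap_curve`, `area_under` and `accuracy_ratio` are hypothetical, and the trapezoidal area is one reasonable choice rather than a prescribed method.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cap_curve(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Return CAP points (fraction of population, fraction of positives captured).

    Assumption: a higher score means "more likely positive". Ties keep their
    input order, because Python's sort is stable even with reverse=True.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    n = len(labels)
    total_pos = sum(labels)
    if n == 0 or total_pos == 0:
        raise ValueError("need at least one observation and one positive outcome")
    # Rank observations from the highest score to the lowest.
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    points: List[Point] = [(0.0, 0.0)]
    captured = 0
    for k, i in enumerate(order, start=1):
        captured += labels[i]
        points.append((k / n, captured / total_pos))
    return points


def area_under(points: Sequence[Point]) -> float:
    """Trapezoidal area under a piecewise-linear curve."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def accuracy_ratio(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Model's area above the random diagonal, relative to the perfect model's."""
    points = cap_curve(scores, labels)  # also validates the inputs
    share_pos = sum(labels) / len(labels)
    if share_pos >= 1.0:
        raise ValueError("need at least one negative outcome")
    model_gain = area_under(points) - 0.5  # the random CAP is the diagonal, area 0.5
    # The perfect CAP rises linearly to 1 at x = share_pos and then stays flat,
    # so its area is 1 - share_pos / 2 and its gain over random is (1 - share_pos) / 2.
    perfect_gain = (1.0 - share_pos) / 2
    return model_gain / perfect_gain


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.6]
    print(cap_curve(scores, [1, 0, 1, 0]))
    print(accuracy_ratio(scores, [1, 1, 0, 0]))  # perfect ranking gives 1.0
```

Under this construction a model that ranks every positive first scores 1, a random ranking scores about 0, and a ranking that puts every positive last scores -1.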

Data Science

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms and systems to extract or extrapolate knowledge and insights from noisy, structured and unstructured data, and apply knowledge from data across a broad range of application domains. Data science is related to data mining, machine learning, big data, computational statistics and analytics. Data science is a "concept to unify statistics, data analysis, informatics, and their related methods" in order to "understand and analyse actual phenomena" with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, information science, and domain knowledge. However, data science is different from computer science and information science. Turing Award winner Jim Gray imagined data science as a "fourth paradigm" of science (empirical, theoretical, computational, and now data-driven) and asserted that "everything about sc ...

Discrimination Power

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification. LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (i.e. the class label). Logistic regression and probit regression are more similar to LDA than ANOVA is, as they also explain a catego ...
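For two classes, the linear combination found by Fisher's linear discriminant has a closed form: the projection vector w is proportional to S_W^{-1}(m_1 - m_0), where m_0 and m_1 are the class means and S_W is the within-class scatter matrix. The NumPy sketch below computes that direction and then classifies by comparing each projection with the midpoint of the two projected class means. That midpoint rule is an assumption made here for simplicity (it ignores class priors and is not the full LDA decision rule), and the names `fisher_discriminant` and `predict` are hypothetical.

```python
from typing import Tuple

import numpy as np


def fisher_discriminant(X0: np.ndarray, X1: np.ndarray) -> Tuple[np.ndarray, float]:
    """Return Fisher's projection vector w and a midpoint threshold.

    X0 and X1 hold the samples of class 0 and class 1, one row per sample.
    """
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: summed outer products of deviations from each class mean.
    s_w = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    # w is proportional to S_W^{-1} (m1 - m0); solve() avoids forming the inverse.
    w = np.linalg.solve(s_w, m1 - m0)
    # Assumed decision rule: midpoint of the two projected class means.
    threshold = 0.5 * float(w @ m0 + w @ m1)
    return w, threshold


def predict(X: np.ndarray, w: np.ndarray, threshold: float) -> np.ndarray:
    """Label a row 1 if its projection onto w exceeds the threshold, else 0."""
    return (X @ w > threshold).astype(int)


if __name__ == "__main__":
    X0 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    X1 = X0 + 4.0  # the same cloud of points, shifted away from class 0
    w, t = fisher_discriminant(X0, X1)
    print(w, t)
    print(predict(np.vstack([X0, X1]), w, t))
```

Because Fisher's criterion fixes w only up to scale, other normalisations of w are equally valid; the threshold simply scales with it.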

Receiver Operating Characteristic

A receiver operating characteristic curve, or ROC curve, is a graphical plot that illustrates the diagnostic ability of a binary classifier system as its discrimination threshold is varied. The method was originally developed for operators of military radar receivers starting in 1941, which led to its name. The ROC curve is created by plotting the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings. The true-positive rate is also known as sensitivity, recall, or probability of detection. The false-positive rate is also known as the probability of false alarm and can be calculated as (1 − specificity). The ROC can also be thought of as a plot of the power as a function of the Type I error of the decision rule (when performance is calculated from only a sample of the population, these rates can be thought of as estimators of those quantities). The ROC curve is thus the sensitivity or recall as a function of fall-out. In general, if the ...
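The threshold sweep that produces an ROC curve can be sketched in plain Python. Each distinct score is tried as a threshold, under the assumed rule "predict positive when score >= threshold"; at each one the true and false positives are counted and the point (FPR, TPR) is recorded. The quadratic loop is chosen for readability rather than speed, and the function name `roc_points` is hypothetical.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """Return (false-positive rate, true-positive rate) pairs, one per threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both positive and negative examples")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        # TPR = TP / P is the sensitivity; FPR = FP / N equals 1 - specificity.
        points.append((fp / negatives, tp / positives))
    return points


if __name__ == "__main__":
    print(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```

Lowering the threshold can only add predicted positives, so both rates are non-decreasing along the returned list, and the final threshold (the lowest score) always yields the point (1, 1).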

True-positive Rate

In medicine and statistics, sensitivity and specificity mathematically describe the accuracy of a test that reports the presence or absence of a medical condition. If individuals who have the condition are considered "positive" and those who do not are considered "negative", then sensitivity is a measure of how well a test can identify true positives and specificity is a measure of how well a test can identify true negatives:

* Sensitivity (true positive rate) is the probability of a positive test result, conditioned on the individual truly being positive.
* Specificity (true negative rate) is the probability of a negative test result, conditioned on the individual truly being negative.

If the true status of the condition cannot be known, sensitivity and specificity can be defined relative to a "gold standard test" which is assumed correct. For all testing, both diagnoses and screening, there is usually a trade-off between sensitivity and specificity, such that higher sensiti ...
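When the true status is known for a sample (or taken from a gold standard test), the two conditional probabilities are estimated as ratios of confusion-matrix counts: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The sketch below is illustrative only; the `Confusion` class, the helper names, and the ten-person example data are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Confusion:
    tp: int  # condition present, test positive
    fn: int  # condition present, test negative
    tn: int  # condition absent, test negative
    fp: int  # condition absent, test positive


def confusion(actual: Sequence[int], predicted: Sequence[int]) -> Confusion:
    """Count outcomes, treating 1 as "positive" and 0 as "negative"."""
    pairs = list(zip(actual, predicted))
    return Confusion(
        tp=sum(1 for a, p in pairs if a and p),
        fn=sum(1 for a, p in pairs if a and not p),
        tn=sum(1 for a, p in pairs if not a and not p),
        fp=sum(1 for a, p in pairs if not a and p),
    )


def sensitivity(c: Confusion) -> float:
    """Estimate P(test positive | truly positive) as TP / (TP + FN)."""
    return c.tp / (c.tp + c.fn)


def specificity(c: Confusion) -> float:
    """Estimate P(test negative | truly negative) as TN / (TN + FP)."""
    return c.tn / (c.tn + c.fp)


if __name__ == "__main__":
    actual = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]     # hypothetical true status
    predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]  # hypothetical test results
    c = confusion(actual, predicted)
    print(c, sensitivity(c), specificity(c))
```

With these made-up results the counts are TP = 3, FN = 1, TN = 4 and FP = 2, so sensitivity is 3/4 and specificity is 4/6; the false-positive rate is then 1 − 4/6 = 2/6.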

