Correspondence Problem

The correspondence problem refers to the problem of ascertaining which parts of one image correspond to which parts of another image, where the differences arise from movement of the camera, the elapse of time, and/or movement of objects in the photos. Correspondence is a fundamental problem in computer vision: the influential computer vision researcher Takeo Kanade famously said that the three fundamental problems of computer vision are “Correspondence, correspondence, and correspondence!” Indeed, correspondence is arguably the key building block in many related applications: optical flow (in which the two images are subsequent in time), dense stereo vision (in which the two images come from a stereo camera pair), structure from motion (SfM) and visual SLAM (in which the images come from different but partially overlapping views of a scene), and cross-scene correspondence (in which the images come from entirely different scenes). Given two or more images of the same 3D scene, ...
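One classical way to find the correspondence of a single point is to compare a small window around it with every candidate window in the other image and keep the most similar one. The sketch below does this with the sum of squared differences (SSD) as the similarity measure. It is a minimal brute-force illustration on plain Python lists; the function names and toy images are assumptions, not a standard API. Practical systems restrict the search (for example to an epipolar line) and use more robust measures.

```python
def ssd(a, b, ay, ax, by, bx, r):
    """Sum of squared differences between the (2r+1)x(2r+1) patch centred at
    (ay, ax) in image a and the one centred at (by, bx) in image b."""
    total = 0.0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            d = a[ay + dy][ax + dx] - b[by + dy][bx + dx]
            total += d * d
    return total


def match_patch(a, b, y, x, r=1):
    """Exhaustively search image b for the patch with the lowest SSD against the
    patch centred at (y, x) in image a; (y, x) must lie at least r pixels from
    the border of a. Returns the (row, col) of the best match in b."""
    best_cost, best_pos = float("inf"), None
    for by in range(r, len(b) - r):
        for bx in range(r, len(b[0]) - r):
            cost = ssd(a, b, y, x, by, bx, r)
            if cost < best_cost:
                best_cost, best_pos = cost, (by, bx)
    return best_pos


# Toy example: image b is image a shifted two columns to the right.
a = [[0] * 9 for _ in range(7)]
a[2][3], a[3][3], a[3][4] = 5, 9, 2          # a small, distinctive blob
b = [[row[j - 2] if j >= 2 else 0 for j in range(len(row))] for row in a]
print(match_patch(a, b, 3, 3))                # prints (3, 5): the blob moved from column 3 to 5
```

The exhaustive double loop makes the cost grow with the size of the search area, which is one reason rectification (reducing the search to a single row) matters in stereo.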

Computer Vision

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Image Rectification

Image rectification is a transformation process used to project images onto a common image plane. This process has several degrees of freedom, and there are many strategies for transforming images to the common plane. Image rectification is used in computer stereo vision to simplify the problem of finding matching points between images (i.e. the correspondence problem), and in geographic information systems (GIS) to merge images taken from multiple perspectives into a common map coordinate system. Computer stereo vision takes two or more images, with known relative camera positions, that show an object from different viewpoints. For each pixel it then determines the corresponding scene point's depth (i.e. distance from the camera) by first finding matching pixels (i.e. pixels showing the same scene point) in the other image(s) and then applying triangulation to the found matches to determine their depth. Finding matches in stereo ...
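Once a pair is rectified, a match lies on the same image row in both views, and triangulation reduces to similar triangles: the horizontal offset (disparity) d = x_L - x_R gives the depth Z = f·B / d, where f is the focal length in pixels and B the baseline. The function below is a sketch assuming an ideal rectified pair whose cameras share the focal length and principal point; its name, parameters, and calibration values are hypothetical.

```python
def triangulate_rectified(x_left, x_right, y, focal_px, baseline_m, cx, cy):
    """Triangulate a match found on image row y of a rectified stereo pair.

    Assumes both cameras share the focal length focal_px (in pixels) and the
    principal point (cx, cy), and that the right camera is displaced by
    baseline_m along the left camera's x-axis. Returns (X, Y, Z) in metres,
    expressed in the left camera's frame.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("a point in front of both cameras has positive disparity")
    Z = focal_px * baseline_m / disparity   # depth from similar triangles
    X = (x_left - cx) * Z / focal_px        # back-project the left pixel at depth Z
    Y = (y - cy) * Z / focal_px
    return X, Y, Z


# Hypothetical calibration: f = 700 px, B = 12 cm, principal point (320, 240).
# A disparity of 42 px then corresponds to a depth of about 2 m.
print(triangulate_rectified(362.0, 320.0, 240.0, 700.0, 0.12, 320.0, 240.0))
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more depth accuracy for distant points than for near ones.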

Scale-invariant Feature Transform

The scale-invariant feature transform (SIFT) is a computer vision algorithm to detect, describe, and match local features in images, invented by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife, and match moving. SIFT keypoints of objects are first extracted from a set of reference images and stored in a database. An object is recognized in a new image by individually comparing each feature from the new image to this database and finding candidate matching features based on the Euclidean distance between their feature vectors. From the full set of matches, subsets of keypoints that agree on the object and its location, scale, and orientation in the new image are identified to single out good matches. The determination of consistent clusters is performed rapidly by using an efficient hash table implementation of the generalised Hough transform.
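The matching step described above (comparing each new feature against the database by Euclidean distance) can be sketched as brute-force nearest-neighbour search. The sketch adds a distance-ratio test of the kind Lowe proposed for discarding ambiguous matches: a match is kept only when the nearest neighbour is clearly closer than the second nearest. The 0.8 default, the function name `match_descriptors`, and the toy 4-dimensional vectors are assumptions of this example (real SIFT descriptors are 128-dimensional), and the Hough-transform clustering step is not shown.

```python
import math


def match_descriptors(query, database, ratio=0.8):
    """Brute-force nearest-neighbour matching with a distance-ratio test.

    Returns (query_index, database_index) pairs whose nearest neighbour is
    closer than `ratio` times the distance to the second-nearest one.
    """
    matches = []
    if len(database) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, q in enumerate(query):
        # Euclidean distance from this query descriptor to every database entry.
        dists = sorted((math.dist(q, d), di) for di, d in enumerate(database))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


database = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
query = [[0.9, 0.1, 0, 0],   # clearly closest to entry 0: accepted
         [0.5, 0.5, 0, 0]]   # equally close to entries 0 and 1: rejected as ambiguous
print(match_descriptors(query, database))  # prints [(0, 0)]
```

Brute force is quadratic in the number of features; large databases typically use approximate nearest-neighbour search instead.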

Birchfield–Tomasi Dissimilarity

In computer vision, the Birchfield–Tomasi dissimilarity is a pixelwise image dissimilarity measure that is robust with respect to sampling effects. In the comparison of two image elements, it fits the intensity of one pixel to the linearly interpolated intensity around a corresponding pixel in the other image (Birchfield and Tomasi, 1998). It is used as a dissimilarity measure in stereo matching, where a one-dimensional search for correspondences is performed to recover a dense disparity map from a stereo image pair (Hirschmüller and Scharstein, 2007; Morales et al., 2013). When performing pixelwise image matching, the measure of dissimilarity between pairs of pixels from different images is affected by differences in image acquisition such as illumination bias and noise. Even when assuming no difference in these aspects between an image pair, additional inconsistencies are introduced by the pixel sampling process, because each pixel is a sample obtained by integrating ...
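Following the description above, the measure can be sketched for one scanline. The intensity of one pixel is compared with the range spanned by the other image's pixel and its linear interpolations half a pixel to either side; the symmetric measure takes the smaller of the two one-sided values. This follows the formulation commonly attributed to Birchfield and Tomasi (1998); clamping at the row ends and the function names are assumptions of this sketch.

```python
def bt_one_sided(i1, x1, i2, x2):
    """How far i1[x1] falls outside the range of the linearly interpolated
    scanline i2 within half a pixel of x2. Border pixels are clamped
    (an assumption of this sketch)."""
    centre = i2[x2]
    left = 0.5 * (centre + i2[max(x2 - 1, 0)])              # i2 at x2 - 1/2
    right = 0.5 * (centre + i2[min(x2 + 1, len(i2) - 1)])  # i2 at x2 + 1/2
    i_min, i_max = min(left, right, centre), max(left, right, centre)
    return max(0.0, i1[x1] - i_max, i_min - i1[x1])


def bt_dissimilarity(left_row, xl, right_row, xr):
    """Symmetric Birchfield-Tomasi dissimilarity of left_row[xl] and right_row[xr]."""
    return min(bt_one_sided(left_row, xl, right_row, xr),
               bt_one_sided(right_row, xr, left_row, xl))


# An intensity edge sampled half a pixel apart in the two scanlines.
left_row = [0, 0, 10, 10, 10]
right_row = [0, 5, 10, 10, 10]
print(abs(left_row[1] - right_row[1]))               # prints 5: naive absolute difference
print(bt_dissimilarity(left_row, 1, right_row, 1))   # prints 0.0: sampling effect absorbed
```

The naive difference penalises a correct match just because the edge fell between samples; the interpolated range absorbs that effect while still penalising genuinely different intensities.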

Image Registration

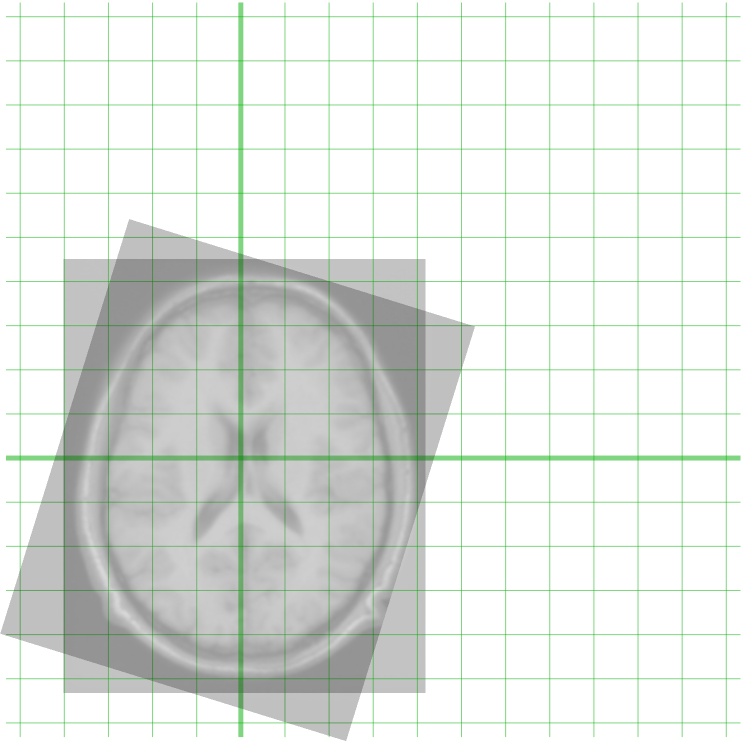

Image registration is the process of transforming different sets of data into one coordinate system. The data may be multiple photographs, or data from different sensors, times, depths, or viewpoints. It is used in computer vision, medical imaging, military automatic target recognition, and compiling and analyzing images and data from satellites. Registration is necessary in order to be able to compare or integrate the data obtained from these different measurements. Image registration or image alignment algorithms can be classified into intensity-based and feature-based methods (A. Ardeshir Goshtasby, 2-D and 3-D Image Registration for Medical, Remote Sensing, and Industrial Applications, Wiley Press, 2005). One of the images is referred to as the target, fixed, or sensed image, and the others are referred to as the moving or source images. Image registration involves spatially transforming the source/moving image(s) ...
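For feature-based registration, once corresponding control points are known, a transform from the moving image to the fixed image can be estimated by least squares. The sketch below fits a 2-D affine transform (six parameters) by solving the normal equations. The affine model, the function names, and the toy points are assumptions for illustration; real pipelines usually add robust estimation to cope with wrong matches and may use other transform models.

```python
def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, v):
    """Solve the 3x3 system m p = v with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate control points (e.g. all collinear)")
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]          # replace one column by the right-hand side
        sol.append(_det3(mc) / d)
    return sol


def fit_affine(src, dst):
    """Least-squares affine transform (a, b, c, d, e, f) with
    u = a*x + b*y + c and v = d*x + e*y + f, mapping src (moving) points onto
    dst (fixed) points. Needs at least three non-collinear correspondences."""
    m = [[0.0] * 3 for _ in range(3)]   # normal matrix: sum of [x, y, 1]^T [x, y, 1]
    rhs_u, rhs_v = [0.0] * 3, [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                m[i][j] += row[i] * row[j]
            rhs_u[i] += row[i] * u
            rhs_v[i] += row[i] * v
    return tuple(_solve3(m, rhs_u) + _solve3(m, rhs_v))


def apply_affine(params, point):
    a, b, c, d, e, f = params
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)


# Control points in the moving image and their (exact) positions in the fixed image.
true_params = (1.1, -0.2, 3.0, 0.3, 0.9, -1.0)
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (2.0, 3.0)]
dst = [apply_affine(true_params, p) for p in src]
print(fit_affine(src, dst))   # recovers true_params up to rounding
```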

Epipolar Geometry

Epipolar geometry is the geometry of stereo vision. When two cameras view a 3D scene from two distinct positions, there are a number of geometric relations between the 3D points and their projections onto the 2D images that lead to constraints between the image points. These relations are derived based on the assumption that the cameras can be approximated by the pinhole camera model. The figure below depicts two pinhole cameras looking at point X. In real cameras, the image plane is actually behind the focal center and produces an image that is symmetric about the focal center of the lens. Here, however, the problem is simplified by placing a virtual image plane in front of the focal center (i.e. the optical center) of each camera lens, producing an image not transformed by the symmetry. OL and OR represent the centers of symmetry of the two cameras' lenses. X represents the point of interest ...
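A small numerical check of the pinhole relations above: all 3D points on the ray from OL through X project to a single pixel in the left image, while their projections in the right image fall on one line (the epipolar line) passing through the right epipole, which is the image of OL in the right camera. The camera parameters and helper names are hypothetical, and both rotations are the identity to keep the arithmetic short.

```python
K = [[100.0, 0.0, 50.0],
     [0.0, 100.0, 50.0],
     [0.0, 0.0, 1.0]]                       # shared intrinsics (hypothetical values)
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_LEFT = [0.0, 0.0, 0.0]    # left camera centre OL at the world origin
T_RIGHT = [-1.0, 0.0, 0.5]  # right camera centre OR = (1, 0, -0.5); t = -R OR


def project(K, R, t, X):
    """Pinhole projection x ~ K (R X + t), returned as pixel coordinates (u, v)."""
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    u, v, w = (sum(K[i][j] * Xc[j] for j in range(3)) for i in range(3))
    return (u / w, v / w)


def cross2(o, p, q):
    """z-component of (p - o) x (q - o); zero when o, p and q are collinear."""
    return (p[0] - o[0]) * (q[1] - o[1]) - (p[1] - o[1]) * (q[0] - o[0])


# Epipole in the right image: the projection of the left camera centre OL.
e_right = project(K, IDENTITY, T_RIGHT, [0.0, 0.0, 0.0])

# Every point on the ray OL -> X projects to the same pixel in the left image ...
X = [0.3, 0.2, 4.0]
ray = [[s * c for c in X] for s in (0.5, 1.0, 2.0, 3.0)]
left_images = [project(K, IDENTITY, T_LEFT, P) for P in ray]
# ... but to different pixels on one line (the epipolar line) through e_right.
right_images = [project(K, IDENTITY, T_RIGHT, P) for P in ray]
print(e_right)  # prints (-150.0, 50.0)
```

This is why a match for a left-image pixel only needs to be searched for along a line in the right image.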

JCBB

Joint compatibility branch and bound (JCBB) is an algorithm in computer vision and robotics commonly used for data association in simultaneous localization and mapping (SLAM), the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. JCBB measures the joint compatibility of a set of pairings, which lets it reject spurious matchings; it is hence known to be robust in complex environments.
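The text only states what JCBB does, so the following is a simplified sketch under explicit assumptions: every measurement and predicted feature is a scalar, the predicted features have a known joint covariance P (correlated, for example, through shared pose uncertainty), each measurement adds independent noise of variance r, and the joint test uses 95% chi-square thresholds tabulated for up to six pairings. Names such as `jcbb` and `compatible` are hypothetical. The search branches over the feature assigned to each measurement, keeps only hypotheses whose joint innovation passes the chi-square test, and prunes the "leave unpaired" branch when it cannot beat the best hypothesis found so far.

```python
# 95% chi-square quantiles for 1..6 degrees of freedom (one per scalar pairing).
CHI2_95 = [3.841, 5.991, 7.815, 9.488, 11.070, 12.592]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def compatible(pairs, z, h, P, r):
    """Joint compatibility of pairs [(measurement i, feature j), ...]: the squared
    Mahalanobis distance of the joint innovation must pass the chi-square test."""
    if not pairs:
        return True
    if len(pairs) > len(CHI2_95):
        raise ValueError("this sketch only tabulates thresholds for up to 6 pairings")
    nu = [z[i] - h[j] for i, j in pairs]          # joint innovation vector
    S = [[P[ja][jb] + (r if a == b else 0.0)       # joint innovation covariance
          for b, (_, jb) in enumerate(pairs)]
         for a, (_, ja) in enumerate(pairs)]
    d2 = sum(v * w for v, w in zip(nu, solve(S, nu)))
    return d2 < CHI2_95[len(pairs) - 1]


def jcbb(z, h, P, r):
    """Return best[i] = feature index paired with z[i] (or None), maximising the
    number of pairings subject to joint compatibility."""
    best = [None] * len(z)

    def count(hyp):
        return sum(j is not None for j in hyp)

    def search(hyp):
        nonlocal best
        i = len(hyp)
        if i == len(z):
            if count(hyp) > count(best):
                best = list(hyp)
            return
        paired = [(k, j) for k, j in enumerate(hyp) if j is not None]
        for j in range(len(h)):
            if j in hyp:
                continue  # each feature explains at most one measurement
            if compatible([(i, j)], z, h, P, r) and compatible(paired + [(i, j)], z, h, P, r):
                search(hyp + [j])
        # Bound: leave z[i] unpaired only if the rest could still beat the best so far.
        if count(hyp) + len(z) - i - 1 > count(best):
            search(hyp + [None])

    search([])
    return best


# Toy scenario: three mapped features whose predictions share a common (correlated) error.
h = [0.0, 5.0, 10.0]
P = [[1.0, 0.9, 0.9], [0.9, 1.0, 0.9], [0.9, 0.9, 1.0]]
r = 0.01
print(jcbb([1.0, 6.0, 11.0], h, P, r))   # prints [0, 1, 2]
print(jcbb([1.0, 6.0, 9.0], h, P, r))    # prints [0, 1, None]
```

In the second call the third measurement is individually compatible with feature 2, but pairing it contradicts the common offset shared by the first two pairings, so the joint test rejects it. An individual-compatibility test alone would have accepted all three pairings.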

Fundamental Matrix (Computer Vision)

In computer vision, the fundamental matrix $\mathbf{F}$ is a 3×3 matrix which relates corresponding points in stereo images. In epipolar geometry, with homogeneous image coordinates $\mathbf{x}$ and $\mathbf{x}'$ of corresponding points in a stereo image pair, $\mathbf{F}\mathbf{x}$ describes a line (an epipolar line) on which the corresponding point $\mathbf{x}'$ in the other image must lie. That is, for all pairs of corresponding points, $\mathbf{x}'^{\top}\mathbf{F}\mathbf{x} = 0$. Being of rank two and determined only up to scale, the fundamental matrix can be estimated given at least seven point correspondences. Its seven parameters represent the only geometric information about cameras that can be obtained through point correspondences alone. The term "fundamental matrix" was coined by QT Luong in his influential PhD thesis. It is sometimes also referred to as the "bifocal tensor". As a tensor, it is a two-point tensor in that it is a bilinear form relating points in distinct coordinate systems. The above relation, which defines ...
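The epipolar constraint can be checked directly: F x gives the line on which x′ must lie, and the residual x′ᵀ F x is zero for an exact correspondence. The sketch uses a hypothetical calibrated pair with identity intrinsics and pure translation, for which F coincides with the essential matrix [t]×; it only evaluates a given F and does not estimate F from correspondences (which would need, for example, a seven- or eight-point method). All helper names are assumptions.

```python
import math


def skew(t):
    """Cross-product matrix [t]_x, so that [t]_x v = t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def det3(m):
    """Determinant of a 3x3 matrix; zero for a valid (rank-2) fundamental matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def epipolar_line(F, x):
    """Epipolar line l' = F x in the second image, as (a, b, c) with a*u + b*v + c = 0."""
    return mat_vec(F, x)


def epipolar_residual(F, x, x_prime):
    """Algebraic epipolar constraint x'^T F x; zero for an exact correspondence."""
    return sum(p * l for p, l in zip(x_prime, epipolar_line(F, x)))


def point_line_distance(line, point):
    """Euclidean distance from the homogeneous point (u, v, w) to the line (a, b, c)."""
    a, b, c = line
    u, v, w = point
    return abs(a * u / w + b * v / w + c) / math.hypot(a, b)


# Hypothetical calibrated pair: identity intrinsics, no rotation, and a point X in the
# first camera frame appears at X + t in the second, with t = (1, 0, 0).
# In this special case F equals the essential matrix [t]_x.
F = skew([1.0, 0.0, 0.0])
x = [0.25, 0.1, 1.0]        # image of X = (0.5, 0.2, 2.0) in camera 1
x_prime = [0.75, 0.1, 1.0]  # image of X + t = (1.5, 0.2, 2.0) in camera 2
print(epipolar_line(F, x))              # the horizontal line v = 0.1
print(epipolar_residual(F, x, x_prime))
print(det3(F))
```

The algebraic residual has no geometric units, so in practice the point-to-line distance is often the more interpretable error measure.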

Stereopsis

Binocular vision is seeing with two eyes, which increases the size of the visual field. If the visual fields of the two eyes overlap, binocular depth can be seen. This allows objects to be recognized more quickly, camouflage to be detected, spatial relationships to be perceived more quickly and accurately (stereopsis), and perception to be less susceptible to optical illusions. In medicine, attention is paid to the occurrence, defects, and sharpness of binocular vision; in biology, the occurrence of binocular vision in animals is described. When the left eye (LE) and the right eye (RE) observe two objects X and Y, the following geometric concepts are important. Egocentric distance: the egocentric distance to object X is the distance from the observer to X (in the figure: Dx). Metric depth: the metric depth between two objects X and Y is the difference of the egocentric distances to X and Y. In ...
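The two geometric terms defined above are simple to compute once positions are known. The sketch assumes 3D coordinates in metres and places the observer midway between LE and RE; that choice of reference point, the sign convention for metric depth (positive when Y is farther than X), and the function names are assumptions.

```python
import math


def egocentric_distance(observer, obj):
    """Distance from the observer to an object, e.g. Dx for object X."""
    return math.dist(observer, obj)


def metric_depth(observer, x, y):
    """Difference of the egocentric distances to Y and X (positive when Y is farther)."""
    return egocentric_distance(observer, y) - egocentric_distance(observer, x)


# Hypothetical layout in metres: eyes 6 cm apart, observer placed midway between them.
LE, RE = (-0.03, 0.0, 0.0), (0.03, 0.0, 0.0)
observer = tuple((p + q) / 2 for p, q in zip(LE, RE))
X, Y = (0.0, 0.0, 2.0), (0.0, 0.0, 3.0)
print(egocentric_distance(observer, X), metric_depth(observer, X, Y))  # prints 2.0 1.0
```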

Depth Perception

Depth perception is the ability to perceive distance to objects in the world using the visual system and visual perception. It is a major factor in perceiving the world in three dimensions. Depth sensation is the corresponding term for non-human animals, since although it is known that they can sense the distance of an object, it is not known whether they perceive it in the same way that humans do. Depth perception arises from a variety of depth cues. These are typically classified into binocular cues and monocular cues. Binocular cues are based on the receipt of sensory information in three dimensions from both eyes, whereas monocular cues can be observed with just one eye. Binocular cues include retinal disparity, which exploits parallax, and vergence. Stereopsis is made possible with binocular vision. Monocular cues include relative size (distant objects subtend smaller visual angles than near objects), texture gradient, occlusion, linear perspective, contrast differences, ...
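The relative-size cue rests on simple geometry: an object of extent s at distance d, centred on the line of sight and facing the viewer, subtends a visual angle of 2·atan(s / (2d)), which shrinks as d grows. The sketch assumes such a face-on object; the function name and values are illustrative.

```python
import math


def visual_angle(size, distance):
    """Angle (radians) subtended by an object of the given extent, centred on and
    perpendicular to the line of sight at the given distance."""
    return 2.0 * math.atan2(size / 2.0, distance)


# The same 1.8 m tall person seen at 5 m and at 10 m (hypothetical values).
near = math.degrees(visual_angle(1.8, 5.0))
far = math.degrees(visual_angle(1.8, 10.0))
print(round(near, 1), round(far, 1))  # the nearer view subtends roughly twice the angle
```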

Photogrammetry

Photogrammetry is the science and technology of obtaining reliable information about physical objects and the environment through the process of recording, measuring, and interpreting photographic images and patterns of electromagnetic radiant imagery and other phenomena. While the invention of the method is attributed to Aimé Laussedat, the term "photogrammetry" was coined by a German architect in his 1867 article "Die Photometrographie." There are many variants of photogrammetry. One example is the extraction of three-dimensional measurements from two-dimensional data (i.e. images); for example, the distance between two points that lie on a plane parallel to the photographic image plane can be determined by measuring their distance on the image, if the scale of the image is known. Another is the extraction of accurate color ranges and values representing such quantities as albedo, specular reflection, metallicity ...
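For points on a plane parallel to the image plane, the measurement described above is a single multiplication by the scale. The sketch also derives the scale from the pinhole model (scale 1:N with N = plane distance / focal length), which is an assumption added here rather than something the text states; the function names and numbers are hypothetical.

```python
import math


def scale_denominator(focal_length, plane_distance):
    """N in an image scale of 1:N for a plane parallel to the image plane at
    plane_distance from the projection centre (pinhole model, same units)."""
    return plane_distance / focal_length


def distance_on_plane(p1, p2, scale_den):
    """Distance between two object-plane points from their image coordinates,
    for an image at scale 1:scale_den (result in the image-coordinate units)."""
    return math.dist(p1, p2) * scale_den


# Hypothetical values: 50 mm lens, facade 50 m away, image points measured in metres.
n = scale_denominator(0.050, 50.0)                       # about 1000, i.e. a scale of 1:1000
print(distance_on_plane((0.0, 0.0), (0.003, 0.004), n))  # about 5 m on the facade
```

The relation holds only for points on that one plane; points at other depths have a different scale, which is why general 3D measurement needs multiple views or known geometry.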