
Computerized Classification Test

A computerized classification test (CCT) is a test administered by computer for the purpose of classifying examinees. The most common CCT is a mastery test that classifies examinees as "Pass" or "Fail," but the term also covers tests that classify examinees into more than two categories. While the term may be taken to refer to all computer-administered classification tests, it usually refers to tests that are interactively administered or of variable length, similar to computerized adaptive testing (CAT). Like CAT, variable-length CCTs can accomplish the goal of the test (accurate classification) with a fraction of the number of items used in a conventional fixed-form test. A CCT requires several components:

1. An item bank calibrated with a psychometric model selected by the test designer
2. A starting point
3. An item selection algorithm
4. A termination criterion and scoring procedure

The starting ...

Test (student Assessment)

An examination (exam or evaluation) or test is an educational assessment intended to measure a test-taker's knowledge, skill, aptitude, physical fitness, or classification in many other topics (e.g., beliefs). A test may be administered verbally, on paper, on a computer, or in a predetermined area that requires a test taker to demonstrate or perform a set of skills. Tests vary in style, rigor and requirements. There is no general consensus or invariable standard for test formats and difficulty. Often, the format and difficulty of the test depend on the educational philosophy of the instructor, subject matter, class size, policy of the educational institution, and requirements of accreditation or governing bodies. A test may be administered formally or informally. An example of an informal test is a reading test administered by a parent to a child. A formal test might be a final examination administered by a teacher in a classroom or an IQ te ...

Psychometrics

Psychometrics is a field of study within psychology concerned with the theory and technique of measurement. Psychometrics generally covers specialized fields within psychology and education devoted to testing, measurement, assessment, and related activities. Psychometrics is concerned with the objective measurement of latent constructs that cannot be directly observed. Examples of latent constructs include intelligence, introversion, mental disorders, and educational achievement. The levels of individuals on nonobservable latent variables are inferred through mathematical modeling based on what is observed from individuals' responses to items on tests and scales. Practitioners are described as psychometricians, although not all who engage in psychometric research go by this title. Psychometricians usually possess specific qualifications, such as degrees or certifications, and most are psychologists with advanced graduate training in psychometrics and measurement theory. ...

Hypothesis Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data provide sufficient evidence to reject a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or equivalently by evaluating a p-value computed from the test statistic. Roughly 100 specialized statistical tests are in use and noteworthy.

History: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Choice of null hypothesis: Paul Meehl has argued that the epistemological importance of the choice of null hypothesis has gone largely unacknowledged. When the null hypothesis is predicted by theory, a more precise experiment will be a more severe test of ...
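
The two decision routes mentioned in this entry (comparing a test statistic to a critical value, or evaluating a p-value) can be illustrated with a minimal Python sketch of a one-sided exact binomial test. The function names `upper_tail` and `critical_value`, the counts (15 successes in 20 trials), and the 0.05 significance level are illustrative assumptions, not taken from the source.

```python
import math

def upper_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p): the one-sided p-value for k successes."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def critical_value(n, alpha, p=0.5):
    """Smallest success count whose upper-tail probability is at most alpha."""
    for k in range(n + 1):
        if upper_tail(k, n, p) <= alpha:
            return k
    return n + 1  # no count is extreme enough to reject at this alpha

# Hypothetical data: 15 successes in 20 trials, null hypothesis p = 0.5.
n, k, alpha = 20, 15, 0.05

p_value = upper_tail(k, n)
reject_by_p_value = p_value <= alpha
reject_by_critical_value = k >= critical_value(n, alpha)

print(p_value, reject_by_p_value, reject_by_critical_value)
```

Because the upper-tail probability shrinks as the success count grows, the two rules should always give the same decision in this setting.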

Bayesian Decision Theory

In estimation theory and decision theory, a Bayes estimator or a Bayes action is an estimator or decision rule that minimizes the posterior expected value of a loss function (i.e., the posterior expected loss). Equivalently, it maximizes the posterior expectation of a utility function. An alternative way of formulating an estimator within Bayesian statistics is maximum a posteriori estimation.

Definition: Suppose an unknown parameter $\theta$ is known to have a prior distribution $\pi$. Let $\widehat{\theta} = \widehat{\theta}(x)$ be an estimator of $\theta$ (based on some measurements $x$), and let $L(\theta, \widehat{\theta})$ be a loss function, such as squared error. The Bayes risk of $\widehat{\theta}$ is defined as $E_\pi(L(\theta, \widehat{\theta}))$, where the expectation is taken over the probability distribution of $\theta$: this defines the risk function as a function of $\widehat{\theta}$. An estimator $\widehat{\theta}$ is said to be a Bayes estimator if it minimizes the Bayes risk among all estimators. Equivalently, the estimator whi ...
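
As a rough illustration of minimizing posterior expected loss, the sketch below searches a grid of candidate actions under squared-error loss. It assumes a Bernoulli likelihood, a uniform prior over a grid of parameter values, and hypothetical data (7 successes, 3 failures); none of these choices come from the source. Under squared-error loss the minimizing action is the posterior mean, so the brute-force search should agree with it up to the grid resolution.

```python
def posterior(thetas, prior, successes, failures):
    """Discrete posterior over `thetas` for Bernoulli data (illustrative model)."""
    unnormalized = [
        pr * th**successes * (1 - th) ** failures for th, pr in zip(thetas, prior)
    ]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def posterior_expected_loss(action, thetas, post):
    """Posterior expectation of the squared-error loss (theta - action)^2."""
    return sum(w * (th - action) ** 2 for th, w in zip(thetas, post))

# Assumed setup: uniform prior on a grid of success probabilities.
thetas = [i / 100 for i in range(1, 100)]
prior = [1 / len(thetas)] * len(thetas)
post = posterior(thetas, prior, successes=7, failures=3)

# Brute-force Bayes action: the candidate with the smallest posterior expected loss.
candidates = [i / 1000 for i in range(1001)]
bayes_action = min(candidates, key=lambda a: posterior_expected_loss(a, thetas, post))

# Closed form under squared-error loss: the posterior mean.
posterior_mean = sum(th * w for th, w in zip(thetas, post))

print(bayes_action, posterior_mean)
```

A different loss function would generally give a different Bayes action; only the `posterior_expected_loss` function would need to change in this sketch.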

Quantities Of Information

The mathematical theory of information is based on probability theory and statistics, and measures information with several quantities of information. The choice of logarithmic base in the following formulae determines the unit of information entropy that is used. The most common unit of information is the bit, or more correctly the shannon, based on the binary logarithm. Although "bit" is more frequently used in place of "shannon", its name is not distinguished from the bit as used in data processing to refer to a binary value or stream regardless of its entropy (information content). Other units include the nat, based on the natural logarithm, and the hartley, based on the base 10 or common logarithm. In what follows, an expression of the form $p \log p$ is considered by convention to be equal to zero whenever $p$ is zero. This is justified because $\lim_{p \to 0^+} p \log p = 0$ for any logarithmic base.

Self-information: Shannon derived a measure of information content cal ...
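
The role of the logarithmic base and the zero-probability convention can be seen in a short Python sketch of the standard Shannon entropy, $H = -\sum_i p_i \log p_i$. The function name `entropy` and the example distributions are assumptions made for illustration.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution; `base` sets the unit."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p == 0 are skipped: p * log(p) is taken to be 0 by convention.
    return -sum(p * math.log(p, base) for p in probs if p > 0)

fair_coin = [0.5, 0.5]
certain_outcome = [1.0, 0.0]

bits = entropy(fair_coin, base=2)          # shannons (bits)
nats = entropy(fair_coin, base=math.e)     # nats
hartleys = entropy(fair_coin, base=10)     # hartleys
no_uncertainty = entropy(certain_outcome)  # relies on the 0 log 0 = 0 convention

print(bits, nats, hartleys, no_uncertainty)
```

Changing the base only rescales the result: the value in nats is the value in bits multiplied by the natural logarithm of 2.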

Testlet

Multistage testing is an algorithm-based approach to administering tests. It is very similar to computer-adaptive testing in that items are interactively selected for each examinee by the algorithm, but rather than selecting individual items, groups of items are selected, building the test in stages. These groups are called testlets or panels. While multistage tests could theoretically be administered by a human, the extensive computations required (often using item response theory) mean that multistage tests are administered by computer. The number of stages or testlets can vary. If the testlets are relatively small, such as five items, ten or more could easily be used in a test. Some multistage tests are designed with the ...

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names.

Introduction: A prob ...

Sequential Probability Ratio Test

The sequential probability ratio test (SPRT) is a specific sequential hypothesis test, developed by Abraham Wald and later proven to be optimal by Wald and Jacob Wolfowitz. Neyman and Pearson's 1933 result inspired Wald to reformulate it as a sequential analysis problem. The Neyman–Pearson lemma, by contrast, offers a rule of thumb for when all the data is collected (and its likelihood ratio known). While originally developed for use in quality control studies in the realm of manufacturing, SPRT has been formulated for use in the computerized testing of human examinees as a termination criterion.

Theory: As in classical hypothesis testing, SPRT starts with a pair of hypotheses, say $H_0$ and $H_1$ for the null hypothesis and alternative hypothesis respectively. They must be specified as follows:

$H_0: p = p_0$
$H_1: p = p_1$

The next step is to calculate the cumulative sum of the log-likelihood ratio, $\log \Lambda_i$, as ne ...
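
The cumulative log-likelihood ratio can be sketched in Python for binary (correct/incorrect) observations. The snippet above is cut off before any stopping bounds are given, so this sketch assumes Wald's usual approximate thresholds, $\log\frac{1-\beta}{\alpha}$ and $\log\frac{\beta}{1-\alpha}$, together with a Bernoulli likelihood; the function name `sprt_bernoulli` and all numeric values are hypothetical.

```python
import math

def sprt_bernoulli(observations, p0, p1, alpha=0.05, beta=0.05):
    """Run an SPRT of H0: p = p0 against H1: p = p1 on 0/1 observations.

    Returns (decision, number_of_observations_used, cumulative_log_lr).
    """
    # Assumed stopping bounds (Wald's approximations), not taken from the source.
    upper = math.log((1 - beta) / alpha)   # accept H1 at or above this value
    lower = math.log(beta / (1 - alpha))   # accept H0 at or below this value

    log_lr = 0.0
    n = 0
    for n, x in enumerate(observations, start=1):
        # Add this observation's log-likelihood-ratio increment.
        if x:
            log_lr += math.log(p1 / p0)
        else:
            log_lr += math.log((1 - p1) / (1 - p0))
        if log_lr >= upper:
            return "accept H1", n, log_lr
        if log_lr <= lower:
            return "accept H0", n, log_lr
    # Data ran out before either bound was crossed.
    return "continue", n, log_lr

# Hypothetical response strings: all correct, all incorrect, and mixed.
print(sprt_bernoulli([1] * 20, p0=0.5, p1=0.7))
print(sprt_bernoulli([0] * 20, p0=0.5, p1=0.7))
print(sprt_bernoulli([1, 0], p0=0.5, p1=0.7))
```

Used as a termination criterion, the test stops as soon as one bound is crossed, which is why the first two calls use far fewer than the 20 available observations.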

Computer

A computer is a machine that can be programmed to automatically carry out sequences of arithmetic or logical operations (computation). Modern digital electronic computers can perform generic sets of operations known as programs, which enable computers to perform a wide range of tasks. The term computer system may refer to a nominally complete computer that includes the hardware, operating system, software, and peripheral equipment needed and used for full operation; or to a group of computers that are linked and function together, such as a computer network or computer cluster. A broad range of industrial and consumer products use computers as control systems, including simple special-purpose devices like microwave ovens and remote controls, and factory devices like industrial robots. Computers are at the core of general-purpose devices ...

Specificity (test)

In medicine and statistics, sensitivity and specificity mathematically describe the accuracy of a test that reports the presence or absence of a medical condition. If individuals who have the condition are considered "positive" and those who do not are considered "negative", then sensitivity is a measure of how well a test can identify true positives and specificity is a measure of how well a test can identify true negatives:

* Sensitivity (true positive rate) is the probability of a positive test result, conditioned on the individual truly being positive.
* Specificity (true negative rate) is the probability of a negative test result, conditioned on the individual truly being negative.

If the true status of the condition cannot be known, sensitivity and specificity can be defined relative to a "gold standard test" which is assumed correct. For all testing, both diagnoses and screening, there is usually a trade-off between sensitivity and specificity, such that higher sensiti ...
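
The two conditional probabilities defined in this entry can be estimated from paired true and reported labels, as in the minimal Python sketch below. The function name `sensitivity_specificity` and the ten example cases are hypothetical and serve only to show the arithmetic.

```python
def sensitivity_specificity(truth, predicted):
    """Return (sensitivity, specificity) from paired true and test labels."""
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)          # true positives
    fn = sum(1 for t, p in pairs if t and not p)      # false negatives
    tn = sum(1 for t, p in pairs if not t and not p)  # true negatives
    fp = sum(1 for t, p in pairs if not t and p)      # false positives
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one truly positive and one truly negative case")
    sensitivity = tp / (tp + fn)  # P(test positive | truly positive)
    specificity = tn / (tn + fp)  # P(test negative | truly negative)
    return sensitivity, specificity

# Hypothetical cases: 4 truly positive, 6 truly negative.
truth     = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

sens, spec = sensitivity_specificity(truth, predicted)
print(sens, spec)
```

Here 3 of the 4 truly positive cases test positive and 4 of the 6 truly negative cases test negative, so the sketch yields a sensitivity of 3/4 and a specificity of 4/6.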

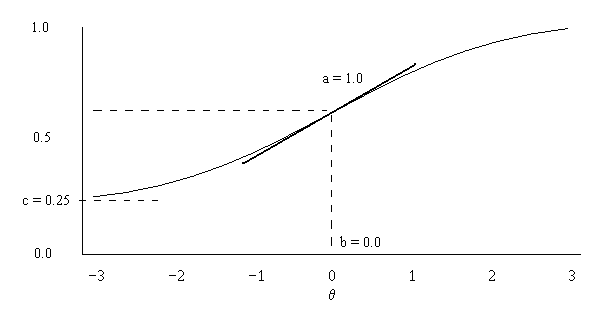

Item Response Theory

In psychometrics, item response theory (IRT, also known as latent trait theory, strong true score theory, or modern mental test theory) is a paradigm for the design, analysis, and scoring of tests, questionnaires, and similar instruments measuring abilities, attitudes, or other variables. It is a theory of testing based on the relationship between individuals' performances on a test item and the test takers' levels of performance on an overall measure of the ability that item was designed to measure. Several different statistical models are used to represent both item and test taker characteristics. Unlike simpler alternatives for creating scales and evaluating questionnaire responses, it does not assume that each item is equally difficult. This distinguishes IRT from, for instance, Likert scaling, in which "All items are assumed to be replications of each other or in other words items are considered to be parallel instruments". By contrast ...