
Capsule Neural Network

A capsule neural network (CapsNet) is a type of artificial neural network (ANN) designed to better model hierarchical relationships. The approach is an attempt to more closely mimic biological neural organization. The idea is to add structures called "capsules" to a convolutional neural network (CNN), and to reuse the output from several of those capsules to form representations for higher capsules that are more stable with respect to various perturbations. The output is a vector consisting of the probability of an observation and a pose for that observation. This vector is similar to the output produced, for example, when doing classification with localization in CNNs. Among other benefits, CapsNets address the "Picasso problem" in image recognition: images that have all the right parts but not in the correct spatial relationship (e.g., in a "face", the positions of the mouth and one eye are switched). For image recognition, CapsNets exploit t ...

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called artificial neurons, which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. The neurons are connected by edges, which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the activation function. The strength of the signal at each connection is determined by a weight, which is adjusted during the learning process. Typically, ne ...

Artificial Neuron

An artificial neuron is a mathematical function conceived as a model of a biological neuron in a neural network. The artificial neuron is the elementary unit of an artificial neural network. The design of the artificial neuron was inspired by biological neural circuitry. Its inputs are analogous to excitatory postsynaptic potentials and inhibitory postsynaptic potentials at neural dendrites. Its weights are analogous to synaptic weights, and its output is analogous to a neuron's action potential, which is transmitted along its axon. Usually, each input is separately weighted, and the weighted sum is added to a term known as a bias (loosely corresponding to the threshold potential) before being passed through a nonlinear function known as an activation function. Depending on the task, these functions could have a sigmoid shape (e.g. for binary classification), but they may also take the form of other nonlinear functions, piecewise linear functions, or step fun ...
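
The weighted-sum-plus-bias computation described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function names `sigmoid` and `neuron_output` are hypothetical, and the sigmoid is only one of the activation functions the summary mentions.

```python
import math


def sigmoid(z: float) -> float:
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weight each input separately, add the bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


if __name__ == "__main__":
    # Weighted sum is 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
    print(neuron_output([1.0, 2.0], [0.5, -0.25], 0.0))
```

Swapping `sigmoid` for a step or piecewise linear function changes only the last line of `neuron_output`; the weighted sum stays the same.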

Cerebral Cortex

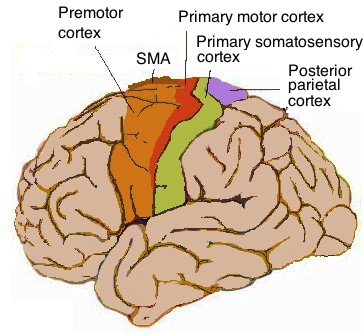

The cerebral cortex, also known as the cerebral mantle, is the outer layer of neural tissue of the cerebrum of the brain in humans and other mammals. It is the largest site of neural integration in the central nervous system, and plays a key role in attention, perception, awareness, thought, memory, language, and consciousness. The six-layered neocortex makes up approximately 90% of the cortex, with the allocortex making up the remainder. The cortex is divided into left and right parts by the longitudinal fissure, which separates the two cerebral hemispheres that are joined beneath the cortex by the corpus callosum and other commissural fibers. In most mammals, apart from small mammals that have small brains, the cerebral cortex is folded, providing a greater surface area in the confined volume of the cranium. Apart from minimising brain and cranial volume, cortical folding is crucial for the brain circuitry ...

Chandelier Cell

Chandelier cells or chandelier neurons are a subset of GABAergic cortical interneurons. They are described as parvalbumin-containing and fast-spiking to distinguish them from other subtypes of GABAergic neurons, although some studies have suggested that only a subset of chandelier cells test positive for parvalbumin by immunostaining. The name comes from the specific shape of their axon arbors, with the terminals forming distinct arrays called "cartridges". The cartridges are immunoreactive to an isoform of the GABA membrane transporter, GAT-1, and this serves as their identifying feature. GAT-1 is involved in the process of GABA reuptake into nerve terminals, thus helping to terminate its synaptic activity. Chandelier neurons synapse exclusively onto the axonal initial segment of pyramidal neurons, near the site where the action potential is generated. It is believed that they provide inhibitory input to the pyramidal neurons, but there are data showing that in some circumstances ...

Winner-take-all (computing)

Winner-take-all is a computational principle applied in computational models of neural networks by which neurons compete with each other for activation. In the classical form, only the neuron with the highest activation stays active while all other neurons shut down; however, other variations allow more than one neuron to be active, for example the soft winner-take-all, by which a power function is applied to the neurons. In the theory of artificial neural networks, winner-take-all networks are a case of competitive learning in recurrent neural networks. Output nodes in the network mutually inhibit each other, while simultaneously activating themselves through reflexive connections. After some time, only one node in the output layer will be active, namely the one corresponding to the strongest input. Thus the network uses nonlinear inhibition to pick out the largest of a set of inputs. Winner-take-all is a general computational primiti ...
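
A small sketch may make the difference between the classical and soft variants concrete. The names `hard_wta` and `soft_wta` are hypothetical. The soft version shown here raises each activation to a power and then normalises by the sum; the normalisation step is an assumption made for illustration, as is the restriction to non-negative activations. Neither function models the recurrent inhibition dynamics; they only compute the end result.

```python
def hard_wta(activations):
    """Classical winner-take-all: keep only the largest activation."""
    winner = max(range(len(activations)), key=lambda i: activations[i])
    return [a if i == winner else 0.0 for i, a in enumerate(activations)]


def soft_wta(activations, power=2.0):
    """Soft variant (illustrative): apply a power function, then normalise.

    Assumes non-negative activations that are not all zero. Larger values of
    `power` push the result closer to the hard winner-take-all outcome.
    """
    powered = [a ** power for a in activations]
    total = sum(powered)
    return [p / total for p in powered]


if __name__ == "__main__":
    acts = [0.2, 0.9, 0.5]
    print(hard_wta(acts))
    print(soft_wta(acts, power=2.0))
    print(soft_wta(acts, power=8.0))
```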

Prior Probability

A prior probability distribution of an uncertain quantity, simply called the prior, is its assumed probability distribution before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable. In Bayesian statistics, Bayes' rule prescribes how to update the prior with new information to obtain the posterior probability distribution, which is the conditional distribution of the uncertain quantity given new data. Historically, the choice of priors was often constrained to a conjugate family of a given likelihood function, so that it would result in a tractable posterior of the same family. The widespread availability of Markov chain Monte Carlo methods, however, has made this less of a concern. There are many ways to const ...
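
The update from prior to posterior can be illustrated with a discrete example. This is a sketch under stated assumptions: the function name `bayes_update`, the two coin hypotheses, and their probabilities are all made up for illustration. The posterior for each hypothesis is proportional to its prior times the likelihood of the observed data.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses given a prior and likelihoods.

    `prior` maps hypothesis -> prior probability.
    `likelihood` maps hypothesis -> P(observed data | hypothesis).
    """
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalised.values())  # P(observed data)
    return {h: v / evidence for h, v in unnormalised.items()}


if __name__ == "__main__":
    # Hypothetical setup: a coin is either fair or biased towards heads.
    prior = {"fair": 0.5, "biased": 0.5}
    # Likelihood of observing one head under each hypothesis.
    likelihood_heads = {"fair": 0.5, "biased": 0.8}
    print(bayes_update(prior, likelihood_heads))
```

Feeding the returned posterior back in as the next prior gives the sequential form of the update for further observations.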

Log Probability

In probability theory and computer science, a log probability is simply a logarithm of a probability. The use of log probabilities means representing probabilities on a logarithmic scale $(-\infty, 0]$, instead of the standard $[0, 1]$ unit interval. Since the probabilities of independent events multiply, and logarithms convert multiplication to addition, log probabilities of independent events add. Log probabilities are thus practical for computations, and have an intuitive interpretation in terms of information theory: the negative expected value of the log probabilities is the information entropy of an event. Similarly, likelihoods are often transformed to the log scale, and the corresponding log-likelihood can be interpreted as the degree to which an event supports a statistical model. The log probability is widely used in implementations of computations with probability, and is studied as a concept in its own right in some applications of information theory, such as natural lan ...
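
The practical benefit of adding log probabilities instead of multiplying probabilities shows up when the numbers are very small. The sketch below uses the hypothetical helper `joint_log_prob` and made-up probabilities; it assumes the events are independent and that every probability is strictly positive.

```python
import math


def joint_log_prob(probs):
    """Log of the joint probability of independent events: sum of the logs."""
    return sum(math.log(p) for p in probs)


if __name__ == "__main__":
    probs = [0.5, 0.25, 0.1]
    # Multiplying directly and exponentiating the summed logs agree.
    print(math.prod(probs), math.exp(joint_log_prob(probs)))

    # With tiny probabilities the direct product underflows to 0.0,
    # while the log-scale sum remains an ordinary finite number.
    tiny = [1e-200, 1e-200]
    print(math.prod(tiny), joint_log_prob(tiny))
```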

Logit

In statistics, the logit function is the quantile function associated with the standard logistic distribution. It has many uses in data analysis and machine learning, especially in data transformations. Mathematically, the logit is the inverse of the standard logistic function $\sigma(x) = 1/(1+e^{-x})$, so the logit is defined as $\operatorname{logit} p = \sigma^{-1}(p) = \ln \frac{p}{1-p}$ for $p \in (0,1)$. Because of this, the logit is also called the log-odds, since it is equal to the logarithm of the odds $\frac{p}{1-p}$, where $p$ is a probability. Thus, the logit is a type of function that maps probability values from $(0, 1)$ to real numbers in $(-\infty, +\infty)$, akin to the probit function. If $p$ is a probability, then $p/(1-p)$ is the corresponding odds; the logit of the probability is the logarithm of the odds, i.e.: $\operatorname{logit}(p)=\ln\left( \frac{p}{1-p} \right) =\ln(p)-\ln(1-p)=-\ln\left( \frac{1}{p}-1\right)$ ...
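
The inverse relationship between the logit and the standard logistic function can be checked numerically. This is a minimal sketch; the function names `logit` and `logistic` are chosen for illustration, and the input check reflects the fact that the logit is only defined for probabilities strictly between 0 and 1.

```python
import math


def logistic(x: float) -> float:
    """Standard logistic function: maps a real number to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1): ln(p / (1 - p))."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


if __name__ == "__main__":
    p = 0.8
    x = logit(p)           # odds are 0.8 / 0.2 = 4, so this is ln(4)
    print(x, logistic(x))  # applying the logistic recovers p (up to rounding)
```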

Softmax Function

The softmax function, also known as softargmax or normalized exponential function, converts a tuple of real numbers into a probability distribution of possible outcomes. It is a generalization of the logistic function to multiple dimensions, and is used in multinomial logistic regression. The softmax function is often used as the last activation function of a neural network to normalize the output of a network to a probability distribution over predicted output classes. The softmax function takes as input a tuple of real numbers, and normalizes it into a probability distribution consisting of probabilities proportional to the exponentials of the input numbers. That is, prior to applying softmax, some tuple components could be negative, or greater than one, and might not sum to 1; but after applying softmax, each component will be in the interval (0, 1), and the components will add up to 1, so that they can be interpreted as probabilities. Furthermore, the la ...
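
The normalisation described above can be sketched directly. The function name `softmax` and the sample inputs are illustrative. Subtracting the maximum before exponentiating is a common numerical-stability trick that is not mentioned in the summary; it leaves the result unchanged because the shift cancels in the ratio.

```python
import math


def softmax(values):
    """Map real numbers to probabilities proportional to their exponentials."""
    shift = max(values)  # stability trick: avoids overflow in exp()
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [-1.0, 2.0, 5.0]  # may be negative or greater than one
    probs = softmax(scores)
    print(probs, sum(probs))   # each entry in (0, 1); entries sum to 1
```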

Dot Product

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors), and returns a single number. (The term scalar product means literally "product with a scalar as a result". It is also used for other symmetric bilinear forms, for example in a pseudo-Euclidean space. It is not to be confused with scalar multiplication.) In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used. It is often called the inner product (or rarely the projection product) of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space (see Inner product space for more). It should not be confused with the cross product. Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euc ...
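
The algebraic definition translates directly into code. In the sketch below, `dot` and `angle_between` are hypothetical helper names. The angle helper relies on the standard identity that the dot product equals the product of the two vector lengths and the cosine of the angle between them; it assumes neither vector is the zero vector.

```python
import math


def dot(a, b):
    """Sum of the products of corresponding entries of two sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x * y for x, y in zip(a, b))


def angle_between(a, b):
    """Angle in radians between two non-zero vectors, via cos = a.b / (|a||b|)."""
    cos_theta = dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))
    # Clamp to [-1, 1] to guard against rounding slightly outside the domain.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


if __name__ == "__main__":
    print(dot([1, 3, -5], [4, -2, -1]))            # 1*4 + 3*(-2) + (-5)*(-1)
    print(angle_between([1.0, 0.0], [0.0, 1.0]))   # perpendicular vectors
```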

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term backpropagation refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm – including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ...
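
The layer-by-layer use of the chain rule can be shown on a deliberately tiny network. Everything in this sketch is an illustrative assumption: a single input, one hidden unit with a tanh activation, one linear output, a squared-error loss, and the names `forward`, `loss`, and `backprop`. The backward pass reuses each intermediate derivative when moving from the output towards the input, and a finite-difference check confirms the result.

```python
import math


def forward(w1, w2, x):
    """Forward pass: hidden activation h = tanh(w1 * x), output y = w2 * h."""
    h = math.tanh(w1 * x)
    return h, w2 * h


def loss(w1, w2, x, t):
    """Squared-error loss for a single input-output example (x, t)."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - t) ** 2


def backprop(w1, w2, x, t):
    """Gradient of the loss w.r.t. (w1, w2), computed from the last layer backward."""
    h, y = forward(w1, w2, x)
    dL_dy = y - t                   # derivative of the loss at the output
    dL_dw2 = dL_dy * h              # output-layer weight
    dL_dh = dL_dy * w2              # propagate back to the hidden activation
    dL_dz = dL_dh * (1.0 - h * h)   # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    dL_dw1 = dL_dz * x              # hidden-layer weight
    return dL_dw1, dL_dw2


if __name__ == "__main__":
    w1, w2, x, t, eps = 0.5, -1.5, 2.0, 1.0, 1e-6
    g1, g2 = backprop(w1, w2, x, t)
    # Central finite differences as an independent check of the gradients.
    n1 = (loss(w1 + eps, w2, x, t) - loss(w1 - eps, w2, x, t)) / (2 * eps)
    n2 = (loss(w1, w2 + eps, x, t) - loss(w1, w2 - eps, x, t)) / (2 * eps)
    print(g1, n1)
    print(g2, n2)
```

How the gradients are then used, for example in a gradient descent step, is a separate choice and is not part of this sketch.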

Digital Image Processing

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions (perhaps more), digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics (especially the creation and improvement of discrete mathematics theory); and third, the demand for a wide range of applications in environment, agriculture, military, industry and medical science has incre ...