
CURE Data Clustering Algorithm

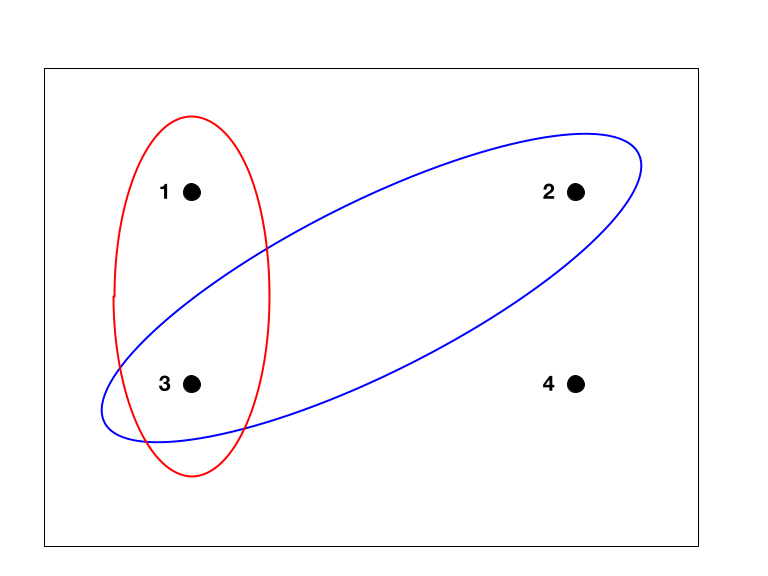

CURE (Clustering Using REpresentatives) is an efficient data clustering algorithm for large databases. Compared with K-means clustering, it is more robust to outliers and able to identify clusters with non-spherical shapes and varying sizes.

Drawbacks of traditional algorithms: The popular K-means clustering algorithm minimizes the sum of squared errors criterion $E = \sum_{i=1}^{k} \sum_{p \in C_i} (p - m_i)^2$, where $m_i$ is the mean of cluster $C_i$. Given large differences in the sizes or geometries of different clusters, the square error method could split large clusters to minimize the square error, which is not always correct. Hierarchical clustering algorithms have the same problems, because none of the distance measures between clusters ($d_{\min}$, $d_{\text{mean}}$) tends to work with different cluster shapes. The running time is also high when n is large. The problem with the BIRCH algorithm is that once the clusters are generated after step 3, it uses centroids of the clusters and assigns each data point to the cluster with the closest cen ...
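The squared-error criterion in the CURE entry can be illustrated with a small sketch. The function name `sum_squared_error` and the toy data are assumptions made for illustration only; clusters are taken to be non-empty lists of equal-length numeric tuples.

```python
def sum_squared_error(clusters):
    """Sum, over all clusters, of squared distances from each point to its cluster mean."""
    total = 0.0
    for points in clusters:
        dim = len(points[0])
        # Mean of the cluster, one coordinate at a time.
        mean = [sum(p[d] for p in points) / len(points) for d in range(dim)]
        # Squared Euclidean distance of every point to that mean.
        total += sum((p[d] - mean[d]) ** 2 for p in points for d in range(dim))
    return total


# Hypothetical toy data: two small, well-separated clusters.
clusters = [
    [(0.0, 0.0), (2.0, 0.0)],
    [(10.0, 10.0), (10.0, 12.0)],
]
error = sum_squared_error(clusters)
```

A partition that splits one elongated cluster in two can score a lower value of this criterion than the natural grouping, which is the drawback the entry describes.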

Data Clustering

Cluster analysis or clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis itself is not one specific algorithm, but the general task to be solved. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or particular statistical distributions. Clustering can therefore be formulated as a multi-objective optimization problem. T ...

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of compu ...

BFR Algorithm

The BFR algorithm, named after its inventors Bradley, Fayyad and Reina, is a variant of the k-means algorithm that is designed to cluster data in a high-dimensional Euclidean space. It makes a very strong assumption about the shape of clusters: they must be normally distributed about a centroid. The mean and standard deviation for a cluster may differ for different dimensions, but the dimensions must be independent.

K-means Clustering

k-means clustering is a method of vector quantization, originally from signal processing, that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean (cluster center or centroid), which serves as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. k-means clustering minimizes within-cluster variances (squared Euclidean distances), but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using k-medians and k-medoids. The problem is computationally difficult (NP-hard); however, efficient heuristic algorithms converge quickly to a local optimum. These are usually similar to the expectation-maximization algorithm for mixtures of Gaussian distributions via an iterative refi ...
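One common heuristic of the kind mentioned in the k-means entry alternates between assigning points to the nearest mean and recomputing the means (often called Lloyd's algorithm). The entry is truncated before it names a specific method, so the sketch below is an assumption about what such a heuristic looks like, not the text's own procedure; the function name `kmeans`, its parameters, and the toy points are hypothetical.

```python
import random


def kmeans(points, k, iterations=100, seed=0):
    """Alternate nearest-mean assignment and mean update until the means stop changing."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster with the nearest mean
        # (squared Euclidean distance).
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
            )
            clusters[nearest].append(p)
        # Update step: move each mean to the average of its members;
        # an empty cluster keeps its previous mean.
        new_centroids = [
            tuple(sum(c) / len(members) for c in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged to a local optimum
        centroids = new_centroids
    return centroids, clusters


# Hypothetical toy data: two well-separated groups.
points = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (11.0, 10.0)]
centroids, clusters = kmeans(points, k=2)
```

The result depends on the initial means, which is why the procedure is only guaranteed to reach a local optimum.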

Kd-tree

In computer science, a k-d tree (short for k-dimensional tree) is a space-partitioning data structure for organizing points in a k-dimensional space. k-d trees are a useful data structure for several applications, such as searches involving a multidimensional search key (e.g. range searches and nearest neighbor searches) and creating point clouds. k-d trees are a special case of binary space partitioning trees.

Description: The k-d tree is a binary tree in which every node is a k-dimensional point. Every non-leaf node can be thought of as implicitly generating a splitting hyperplane that divides the space into two parts, known as half-spaces. Points to the left of this hyperplane are represented by the left subtree of that node and points to the right of the hyperplane are represented by the right subtree. The hyperplane direction is chosen in the following way: every node in the tree is associated with one of the k dimensions, with the h ...
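A minimal construction sketch may help make the k-d tree entry concrete. The entry is truncated before it states how the splitting dimension is picked, so the choices here (cycling through the axes by depth and splitting at the median point) are assumptions based on a widely used convention; the names `Node` and `build_kdtree` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Node:
    point: Tuple[float, ...]        # the k-dimensional point stored at this node
    axis: int                       # dimension of the splitting hyperplane
    left: Optional["Node"] = None   # points on the lower side of the hyperplane
    right: Optional["Node"] = None  # points on the upper side of the hyperplane


def build_kdtree(points, depth=0):
    """Recursively build a k-d tree from a list of equal-length tuples."""
    if not points:
        return None
    k = len(points[0])
    axis = depth % k  # assumed convention: cycle through the k axes by depth
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2  # the median point becomes the splitting node
    return Node(
        point=ordered[mid],
        axis=axis,
        left=build_kdtree(ordered[:mid], depth + 1),
        right=build_kdtree(ordered[mid + 1:], depth + 1),
    )


# Hypothetical 2-dimensional example.
tree = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
```

Splitting at the median keeps the tree roughly balanced, at the cost of a sort at every level of the recursion.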

Sample Space

In probability theory, the sample space (also called sample description space, possibility space, or outcome space) of an experiment or random trial is the set of all possible outcomes or results of that experiment. A sample space is usually denoted using set notation, and the possible ordered outcomes, or sample points, are listed as elements in the set. It is common to refer to a sample space by the labels S, Ω, or U (for "universal set"). The elements of a sample space may be numbers, words, letters, or symbols. They can also be finite, countably infinite, or uncountably infinite. A subset of the sample space is an event, denoted by E. If the outcome of an experiment is included in E, then event E has occurred. For example, if the experiment is tossing a single coin, the sample space is the set $\{H, T\}$, where the outcome H means that the coin is heads and the outcome T means that the coin is tails. The possible events are $E = \{H\}$, $E = \{T\}$, and $E = \{H, T\}$. For tossing two coins, ...
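The coin-toss example can be enumerated directly. The sketch below is illustrative only; the function names `sample_space` and `events` are hypothetical. It lists every subset of the sample space as an event, which formally also includes the empty set.

```python
from itertools import combinations, product


def sample_space(n_coins):
    """All ordered outcomes of tossing n_coins coins, e.g. 'HT' for heads then tails."""
    return ["".join(outcome) for outcome in product("HT", repeat=n_coins)]


def events(space):
    """Every subset of the sample space; each subset is one event."""
    return [
        set(subset)
        for size in range(len(space) + 1)
        for subset in combinations(space, size)
    ]


one_coin = sample_space(1)
two_coins = sample_space(2)
one_coin_events = events(one_coin)
```

A sample space with n outcomes has 2^n events, so enumerating events this way is practical only for very small experiments.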

Trade-off

A trade-off (or tradeoff) is a situational decision that involves diminishing or losing one quality, quantity, or property of a set or design in return for gains in other aspects. In simple terms, a tradeoff is where one thing increases, and another must decrease. Tradeoffs stem from limitations of many origins, including simple physics – for instance, only a certain volume of objects can fit into a given space, so a full container must remove some items in order to accept any more, and vessels can carry a few large items or multiple small items. Tradeoffs also commonly refer to different configurations of a single item, such as the tuning of strings on a guitar to enable different notes to be played, as well as an allocation of time and attention towards different tasks. The concept of a tradeoff suggests a tactical or strategic choice made with full comprehension of the advantages and disadvantages of each setup. An economic example is the decision to invest in stocks, which a ...

Primary Storage

Computer data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast volatile technologies (which lose data when off power) are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neuman ...

Random Sample

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset (a statistical sample) of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians attempt to collect samples that are representative of the population in question. Sampling has lower costs and faster data collection than measuring the entire population and can provide insights in cases where it is infeasible to measure an entire population. Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly in stratified sampling. Results from probability theory and statistical theory are employed to guide the practice. In business and medical research, sampling is widely used for gathering information about a population. Acceptance sampling is used to determine ...
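As a small illustration of estimating a population characteristic from a sample, the sketch below draws a simple random sample without replacement and uses its mean as the estimate. Simple random sampling is only one possible sample design and is chosen here as an assumption; the function name `estimate_mean` and the toy population are hypothetical.

```python
import random
import statistics


def estimate_mean(population, sample_size, seed=0):
    """Estimate the population mean from a simple random sample drawn without replacement."""
    rng = random.Random(seed)
    sample = rng.sample(population, sample_size)
    return statistics.mean(sample), sample


# Hypothetical population: the integers 1 to 1000 (true mean 500.5).
population = list(range(1, 1001))
estimate, sample = estimate_mean(population, sample_size=100)
```

Only 100 of the 1000 values are inspected, which mirrors the cost advantage of sampling over measuring the entire population.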

Database

In computing, a database is an organized collection of data stored and accessed electronically. Small databases can be stored on a file system, while large databases are hosted on computer clusters or cloud storage. The design of databases spans formal techniques and practical considerations, including data modeling, efficient data representation and storage, query languages, security and privacy of sensitive data, and distributed computing issues, including supporting concurrent access and fault tolerance. A database management system (DBMS) is the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS software additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an appli ...