|

Acid Catalysts

In computer science, ACID ( atomicity, consistency, isolation, durability) is a set of properties of database transactions intended to guarantee data validity despite errors, power failures, and other mishaps. In the context of databases, a sequence of database operations that satisfies the ACID properties (which can be perceived as a single logical operation on the data) is called a ''transaction''. For example, a transfer of funds from one bank account to another, even involving multiple changes such as debiting one account and crediting another, is a single transaction. In 1983, Andreas Reuter and Theo Härder coined the acronym ''ACID'', building on earlier work by Jim Gray who named atomicity, consistency, and durability, but not isolation, when characterizing the transaction concept. These four properties are the major guarantees of the transaction paradigm, which has influenced many aspects of development in database systems. According to Gray and Reuter, the IBM Inform ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to Applied science, practical disciplines (including the design and implementation of Computer architecture, hardware and Computer programming, software). Computer science is generally considered an area of research, academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing Vulnerability (computing), security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Progr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Integrity Constraints

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire life-cycle and is a critical aspect to the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities). Moreover, upon later retrieval, ensure the data is the same as when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is not to be confused with data security, the discipline of protect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Disk Buffer

In computer storage, disk buffer (often ambiguously called disk cache or cache buffer) is the embedded memory in a hard disk drive (HDD) or solid state drive (SSD) acting as a buffer between the rest of the computer and the physical hard disk platter or flash memory that is used for storage. Modern hard disk drives come with 8 to 256 MiB of such memory, and solid-state drives come with up to 4 GB of cache memory. Since the late 1980s, nearly all disks sold have embedded microcontrollers and either an ATA, Serial ATA, SCSI, or Fibre Channel interface. The drive circuitry usually has a small amount of memory, used to store the data going to and coming from the disk platters. The disk buffer is physically distinct from and is used differently from the page cache typically kept by the operating system in the computer's main memory. The disk buffer is controlled by the microcontroller in the hard disk drive, and the page cache is controlled by the computer to which that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Consistency

Data consistency refers to whether the same data kept at different places do or do not match. Point-in-time consistency Point-in-time consistency is an important property of backup files and a critical objective of software that creates backups. It is also relevant to the design of disk memory systems, specifically relating to what happens when they are unexpectedly shut down. As a relevant backup example, consider a website with a database such as the online encyclopedia Wikipedia, which needs to be operational around the clock, but also must be backed up with regularity to protect against disaster. Portions of Wikipedia are constantly being updated every minute of every day, meanwhile, Wikipedia's database is stored on servers in the form of one or several very large files which require minutes or hours to back up. These large files—as with any database—contain numerous data structures which reference each other by location. For example, some structures are indexes which ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Non-volatile Memory

Non-volatile memory (NVM) or non-volatile storage is a type of computer memory that can retain stored information even after power is removed. In contrast, volatile memory needs constant power in order to retain data. Non-volatile memory typically refers to storage in semiconductor memory chips, which store data in floating-gate memory cells consisting of floating-gate MOSFETs (metal–oxide–semiconductor field-effect transistors), including flash memory storage such as NAND flash and solid-state drives (SSD). Other examples of non-volatile memory include read-only memory (ROM), EPROM (erasable programmable ROM) and EEPROM (electrically erasable programmable ROM), ferroelectric RAM, most types of computer data storage devices (e.g. disk storage, hard disk drives, optical discs, floppy disks, and magnetic tape), and early computer storage methods such as punched tape and cards. Overview Non-volatile memory is typically used for the task of secondary storage or long-term ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

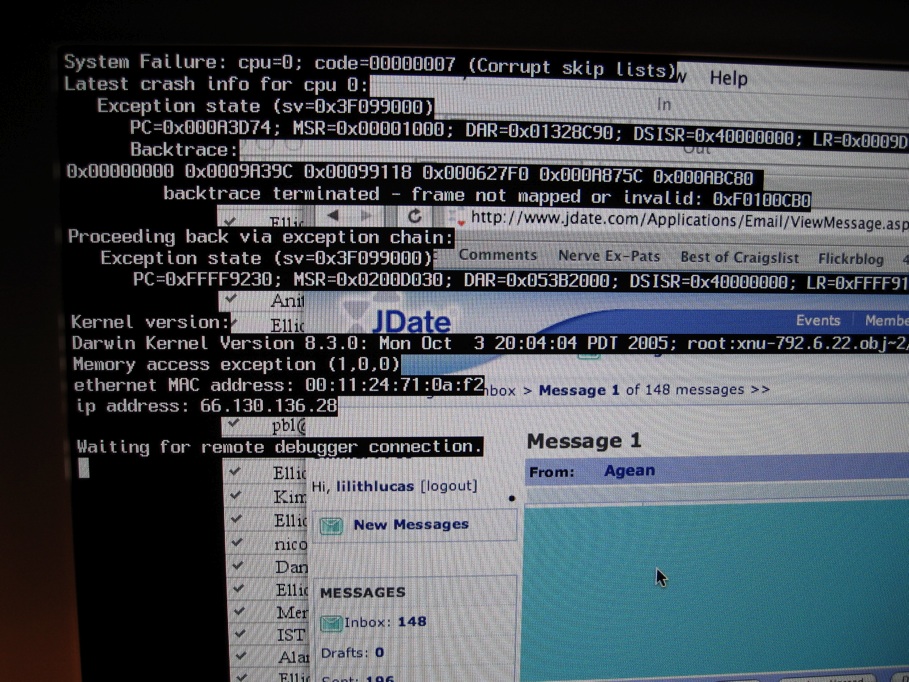

Crash (computing)

In computing, a crash, or system crash, occurs when a computer program such as a software application or an operating system stops functioning properly and exits. On some operating systems or individual applications, a crash reporting service will report the crash and any details relating to it (or give the user the option to do so), usually to the developer(s) of the application. If the program is a critical part of the operating system, the entire system may crash or hang, often resulting in a kernel panic or fatal system error. Most crashes are the result of a software bug. Typical causes include accessing invalid memory addresses, incorrect address values in the program counter, buffer overflow, overwriting a portion of the affected program code due to an earlier bug, executing invalid machine instructions (an illegal opcode), or triggering an unhandled exception. The original software bug that started this chain of events is typically considered to be the cause of the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Durability (computer Science)

In database systems, durability is the ACID property which guarantees that transactions that have committed will survive permanently. For example, if a flight booking reports that a seat has successfully been booked, then the seat will remain booked even if the system crashes. Durability can be achieved by flushing the transaction's log records to non-volatile storage before acknowledging commitment. In distributed transactions, all participating servers must coordinate before commit can be acknowledged. This is usually done by a two-phase commit protocol. Many DBMSs implement durability by writing transactions into a transaction log that can be reprocessed to recreate the system state right before any later failure. A transaction is deemed committed only after it is entered in the log. See also * Atomicity * Consistency In classical deductive logic, a consistent theory is one that does not lead to a logical contradiction. The lack of contradiction can be defined in ei ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Concurrency Control

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for Concurrent computing, concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and computer hardware, hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in Computer memory, memory or Computer data storage, storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and Scientific theory, theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the who ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Concurrent Computing

Concurrent computing is a form of computing in which several computations are executed '' concurrently''—during overlapping time periods—instead of ''sequentially—''with one completing before the next starts. This is a property of a system—whether a program, computer, or a network—where there is a separate execution point or "thread of control" for each process. A ''concurrent system'' is one where a computation can advance without waiting for all other computations to complete. Concurrent computing is a form of modular programming. In its paradigm an overall computation is factored into subcomputations that may be executed concurrently. Pioneers in the field of concurrent computing include Edsger Dijkstra, Per Brinch Hansen, and C.A.R. Hoare. Introduction The concept of concurrent computing is frequently confused with the related but distinct concept of parallel computing, Pike, Rob (2012-01-11). "Concurrency is not Parallelism". ''Waza conference'', 11 January ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Foreign Key

A foreign key is a set of attributes in a table that refers to the primary key of another table. The foreign key links these two tables. Another way to put it: In the context of relational databases, a foreign key is a set of attributes subject to a certain kind of inclusion dependency constraints, specifically a constraint that the tuples consisting of the foreign key attributes in one relation, R, must also exist in some other (not necessarily distinct) relation, S, and furthermore that those attributes must also be a candidate key in S. In simpler words, a foreign key is a set of attributes that ''references'' a candidate key. For example, a table called TEAM may have an attribute, MEMBER_NAME, which is a foreign key referencing a candidate key, PERSON_NAME, in the PERSON table. Since MEMBER_NAME is a foreign key, any value existing as the name of a member in TEAM must also exist as a person's name in the PERSON table; in other words, every member of a TEAM is also a PERSON. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unique Key

In relational database management systems, a unique key is a candidate key that is not the primary key of the relation. All the candidate keys of a relation can uniquely identify the records of the relation, but only one of them is used as the primary key of the relation. The remaining candidate keys are called unique keys because they can uniquely identify a record in a relation. Unique keys can consist of multiple columns. Unique keys are also called alternate keys. Unique keys are an alternative to the primary key of the relation. Generally, the unique keys have a UNIQUE constraint assigned to it in order to prevent duplicates (a duplicate entry is not valid in a unique column). Alternate keys may be used like the primary key when doing a single-table select or when filtering in a ''where'' clause, but are not typically used to join multiple tables. Summary Keys provide the means for database users and application software to identify, access and update information in a databas ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |