
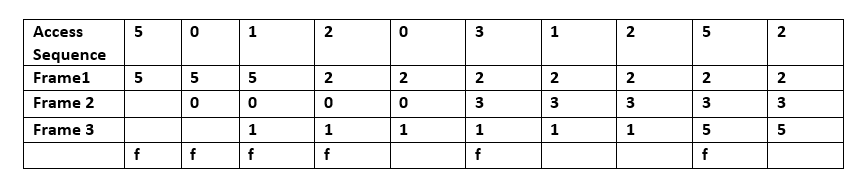

Bélády's Anomaly

In computer storage, Bélády's anomaly is the phenomenon in which increasing the number of page frames results in an increase in the number of page faults for certain memory access patterns. It is commonly observed with the first-in, first-out (FIFO) page replacement algorithm. Under FIFO, the number of page faults may or may not increase as the number of page frames increases, whereas under the optimal algorithm and stack-based algorithms such as LRU, the number of page faults never increases as frames are added. László Bélády demonstrated this in 1969.

Background: In common computer memory management, information is loaded in specific-sized chunks. Each chunk is referred to as a page. Main memory can hold only a limited number of pages at a time. It req ...
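The anomaly is easiest to see by counting faults directly. The following is a minimal sketch, not taken from the source: the function names `count_faults_fifo` and `count_faults_lru` and the reference string `REFS` are illustrative assumptions (the string is a commonly used textbook example). It counts page faults under FIFO and LRU for 3 and 4 frames.

```python
from collections import OrderedDict, deque

# Illustrative reference string (an assumption, not from the source text).
REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]


def count_faults_fifo(refs, frames):
    """Count page faults when the oldest-loaded page is always evicted."""
    queue = deque()      # load order, oldest page on the left
    resident = set()     # pages currently held in a frame
    faults = 0
    for page in refs:
        if page in resident:
            continue     # hit: FIFO does not reorder on access
        faults += 1
        if len(queue) == frames:
            resident.discard(queue.popleft())
        queue.append(page)
        resident.add(page)
    return faults


def count_faults_lru(refs, frames):
    """Count page faults when the least recently used page is evicted."""
    recency = OrderedDict()  # least recently used page first
    faults = 0
    for page in refs:
        if page in recency:
            recency.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1
        if len(recency) == frames:
            recency.popitem(last=False)
        recency[page] = True
    return faults


for frames in (3, 4):
    print(frames, count_faults_fifo(REFS, frames), count_faults_lru(REFS, frames))
# 3 9 10
# 4 10 8
```

With this particular string, FIFO goes from 9 faults with 3 frames to 10 faults with 4 frames, while LRU goes from 10 to 8. Other reference strings may show no anomaly at all under FIFO.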

Computer Storage

Computer data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast volatile technologies (which lose data when powered off) are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neumann a ...

Page Fault

In computing, a page fault (sometimes called PF or hard fault) is an exception that the memory management unit (MMU) raises when a process accesses a memory page without proper preparations. Accessing the page requires a mapping to be added to the process's virtual address space. In addition, the actual page contents may need to be loaded from a backing store, such as a disk. The MMU detects the page fault, but the operating system's kernel handles the exception by making the required page accessible in physical memory or by denying an illegal memory access. Valid page faults are common and necessary to increase the amount of memory available to programs in any operating system that uses virtual memory, such as Windows, macOS, and the Linux kernel.

Types (minor): If the page is loaded in memory at the time the fault is generated, but is not marked in the memory management unit as being loaded in memory, then it is called a minor or soft page fault. The page fault handler in the ...
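On Unix-like systems, a process can observe its own fault counts. The sketch below is only an illustration and assumes a platform where Python's standard `resource` module is available (it is not on Windows). The helper names `fault_counts` and `faults_while_touching` are hypothetical. `ru_minflt` counts faults serviced without I/O and `ru_majflt` counts faults that required I/O. The actual numbers depend on the operating system and allocator, so no specific output is claimed.

```python
import resource


def fault_counts():
    """Return (minor, major) page-fault counters for the current process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt


def faults_while_touching(n_bytes):
    """Allocate a buffer, write one byte per page, and report new faults."""
    page_size = resource.getpagesize()
    before = fault_counts()
    buf = bytearray(n_bytes)
    for offset in range(0, n_bytes, page_size):
        buf[offset] = 1  # first write to a page may trigger a fault
    after = fault_counts()
    return after[0] - before[0], after[1] - before[1]


minor, major = faults_while_touching(16 * 1024 * 1024)
print("minor faults:", minor, "major faults:", major)
```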

FIFO (computing and electronics)

In computing and in systems theory, FIFO is an acronym for first in, first out (the first in is the first out), a method for organizing the manipulation of a data structure (often, specifically a data buffer) where the oldest (first) entry, or "head" of the queue, is processed first. Such processing is analogous to servicing people in a queue area on a first-come, first-served (FCFS) basis, i.e. in the same sequence in which they arrive at the queue's tail. FCFS is also the jargon term for the FIFO operating system scheduling algorithm, which gives every process central processing unit (CPU) time in the order in which it is demanded. FIFO's opposite is LIFO, last in, first out, where the youngest entry, or "top of the stack", is processed first. A priority queue is neither FIFO nor LIFO but may adopt similar behaviour temporarily or by default. Queueing theory encompasses these methods for processing data structures, as well as interactions between s ...
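The difference between FIFO and LIFO ordering can be shown in a few lines. This is a minimal sketch using Python's standard `collections.deque`. The function names `drain_fifo` and `drain_lifo` are illustrative assumptions.

```python
from collections import deque


def drain_fifo(items):
    """Process entries oldest first: enqueue at the tail, dequeue at the head."""
    queue = deque(items)
    out = []
    while queue:
        out.append(queue.popleft())  # head of the queue leaves first
    return out


def drain_lifo(items):
    """Process entries youngest first, as a stack does."""
    stack = list(items)
    out = []
    while stack:
        out.append(stack.pop())      # top of the stack leaves first
    return out


print(drain_fifo(["a", "b", "c"]))  # ['a', 'b', 'c']
print(drain_lifo(["a", "b", "c"]))  # ['c', 'b', 'a']
```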

Page Replacement Algorithm

In a computer operating system that uses paging for virtual memory management, page replacement algorithms decide which memory pages to page out (sometimes called swapping out, or writing to disk) when a page of memory needs to be allocated. Page replacement happens when a requested page is not in memory (a page fault) and a free page cannot be used to satisfy the allocation, either because there are none or because the number of free pages is lower than some threshold. When the page that was selected for replacement and paged out is referenced again, it has to be paged in (read in from disk), and this involves waiting for I/O completion. This determines the quality of the page replacement algorithm: the less time spent waiting for page-ins, the better the algorithm. A page replacement algorithm looks at the limited information about accesses to the pages provided by hardware, and tries to guess which pages should be replaced to minimize the total number of page misses, while balancing thi ...
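As a baseline for comparing replacement policies, the sketch below counts faults under a clairvoyant policy that evicts the resident page whose next use lies farthest in the future. This is an offline illustration only, since a real operating system cannot see future references. The function name `count_faults_optimal` and the sample reference string are assumptions, not part of the source.

```python
def count_faults_optimal(refs, frames):
    """Count faults when the page used farthest in the future is evicted."""
    resident = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in resident:
            continue
        faults += 1
        if len(resident) >= frames:

            def next_use(candidate):
                # Index of the next reference, or infinity if never used again.
                try:
                    return refs.index(candidate, i + 1)
                except ValueError:
                    return float("inf")

            resident.remove(max(resident, key=next_use))
        resident.add(page)
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]  # illustrative reference string
print(count_faults_optimal(refs, 3))  # 7
print(count_faults_optimal(refs, 4))  # 6
```

Because it needs the full reference list in advance, this policy serves as a lower bound against which practical algorithms can be measured rather than as something to deploy.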

Cache Replacement Policies

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can use to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones.

Overview: The average memory reference time is

$T = m \times T_m + T_h + E$

where

$m$ = miss ratio = 1 - (hit ratio)
$T_m$ = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache)
$T_h$ = the latency: the time to reference the cache (should be the same for hits and misses)
$E$ = various secondary effects, such as queuing effects in mult ...
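The average reference time formula above can be evaluated directly. The sketch below is illustrative: the function name `average_reference_time` and the sample timings (a 5% miss ratio, 100 ns per miss, 2 ns cache latency) are assumptions chosen for the arithmetic, not measurements.

```python
import math


def average_reference_time(miss_ratio, t_miss, t_hit, extra=0.0):
    """Evaluate T = m * T_m + T_h + E, with all times in the same unit."""
    if not 0.0 <= miss_ratio <= 1.0:
        raise ValueError("miss_ratio must lie between 0 and 1")
    return miss_ratio * t_miss + t_hit + extra


# Assumed example values: m = 0.05, T_m = 100 ns, T_h = 2 ns, E = 0.
t = average_reference_time(0.05, 100.0, 2.0)
print(f"{t:.1f} ns")  # 7.0 ns
assert math.isclose(t, 7.0)
```

Halving the miss ratio to 0.025 in this example would bring the result down to 4.5 ns, which shows why the miss ratio dominates when `t_miss` is much larger than `t_hit`.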

László Bélády

László "Les" Bélády (born April 29, 1928, in Budapest; died November 6, 2021) was a Hungarian computer scientist notable for devising Bélády's Min, a theoretical memory caching algorithm, in 1966 while working at IBM Research. He also demonstrated the existence of Bélády's anomaly. During the 1980s, he was the editor-in-chief of the IEEE Transactions on Software Engineering.

Education: Bélády earned a B.S. in Mechanical Engineering, then an M.S. in Aeronautical Engineering, at the Technical University of Budapest in 1950.

Life and career: He left Hungary after the Hungarian Revolution of 1956. He then worked as a draftsman at Ford Motor Company in Cologne and as an aerodynamics engineer at Dassault in Paris. In 1961, he immigrated to the United States. In the 1960s and 1970s, he primarily lived in New York City with stints in California and England, where he joined International Business Machines and did early work in operating systems, virtual machine architectures, pr ...

Memory Management

Memory management is a form of resource management applied to computer memory. The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and free it for reuse when no longer needed. This is critical to any advanced computer system where more than a single process might be underway at any time. Several methods have been devised that increase the effectiveness of memory management. Virtual memory systems separate the memory addresses used by a process from actual physical addresses, allowing separation of processes and increasing the size of the virtual address space beyond the available amount of RAM using paging or swapping to secondary storage. The quality of the virtual memory manager can have an extensive effect on overall system performance. In some operating systems, e.g. OS/360 and successors, memory is managed by the operating system. In other operating systems, e.g. Unix-like operating sy ...

Page (computing)

A page, memory page, or virtual page is a fixed-length contiguous block of virtual memory, described by a single entry in the page table. It is the smallest unit of data for memory management in a virtual memory operating system. Similarly, a page frame is the smallest fixed-length contiguous block of physical memory into which memory pages are mapped by the operating system. A transfer of pages between main memory and an auxiliary store, such as a hard disk drive, is referred to as paging or swapping.

Page size trade-off: Page size is usually determined by the processor architecture. Traditionally, pages in a system had uniform size, such as 4,096 bytes. However, processor designs often allow two or more page sizes, sometimes simultaneously, because of the benefits this offers. There are several points that can factor into choosing the best page size.

Page table size: A system with a smaller page size uses more pages, requiring a page table that occupies more space. For example, if a 2^32 vi ...
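The relationship between page size and page table size can be worked through numerically. The sketch below is a rough illustration under stated assumptions: a flat, single-level page table, a 32-bit virtual address space, and a hypothetical 4-byte page-table entry. The function names are illustrative, and real systems typically use multi-level tables that behave differently.

```python
def page_count(address_bits, page_size):
    """Number of virtual pages needed to cover the whole address space."""
    return (1 << address_bits) // page_size


def flat_table_bytes(address_bits, page_size, entry_bytes=4):
    """Size of a flat page table, assuming one fixed-size entry per page."""
    return page_count(address_bits, page_size) * entry_bytes


for size in (4096, 65536):
    pages = page_count(32, size)
    table = flat_table_bytes(32, size)
    print(f"page size {size}: {pages} pages, {table} bytes of table")
# page size 4096: 1048576 pages, 4194304 bytes of table
# page size 65536: 65536 pages, 262144 bytes of table
```

Under these assumptions, a 16-fold increase in page size shrinks the table by the same factor.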