
Box–Cox Transformation

In statistics, a power transform is a family of functions applied to create a monotonic transformation of data using power functions. It is a data transformation technique used to stabilize variance, make the data follow a normal distribution more closely, improve the validity of measures of association (such as the Pearson correlation between variables), and support other data stabilization procedures. Power transforms are used in multiple fields, including multi-resolution and wavelet analysis, statistical data analysis, medical research, modeling of physical processes, geochemical data analysis, epidemiology and many other clinical, environmental and social research areas. Definition. The power transformation is defined as a continuously varying function, with respect to the power parameter λ, in a piecewise form that makes it continuous at the point of singularity (λ = 0). For data vectors (y_1, ..., y_n) in which each y_i > 0, th ...
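
The excerpt above breaks off before the formula, so the following is only a minimal sketch of the commonly used one-parameter Box–Cox form, (y^λ - 1)/λ for λ ≠ 0 and ln y at λ = 0. The function name `box_cox` and the sample values are illustrative assumptions, not taken from the excerpt.

```python
import math


def box_cox(y, lam):
    """One-parameter Box-Cox transform of a single positive value.

    Assumed form: (y**lam - 1) / lam for lam != 0, and log(y) for lam == 0.
    """
    if y <= 0:
        raise ValueError("Box-Cox requires y > 0")
    if lam == 0:
        # Limiting case: (y**lam - 1) / lam tends to log(y) as lam -> 0,
        # which is what makes the piecewise definition continuous.
        return math.log(y)
    return (y ** lam - 1.0) / lam


# Hypothetical positive data, transformed with lam = 0.5.
data = [0.5, 1.0, 2.0, 4.0]
transformed = [box_cox(y, 0.5) for y in data]
```

Evaluating `box_cox(2.0, lam)` for a very small nonzero `lam` gives a value close to `math.log(2.0)`, which illustrates the continuity at λ = 0 that the definition is built around.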

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Likelihood Function

The likelihood function (often simply called the likelihood) represents the probability of random variable realizations conditional on particular values of the statistical parameters. Thus, when evaluated on a given sample, the likelihood function indicates which parameter values are more likely than others, in the sense that they would have made the observed data more probable. Consequently, the likelihood is often written as \mathcal{L}(\theta \mid X) instead of P(X \mid \theta), to emphasize that it is to be understood as a function of the parameters \theta instead of the random variable X. In maximum likelihood estimation, the arg max of the likelihood function serves as a point estimate for \theta, while local curvature (approximated by the likelihood's Hessian matrix) indicates the estimate's precision. Meanwhile in Bayesian statistics, parameter estimates are derived from the converse of the likelihood, the so-called posterior probability, which is calculated via Bayes' r ...
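
As a rough illustration of the arg max and curvature ideas, the sketch below evaluates a normal log-likelihood with known standard deviation over a grid of candidate means, then approximates the second derivative at the maximum by finite differences. The data values, the grid, and the function name `log_likelihood` are assumptions made for the example.

```python
import math


def log_likelihood(mu, xs, sigma=1.0):
    """Log-likelihood of mean mu for i.i.d. normal data with known sigma."""
    n = len(xs)
    return (-0.5 * n * math.log(2 * math.pi * sigma ** 2)
            - sum((x - mu) ** 2 for x in xs) / (2 * sigma ** 2))


# Hypothetical sample; sigma is treated as known and equal to 1.
xs = [4.1, 5.3, 4.8, 5.9, 5.0]

# Point estimate: arg max of the log-likelihood over a grid of candidates.
grid = [i / 1000 for i in range(3000, 7001)]
mu_hat = max(grid, key=lambda mu: log_likelihood(mu, xs))

# Local curvature: central finite-difference second derivative at mu_hat.
h = 1e-3
curvature = (log_likelihood(mu_hat + h, xs)
             - 2 * log_likelihood(mu_hat, xs)
             + log_likelihood(mu_hat - h, xs)) / h ** 2

# Sharper curvature (more negative) means a more precise estimate.
std_error = 1 / math.sqrt(-curvature)
```

For this model the grid maximum coincides with the sample mean, and the curvature equals -n/sigma^2, so the implied standard error is sigma/sqrt(n).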

Profile Likelihood

The likelihood function (often simply called the likelihood) represents the probability of random variable realizations conditional on particular values of the statistical parameters. Thus, when evaluated on a given sample, the likelihood function indicates which parameter values are more likely than others, in the sense that they would have made the observed data more probable. Consequently, the likelihood is often written as \mathcal{L}(\theta \mid X) instead of P(X \mid \theta), to emphasize that it is to be understood as a function of the parameters \theta instead of the random variable X. In maximum likelihood estimation, the arg max of the likelihood function serves as a point estimate for \theta, while local curvature (approximated by the likelihood's Hessian matrix) indicates the estimate's precision. Meanwhile in Bayesian statistics, parameter estimates are derived from the converse o ...

Cramér–Rao Bound

In estimation theory and statistics, the Cramér–Rao bound (CRB) expresses a lower bound on the variance of unbiased estimators of a deterministic (fixed, though unknown) parameter: the variance of any such estimator is at least as high as the inverse of the Fisher information. Equivalently, it expresses an upper bound on the precision (the inverse of variance) of unbiased estimators: the precision of any such estimator is at most the Fisher information. The result is named in honor of Harald Cramér and C. R. Rao, but was also derived independently by Maurice Fréchet, Georges Darmois, as well as Alexander Aitken and Harold Silverstone. An unbiased estimator that achieves this lower bound is said to be (fully) efficient. Such a solution achieves the lowest possible mean squared error among all unbiased methods, and is therefore the minimum variance unbiased (MVU) estimator. However, in some cases, no unbiased technique exists which achieves the bound. This may occur ...
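
A small simulation can make the bound concrete. The sketch below assumes i.i.d. normal data with known standard deviation, for which the Fisher information about the mean in n observations is n/sigma^2, so the bound is sigma^2/n; the sample mean is unbiased and attains it. The seed, sample size, and number of trials are arbitrary choices for the example.

```python
import random
import statistics

random.seed(0)

sigma, n, trials = 2.0, 25, 20000

# Cramér–Rao bound for the mean of N(mu, sigma^2) with known sigma:
# inverse Fisher information = sigma^2 / n.
crb = sigma ** 2 / n

# Repeatedly draw a sample of size n and record the sample mean.
estimates = [
    statistics.fmean(random.gauss(0.0, sigma) for _ in range(n))
    for _ in range(trials)
]

# Empirical variance of the estimator across trials; it should sit
# close to the bound because the sample mean is efficient here.
empirical_var = statistics.pvariance(estimates)
```

Comparing `empirical_var` with `crb` shows the two agreeing up to simulation noise, which is what efficiency means in this setting.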

Local Asymptotic Normality

In statistics, local asymptotic normality is a property of a sequence of statistical models, which allows this sequence to be asymptotically approximated by a normal location model, after a rescaling of the parameter. An important example in which local asymptotic normality holds is i.i.d. sampling from a regular parametric model. The notion of local asymptotic normality was introduced by Le Cam (1960). Definition. A sequence of parametric statistical models \{P_{n,\theta} : \theta \in \Theta\} is said to be locally asymptotically normal (LAN) at θ if there exist matrices r_n and I_θ and a random vector \Delta_{n,\theta} \sim N(0, I_\theta) such that, for every converging sequence h_n \to h, : \ln \frac{dP_{n,\theta + r_n^{-1} h_n}}{dP_{n,\theta}} = h'\Delta_{n,\theta} - \frac12 h'I_\theta\,h + o_{P_{n,\theta}}(1), where the derivative here is a Radon–Nikodym derivative, which is a formalised version of the likelihood ratio, and where o is a type of big O in probability notation. In other words, the local likelihood ratio must converge in distribution to a normal random variable whose mean is ...

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter θ_0) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to θ_0. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to θ_0 converges to one. In practice one constructs an estimator as a function of an available sample of size n, and then imagines being able to keep collecting data and expanding the sample ad infinitum. In this way one would obtain a sequence of estimates indexed by n, and consistency is a property of what occurs as the sample size “grows to infinity”. If the sequence of estimates can be mathematically shown to converge in probability to the true value ...
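
The idea of a sequence of estimates indexed by n can be sketched numerically. The example below assumes draws from a Uniform(0, 1) distribution, whose true mean is 0.5, and uses the sample mean as the estimator; the seed and the sample sizes are arbitrary. A single simulated path only illustrates the concentration and does not prove convergence in probability.

```python
import random
import statistics

random.seed(1)

TRUE_MEAN = 0.5  # mean of Uniform(0, 1), the parameter being estimated

# Sample sizes standing in for "n grows to infinity".
sample_sizes = [10, 1_000, 100_000]

errors = []
for n in sample_sizes:
    sample = [random.random() for _ in range(n)]
    estimate = statistics.fmean(sample)       # the estimator at size n
    errors.append(abs(estimate - TRUE_MEAN))  # distance from the truth

for n, err in zip(sample_sizes, errors):
    print(f"n = {n:>7}: |estimate - true mean| = {err:.5f}")
```

The standard deviation of the sample mean shrinks like 1/sqrt(n), so the error at the largest sample size is typically far smaller than at the smallest.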

Sign Function

In mathematics, the sign function or signum function (from signum, Latin for "sign") is an odd mathematical function that extracts the sign of a real number. In mathematical expressions the sign function is often represented as \sgn x. To avoid confusion with the sine function, this function is usually called the signum function. Definition. The signum function of a real number x is a piecewise function which is defined as follows: \sgn x := \begin{cases} -1 & \text{if } x < 0, \\ 0 & \text{if } x = 0, \\ 1 & \text{if } x > 0. \end{cases} Properties. Any real number can be expressed as the product of its absolute value and its sign function: x = |x| \sgn x. It follows that whenever x is not equal to 0 we have \sgn x = \frac{x}{|x|} = \frac{|x|}{x}\,. Similarly, for any real number x, |x| = x \sgn x. We can also ascertain that: \sgn x^n = (\sgn x)^n. The signum function is the derivative of the absolute value function, up to (but not including) the indeterminacy at zero. More formally, in integration theory it is a weak derivative, and in convex function ...
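
The piecewise definition and the two identities relating the sign to the absolute value can be checked directly. This is a minimal sketch; the helper name `sgn` and the test values are illustrative.

```python
def sgn(x):
    """Signum of a real number: -1 if x < 0, 0 if x == 0, 1 if x > 0."""
    # Booleans behave as 0/1 in arithmetic, so this covers all three cases.
    return (x > 0) - (x < 0)


values = [-3.5, -1, 0, 2, 7.25]

for x in values:
    # x equals its absolute value times its sign ...
    assert x == abs(x) * sgn(x)
    # ... and the absolute value equals x times its sign.
    assert abs(x) == x * sgn(x)
    # For nonzero x the sign is also x / |x|.
    if x != 0:
        assert sgn(x) == x / abs(x)
```

Writing the function as a difference of two comparisons avoids an explicit branch while still returning exactly -1, 0, or 1.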

Truncated Distribution

In statistics, a truncated distribution is a conditional distribution that results from restricting the domain of some other probability distribution. Truncated distributions arise in practical statistics in cases where the ability to record, or even to know about, occurrences is limited to values which lie above or below a given threshold or within a specified range. For example, if the dates of birth of children in a school are examined, these would typically be subject to truncation relative to those of all children in the area given that the school accepts only children in a given age range on a specific date. There would be no information about how many children in the locality had dates of birth before or after the school's cutoff dates if only a direct approach to the school were used to obtain information. Where sampling is such as to retain knowledge of items that fall outside the required range, without recording the actual values, this is known as censoring, as opposed ...

Box–Cox Distribution

In statistics, the Box–Cox distribution (also known as the power-normal distribution) is the distribution of a random variable X for which the Box–Cox transformation on X follows a truncated normal distribution. It is a continuous probability distribution having probability density function (pdf) given by : f(y) = \frac \exp\left\ for y > 0, where m is the location parameter of the distribution, s is the dispersion, ƒ is the family parameter, I is the indicator function, Φ is the cumulative distribution function of the standard normal distribution, and sgn is the sign function. Special cases: ƒ = 1 gives a truncated normal distribution. ...

Truncated Normal Distribution

In probability and statistics, the truncated normal distribution is the probability distribution derived from that of a normally distributed random variable by bounding the random variable from either below or above (or both). The truncated normal distribution has wide applications in statistics and econometrics. Definitions. Suppose X has a normal distribution with mean \mu and variance \sigma^2 and lies within the interval (a,b), with -\infty \leq a < b \leq \infty. Then X conditional on a < X < b has a truncated normal distribution. Its probability density function, f, for a \leq x \leq b, is given by : f(x;\mu,\sigma,a,b) = \frac{1}{\sigma}\,\frac{\varphi\left(\frac{x-\mu}{\sigma}\right)}{\Phi\left(\frac{b-\mu}{\sigma}\right) - \Phi\left(\frac{a-\mu}{\sigma}\right)} and by f = 0 otherwise. Here, : \varphi(\xi) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{1}{2}\xi^2\right) is t ...
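
The density of a normal variable conditioned on lying in an interval is the normal density divided by the probability mass the untruncated distribution places on that interval. The sketch below implements this with the standard library only; the function names and the example interval [-1, 2] are assumptions for illustration.

```python
import math


def std_normal_pdf(z):
    """Density of the standard normal distribution."""
    return math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)


def std_normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def truncnorm_pdf(x, mu, sigma, a, b):
    """Density of N(mu, sigma^2) truncated to [a, b]; zero outside it."""
    if x < a or x > b:
        return 0.0
    # Probability mass of the untruncated normal inside [a, b].
    mass = std_normal_cdf((b - mu) / sigma) - std_normal_cdf((a - mu) / sigma)
    return std_normal_pdf((x - mu) / sigma) / (sigma * mass)


# Sanity check: the truncated density should integrate to about 1.
a, b, steps = -1.0, 2.0, 10_000
width = (b - a) / steps
total = sum(
    truncnorm_pdf(a + (i + 0.5) * width, 0.0, 1.0, a, b) * width
    for i in range(steps)
)
```

One-sided truncation can be expressed by passing `-math.inf` or `math.inf` as a bound, since `math.erf` returns -1 and 1 at those limits.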

Econometrics

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships [M. Hashem Pesaran (1987). "Econometrics," The New Palgrave: A Dictionary of Economics, v. 2, p. 8 (pp. 8–22). Reprinted in J. Eatwell et al., eds. (1990). Econometrics: The New Palgrave, p. 1 (pp. 1–34). Abstract (The New Palgrave Dictionary of Economics, 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran)]. More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference". An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships". Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used toda ...

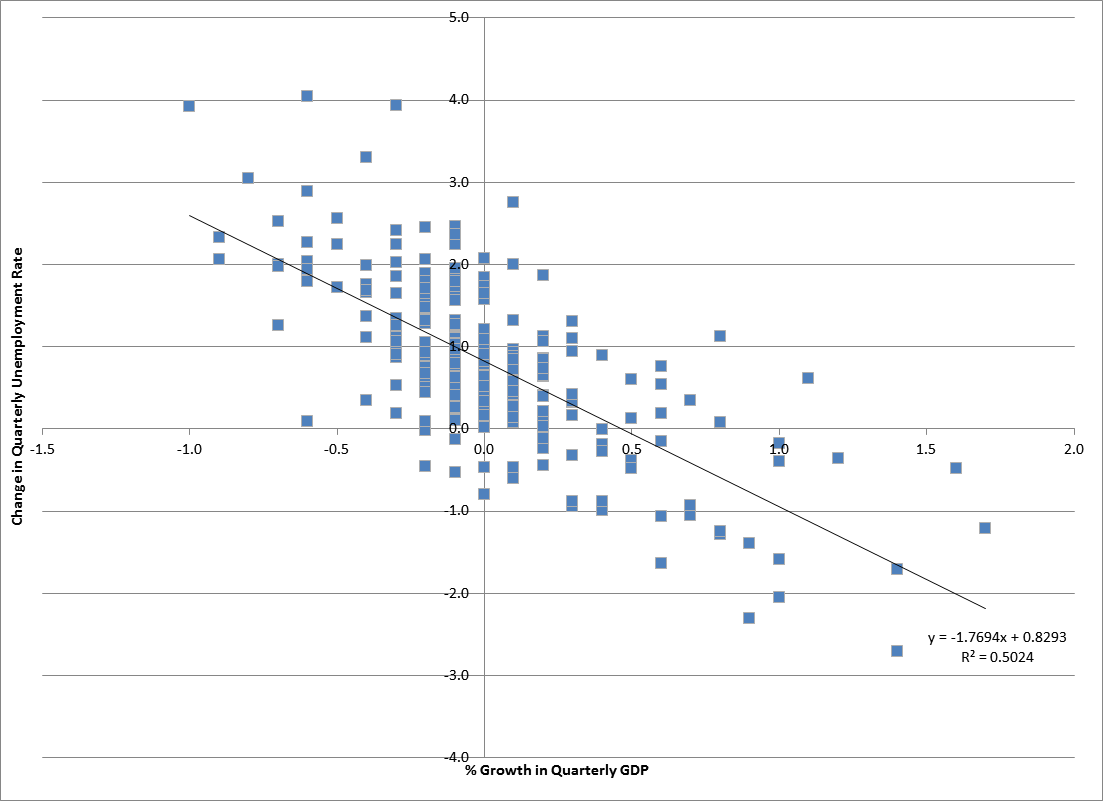

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
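
For a single predictor, the ordinary least squares line has a closed form: the slope is the ratio of the sample covariance of x and y to the sample variance of x, and the intercept makes the line pass through the point of means. The sketch below assumes that simple one-predictor case; the function name `ols_line` and the data are made up for illustration.

```python
def ols_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squares around the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data lying roughly along y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = ols_line(xs, ys)

# Sum of squared differences between the data and the fitted line,
# which is the quantity the method minimizes.
rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
```

Nudging either fitted coefficient away from the returned values and recomputing `rss` gives a larger sum, which is a quick way to see the minimizing property on a concrete data set.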