
Application Delivery Controller

An application delivery controller (ADC) is a computer network device in a datacenter, often part of an application delivery network (ADN), that helps perform common tasks, such as those done by web accelerators, to remove load from the web servers themselves. Many also provide load balancing. ADCs are often placed in the DMZ, between the outer firewall or router and a web farm.

Features

An Application Delivery Controller (ADC) is a type of server that provides a variety of services designed to optimize the distribution of load being handled by backend content servers. An ADC directs web request traffic to optimal data sources in order to remove unnecessary load from web servers. To accomplish this, an ADC includes many OSI layer 3-7 services, including load balancing. ADCs are intended to be deployed within the DMZ of a computer server cluster hosting web applications and/or services. In this sense, an ADC can be envisioned as a drop-in load balancer replacement. But that is w ...

Computer Network

A computer network is a set of computers sharing resources located on or provided by network nodes. The computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies, based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies. The nodes of a computer network can include personal computers, servers, networking hardware, or other specialised or general-purpose hosts. They are identified by network addresses, and may have hostnames. Hostnames serve as memorable labels for the nodes, rarely changed after initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol. Computer networks may be classified by many criteria, including the transmission medium used to carry signals, bandwidth, communications pro ...

A/B Testing

A/B testing (also known as bucket testing, split-run testing, or split testing) is a user experience research methodology. A/B tests consist of a randomized experiment that usually involves two variants (A and B), although the concept can also be extended to multiple variants of the same variable. It includes application of statistical hypothesis testing or "two-sample hypothesis testing" as used in the field of statistics. A/B testing is a way to compare multiple versions of a single variable, for example by testing a subject's response to variant A against variant B, and determining which of the variants is more effective.

Overview

"A/B testing" is a shorthand for a simple randomized controlled experiment, in which a number of samples (e.g. A and B) of a single vector-variable are compared. These values are similar except for one variation which might affect a user's behavior. A/B tests are widely considered the simplest form of controlled experiment, especially when they ...
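
The two-sample hypothesis test mentioned above can be sketched as follows. This is a minimal illustration, assuming the measured outcome is a conversion count per variant and that a pooled two-proportion z-test is an acceptable choice; the function name `two_proportion_z_test` and its parameters are hypothetical, not taken from the source.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for H0: variants A and B convert at the same rate.

    conv_a / conv_b: number of conversions observed for each variant (assumed inputs).
    n_a / n_b: number of users exposed to each variant.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between the variants is unlikely to be due to chance alone; the threshold for "small" is a design decision made before the experiment runs.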

F5 Networks

F5, Inc. is an American technology company specializing in application security, multi-cloud management, online fraud prevention, application delivery networking (ADN), application availability & performance, network security, and access & authorization. F5 is headquartered in Seattle, Washington in F5 Tower, with an additional 75 offices in 43 countries focusing on account management, global services support, product development, manufacturing, software engineering, and administrative jobs. Notable office locations include Spokane, Washington; New York, New York; Boulder, Colorado; London, England; San Jose, California; and San Francisco, California. F5 originally offered application delivery controller (ADC) technology, but expanded into application layer, automation, multi-cloud, and security services. As ransomware, data leaks, DDoS, and other attacks on businesses of all sizes are on the rise, companies such as F5 have continued to reinvent themselves. While the majority of ...

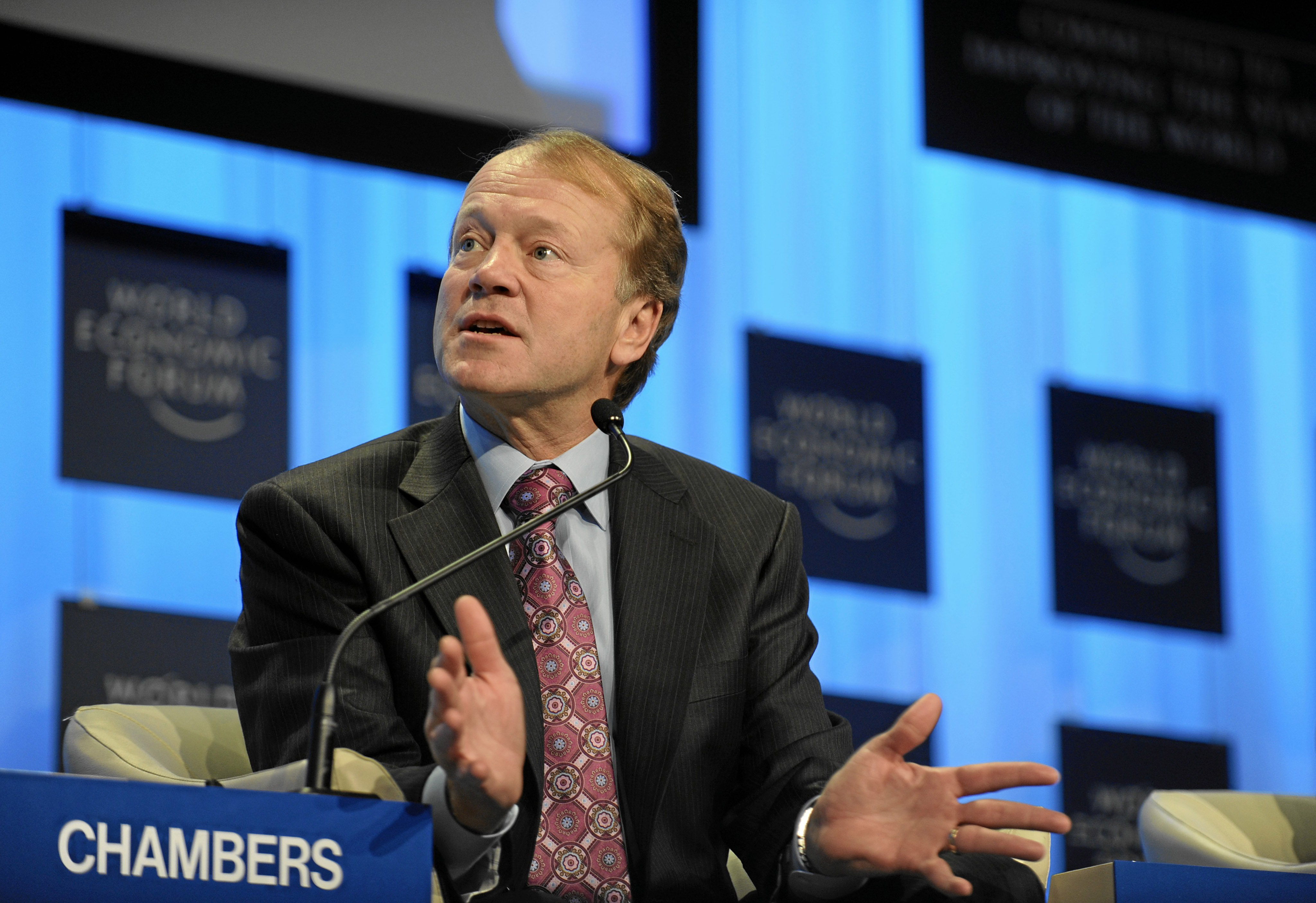

Cisco Systems

Cisco Systems, Inc., commonly known as Cisco, is an American multinational digital communications technology conglomerate headquartered in San Jose, California. Cisco develops, manufactures, and sells networking hardware, software, telecommunications equipment and other high-technology services and products. Cisco specializes in specific tech markets, such as the Internet of Things (IoT), domain security, videoconferencing, and energy management, with leading products including Webex, OpenDNS, Jabber, Duo Security, and Jasper. Cisco is one of the largest technology companies in the world, ranking 74 on the Fortune 100 with over $51 billion in revenue and nearly 80,000 employees. Cisco Systems was founded in December 1984 by Leonard Bosack and Sandy Lerner, two Stanford University computer scientists who ...

Content Switch

A multilayer switch (MLS) is a computer networking device that switches on OSI layer 2 like an ordinary network switch and provides extra functions on higher OSI layers. The MLS was invented by engineers at Digital Equipment Corporation. Switching technologies are crucial to network design, as they allow traffic to be sent only where it is needed in most cases, using fast, hardware-based methods. Switching uses different kinds of network switches. A standard switch is known as a ''layer 2 switch'' and is commonly found in nearly any LAN. ''Layer 3'' or ''layer 4'' switches require advanced technology (see managed switch) and are more expensive and thus are usually only found in larger LANs or in special network environments.

Multilayer switch

Multi-layer switching combines layer 2, 3 and 4 switching technologies and provides high-speed scalability with low latency. Multi-layer switching can move traffic at wire sp ...

SSL Acceleration

TLS acceleration (formerly known as SSL acceleration) is a method of offloading processor-intensive public-key encryption for Transport Layer Security (TLS) and its predecessor Secure Sockets Layer (SSL) to a hardware accelerator. Typically this means having a separate card that plugs into a PCI slot in a computer that contains one or more coprocessors able to handle much of the SSL processing. TLS accelerators may use off-the-shelf CPUs, but most use custom ASIC and RISC chips to do most of the difficult computational work.

Principle of TLS acceleration operation

The most computationally expensive part of a TLS session is the TLS handshake, where the TLS server (usually a webserver) and the TLS client (usually a web browser) agree on a number of parameters that establish the security of the connection. During the TLS handshake the server and the client establish session keys (symmetric keys, used for the duration of a given session), but the encryption and signature of the TLS ...

Application Firewall

An application firewall is a form of firewall that controls input/output or system calls of an application or service. It operates by monitoring and blocking communications based on a configured policy, generally with predefined rule sets to choose from. The application firewall can control communications up to the application layer of the OSI model, which is the highest operating layer, and where it gets its name. The two primary categories of application firewalls are ''network-based'' and ''host-based''.

History

Gene Spafford of Purdue University, Bill Cheswick at AT&T Laboratories, and Marcus Ranum described a third-generation firewall known as an application layer firewall. Marcus Ranum's work, based on the firewall created by Paul Vixie, Brian Reid, and Jeff Mogul, spearheaded the creation of the first commercial product. The product was released by DEC, named the DEC SEAL by Geoff Mulligan - Secure External Access Link. DEC's first major sale was on June 13, 1991, t ...

Traffic Shaping

Traffic shaping is a bandwidth management technique used on computer networks which delays some or all datagrams to bring them into compliance with a desired ''traffic profile''. Traffic shaping is used to optimize or guarantee performance, improve latency, or increase usable bandwidth for some kinds of packets by delaying other kinds. It is often confused with traffic policing, the distinct but related practice of packet dropping and packet marking. The most common type of traffic shaping is application-based traffic shaping. In application-based traffic shaping, fingerprinting tools are first used to identify applications of interest, which are then subject to shaping policies. Some controversial cases of application-based traffic shaping include bandwidth throttling of peer-to-peer file sharing traffic. Many application protocols use encryption to circumvent application-based traffic shaping. Another type of traffic shaping is route-based traffic shaping. Route-based traffi ...
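
To illustrate how shaping delays packets rather than dropping them, here is a minimal simulation sketch. It assumes a token bucket, a common shaping algorithm that the summary above does not itself name; the function `shape` and the parameters `rate` and `burst` are hypothetical names chosen for the example.

```python
def shape(packets, rate, burst):
    """Simulate a token-bucket shaper and return each packet's departure time.

    packets: list of (arrival_time_seconds, size_bytes), sorted by arrival time.
    rate:    token refill rate in bytes per second (the long-term traffic profile).
    burst:   bucket capacity in bytes (the largest burst sent without delay).
    """
    tokens = float(burst)  # the bucket starts full
    clock = 0.0            # time at which `tokens` was last updated
    departures = []
    for arrival, size in packets:
        if size > burst:
            raise ValueError("packet larger than bucket capacity can never be sent")
        # FIFO queue: a packet cannot leave before the one ahead of it.
        start = max(arrival, clock)
        tokens = min(burst, tokens + (start - clock) * rate)
        clock = start
        if tokens < size:
            # Not enough tokens: delay the packet until the bucket refills.
            clock += (size - tokens) / rate
            tokens = size
        tokens -= size
        departures.append(clock)
    return departures


if __name__ == "__main__":
    # Three packets arrive at once; only the first fits the initial burst.
    print(shape([(0.0, 1000), (0.0, 1000), (0.0, 500)], rate=1000, burst=1000))
```

A traffic policer facing the same input would drop or mark the second and third packets instead of delaying them, which is the distinction drawn in the text.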

Multiplexing

In telecommunications and computer networking, multiplexing (sometimes contracted to muxing) is a method by which multiple analog or digital signals are combined into one signal over a shared medium. The aim is to share a scarce resource - a physical transmission medium. For example, in telecommunications, several telephone calls may be carried using one wire. Multiplexing originated in telegraphy in the 1870s, and is now widely applied in communications. In telephony, George Owen Squier is credited with the development of telephone carrier multiplexing in 1910. The multiplexed signal is transmitted over a communication channel such as a cable. The multiplexing divides the capacity of the communication channel into several logical channels, one for each message signal or data stream to be transferred. A reverse process, known as demultiplexing, extracts the original channels on the receiver end. A device that performs the multiplexing is called a multiplexer (MUX), and a dev ...
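
As a rough sketch of multiplexing and demultiplexing, the example below interleaves several logical channels into one stream using fixed time slots and then recovers them. Time-division multiplexing is only one of several schemes and is chosen here as an assumption for illustration; the function names `multiplex` and `demultiplex` are hypothetical.

```python
def multiplex(channels):
    """Interleave equal-length channels into one stream, one slot per channel per frame."""
    if len({len(channel) for channel in channels}) > 1:
        raise ValueError("all channels must carry the same number of samples")
    # zip(*channels) yields one frame at a time; each frame holds one sample per channel.
    return [sample for frame in zip(*channels) for sample in frame]


def demultiplex(stream, n_channels):
    """Recover the original channels by reading every n-th slot of the shared stream."""
    return [stream[slot::n_channels] for slot in range(n_channels)]


if __name__ == "__main__":
    calls = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
    shared = multiplex(calls)
    print(shared)
    print(demultiplex(shared, len(calls)))
```

The receiver needs to know only the number of channels and the slot order to extract each logical channel from the shared medium.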

Caching (computing)

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where d ...
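
The hit and miss behaviour described above can be sketched with a small, bounded cache. This example assumes a least-recently-used (LRU) eviction policy, which exploits temporal locality but is not specified by the text; the class `LRUCache` and its `loader` callback (standing in for the slower data store or computation) are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """A small cache that evicts the least recently used entry when full."""

    def __init__(self, capacity, loader):
        self._capacity = capacity
        self._loader = loader        # called on a miss to fetch or compute the value
        self._data = OrderedDict()   # keys ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._data:
            # Cache hit: serve from the cache and mark the key as recently used.
            self.hits += 1
            self._data.move_to_end(key)
            return self._data[key]
        # Cache miss: fall back to the slow path, then store the result.
        self.misses += 1
        value = self._loader(key)
        self._data[key] = value
        if len(self._data) > self._capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value


if __name__ == "__main__":
    cache = LRUCache(capacity=2, loader=lambda n: n * n)
    for key in (2, 3, 2, 4, 3):
        cache.get(key)
    print(cache.hits, cache.misses)
```

Keeping the capacity small mirrors the point that caches must be relatively small to stay cost-effective; the eviction policy decides which entries are worth that limited space.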

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding; encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a signal. ...
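
A short sketch of the lossless case: the encoder removes statistical redundancy and the decoder restores the input exactly. It uses Python's standard `zlib` module (DEFLATE) purely as one readily available lossless codec; the helper name `roundtrip` and the sample data are assumptions made for the example.

```python
import zlib


def roundtrip(data):
    """Compress `data` losslessly, decompress it again, and return both results."""
    compressed = zlib.compress(data)        # encoder: fewer bits for redundant input
    restored = zlib.decompress(compressed)  # decoder: reverses the process exactly
    return compressed, restored


if __name__ == "__main__":
    original = b"ABABABAB" * 64  # highly redundant sample input
    compressed, restored = roundtrip(original)
    print(len(original), len(compressed), restored == original)
```

Input with little redundancy, such as already-compressed or random data, would shrink far less or not at all, since lossless compression can only exploit redundancy that is actually present.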

Load Balancing (computing)

In computing, load balancing is the process of distributing a set of tasks over a set of resources (computing units), with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle. Load balancing is the subject of research in the field of parallel computers. Two main approaches exist: static algorithms, which do not take into account the state of the different machines, and dynamic algorithms, which are usually more general and more efficient but require exchanges of information between the different computing units, at the risk of a loss of efficiency.

Problem overview

A load-balancing algorithm always tries to answer a specific problem. Among other things, the nature of the tasks, the algorithmic complexity, the hardware architecture on which the algorithms will run as well as required error tolerance, must be taken into account. Therefore c ...
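
The difference between static and dynamic approaches can be sketched with two small schedulers. Round robin stands in for a static algorithm and least-loaded assignment for a dynamic one; both choices, the function names, and the assumption that each task's cost is known in advance are illustrative and not taken from the source.

```python
def round_robin(task_costs, n_workers):
    """Static: assign task i to worker i mod n, ignoring how busy each worker is."""
    loads = [0] * n_workers
    for index, cost in enumerate(task_costs):
        loads[index % n_workers] += cost
    return loads


def least_loaded(task_costs, n_workers):
    """Dynamic: assign each task to whichever worker currently has the least load."""
    loads = [0] * n_workers
    for cost in task_costs:
        # Inspecting `loads` models the state exchange a dynamic algorithm requires.
        target = min(range(n_workers), key=loads.__getitem__)
        loads[target] += cost
    return loads


if __name__ == "__main__":
    costs = [8, 1, 8, 1, 8, 1]  # alternating heavy and light tasks
    print(round_robin(costs, 2))   # per-worker load under the static policy
    print(least_loaded(costs, 2))  # per-worker load under the dynamic policy
```

With alternating heavy and light tasks, the static policy piles every heavy task onto one worker, while the dynamic policy spreads the load more evenly at the price of tracking worker state.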