
Adjusted Mutual Information

In probability theory and information theory, adjusted mutual information (AMI), a variation of mutual information, may be used for comparing clusterings. It corrects for agreement between clusterings that is due solely to chance, similar to the way the adjusted Rand index corrects the Rand index. It is closely related to variation of information (VI): when a similar adjustment is made to the VI index, it becomes equivalent to the AMI. The adjusted measure, however, is no longer a metric. Mutual information of two partitions: Given a set ''S'' of ''N'' elements S=\{s_1, s_2, \ldots, s_N\}, consider two partitions of ''S'', namely U=\{U_1, U_2, \ldots, U_R\} with ''R'' clusters, and V=\{V_1, V_2, \ldots, V_C\} with ''C'' clusters. It is presumed here that the partitions are so-called ''hard clusters''; the partitions are pairwise disjoint: :U_i\cap U_j = \varnothing = V_i\cap V_j for all i\ne j, and complete: :\cup_{i=1}^R U_i=\cup_{j=1}^C V_j=S The mutual information of cluster overlap between ''U'' and ''V'' can be summarized in the form of an ''R''×''C'' contingency table M ...
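The summary above breaks off before giving any formula, so the following is only a minimal sketch under stated assumptions: it builds the contingency table of two hard clusterings and computes their plain (unadjusted) mutual information in nats, using the standard definition. The function names and the label-list input format are illustrative choices, not part of the source, and the chance correction that turns MI into AMI is not implemented here.

```python
from collections import Counter
from math import log


def contingency_table(labels_u, labels_v):
    """Count the elements shared by each pair of clusters (U_i, V_j).

    labels_u[k] and labels_v[k] are the cluster labels of element k in the
    two partitions (an assumed input format). Only non-empty cells are stored.
    """
    if len(labels_u) != len(labels_v):
        raise ValueError("both labelings must cover the same N elements")
    return Counter(zip(labels_u, labels_v))


def mutual_information(labels_u, labels_v):
    """Mutual information (in nats) between two hard clusterings."""
    n = len(labels_u)
    table = contingency_table(labels_u, labels_v)
    row_sums = Counter(labels_u)  # cluster sizes |U_i|
    col_sums = Counter(labels_v)  # cluster sizes |V_j|
    mi = 0.0
    for (i, j), n_ij in table.items():
        # Empty cells never appear in the Counter, so the log is defined.
        mi += (n_ij / n) * log(n * n_ij / (row_sums[i] * col_sums[j]))
    return mi


labels_u = [0, 0, 0, 1, 1, 1]
labels_v = ["a", "a", "b", "b", "c", "c"]
table = contingency_table(labels_u, labels_v)
mi_uv = mutual_information(labels_u, labels_v)
mi_uu = mutual_information(labels_u, labels_u)  # equals the entropy of U
```

Storing only the non-empty cells keeps the table sparse, which is convenient when ''R'' and ''C'' are large but most cluster pairs do not overlap.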

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual informat ...

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair (X,Y) is from the product of the marginal distributions of X and Y. MI is the expected value of the pointwise mutual information (PMI). The quantity was defined and analyzed by Claude Shannon in his landmark paper "A Mathemati ...

Cluster Analysis

Cluster analysis or clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis itself is not one specific algorithm, but the general task to be solved. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or particular statistical distributions. Clustering can therefore be formulated as a multi-object ...

Adjusted Rand Index

Adjustment may refer to: *Adjustment (law), with several meanings *Adjustment (psychology), the process of balancing conflicting needs *Adjustment of observations, in mathematics, a method of solving an overdetermined system of equations *Calibration, in metrology *Spinal adjustment, in chiropractic practice *In statistics, compensation for confounding variables See also *Setting (other) Setting may refer to: * A location (geography) where something is set * Set construction in theatrical scenery * Setting (narrative), the place and time in a work of narrative, especially fiction * Setting up to fail a manipulative technique to eng ...

Rand Index

The RAND Corporation (from the phrase "research and development") is an American nonprofit global policy think tank created in 1948 by Douglas Aircraft Company to offer research and analysis to the United States Armed Forces. It is financed by the U.S. government and private endowment, corporations, universities and private individuals. The company assists other governments, international organizations, private companies and foundations with a host of defense and non-defense issues, including healthcare. RAND aims for interdisciplinary and quantitative problem solving by translating theoretical concepts from formal economics and the physical sciences into novel applications in other areas, using applied science and operations research. Overview RAND has approximately 1,850 employees. Its American locations include: Santa Monica, California (headquarters); Arlington, Virginia; Pittsburgh, Pennsylvania; and Boston, Massachusetts. The RAND Gulf States Policy Institute has an ...

Variation Of Information

In probability theory and information theory, the variation of information or shared information distance is a measure of the distance between two clusterings (partitions of elements). It is closely related to mutual information; indeed, it is a simple linear expression involving the mutual information. Unlike the mutual information, however, the variation of information is a true metric, in that it obeys the triangle inequality (Marina Meila, "Comparing Clusterings by the Variation of Information", Learning Theory and Kernel Machines (2003), vol. 2777, pp. 173–187, Lecture Notes in Computer Science). Definition: Suppose we have two partitions X and Y of a set A into disjoint subsets, namely X = \{X_1, X_2, \ldots, X_k\} and Y = \{Y_1, Y_2, \ldots, Y_l\}. Let: :n = \sum_i |X_i| = \sum_j |Y_j| = |A| :p_i = |X_i| / n and q_j = |Y_j| / n :r_{ij} = |X_i\cap Y_j| / n Then the variation of information between the two partitions is: :\mathrm{VI}(X; Y) = - \sum_{i,j} r_{ij} \left[\log(r_{ij}/p_i)+\log(r_{ij}/q_j) \right]. This is equivalent to ...
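As a rough illustration of the definition above, the sketch below evaluates the variation of information directly from the cluster-size fractions p_i and q_j and the overlap fractions r_ij. The function name and the representation of each partition as a list of per-element labels are assumptions made for the example; natural logarithms are used, so the result is in nats.

```python
from collections import Counter
from math import log


def variation_of_information(labels_x, labels_y):
    """Variation of information (in nats) between two partitions.

    labels_x[k] and labels_y[k] name the block containing element k in
    partitions X and Y respectively (an assumed input format).
    """
    n = len(labels_x)
    if n != len(labels_y):
        raise ValueError("both partitions must cover the same n elements")
    size_x = Counter(labels_x)                  # |X_i|
    size_y = Counter(labels_y)                  # |Y_j|
    overlap = Counter(zip(labels_x, labels_y))  # |X_i ∩ Y_j|, non-empty only
    vi = 0.0
    for (i, j), count in overlap.items():
        r_ij = count / n
        p_i = size_x[i] / n
        q_j = size_y[j] / n
        vi -= r_ij * (log(r_ij / p_i) + log(r_ij / q_j))
    return vi


x = [0, 0, 1, 1]
y = [0, 1, 0, 1]
vi_xy = variation_of_information(x, y)  # two independent, evenly split partitions
vi_xx = variation_of_information(x, x)  # identical partitions
```

Empty intersections contribute nothing to the sum, which is why iterating over the non-empty cells only is sufficient.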

Partition Of A Set

In mathematics, a partition of a set is a grouping of its elements into non-empty subsets, in such a way that every element is included in exactly one subset. Every equivalence relation on a set defines a partition of this set, and every partition defines an equivalence relation. A set equipped with an equivalence relation or a partition is sometimes called a setoid, typically in type theory and proof theory. Definition and notation: A partition of a set ''X'' is a set of non-empty subsets of ''X'' such that every element ''x'' in ''X'' is in exactly one of these subsets (i.e., ''X'' is a disjoint union of the subsets). Equivalently, a family of sets ''P'' is a partition of ''X'' if and only if all of the following conditions hold: *The family ''P'' does not contain the empty set (that is, \emptyset \notin P). *The union of the sets in ''P'' is equal to ''X'' (that is, \textstyle\bigcup_{A\in P} A = X). The sets in ''P'' are said to exhaust or cover ''X''. See also collectively exhaus ...
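The conditions above can be checked mechanically. The following is a small sketch, not a canonical routine: `is_partition` is a hypothetical helper name, and because the list of conditions is cut off in the summary, the pairwise-disjointness check is taken from the earlier statement that every element lies in exactly one subset.

```python
def is_partition(family, universe):
    """Return True if `family` (an iterable of sets) partitions `universe`."""
    blocks = [frozenset(block) for block in family]
    universe = set(universe)

    # Condition 1: the family does not contain the empty set.
    if any(len(block) == 0 for block in blocks):
        return False

    # Condition 2: the union of the blocks equals the whole set X.
    if set().union(*blocks) != universe:
        return False

    # Disjointness: given that the union is X, the block sizes add up to
    # |X| exactly when no element belongs to more than one block.
    return sum(len(block) for block in blocks) == len(universe)


ok = is_partition([{1, 2}, {3}], {1, 2, 3})
overlapping = is_partition([{1, 2}, {2, 3}], {1, 2, 3})
has_empty_block = is_partition([{1}, set(), {2, 3}], {1, 2, 3})
not_covering = is_partition([{1, 2}], {1, 2, 3})
```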

Contingency Table

In statistics, a contingency table (also known as a cross tabulation or crosstab) is a type of table in a matrix format that displays the (multivariate) frequency distribution of the variables. They are heavily used in survey research, business intelligence, engineering, and scientific research. They provide a basic picture of the interrelation between two variables and can help find interactions between them. The term ''contingency table'' was first used by Karl Pearson in "On the Theory of Contingency and Its Relation to Association and Normal Correlation", part of the ''Drapers' Company Research Memoirs Biometric Series I'' published in 1904. A crucial problem of multivariate statistics is finding the (direct-)dependence structure underlying the variables contained in high-dimensional contingency tables. If some of the conditional independences are revealed, then even the storage of the data can be done in a smarter way (see Lauritzen (2002)). In order to do this one can use ...

Entropy (information Theory)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable X, which takes values in the alphabet \mathcal{X} and is distributed according to p: \mathcal{X}\to [0, 1]: \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)], where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defi ...
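To make the definition and the role of the logarithm base concrete, here is a minimal sketch that evaluates the entropy of a finite distribution given as a list of probabilities. The function name, the input format and the tolerance used to validate the input are illustrative assumptions; terms with zero probability are skipped, following the usual convention that 0 log 0 is taken as 0.

```python
from math import e, log


def entropy(probabilities, base=2.0):
    """Shannon entropy of a finite distribution.

    base=2 gives bits (shannons), base=e gives nats, base=10 gives hartleys.
    """
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p = 0 contribute nothing to the sum.
    return -sum(p * log(p, base) for p in probabilities if p > 0)


coin_bits = entropy([0.5, 0.5])         # fair coin, in bits
die_bits = entropy([1 / 6] * 6)         # fair six-sided die, in bits
coin_nats = entropy([0.5, 0.5], base=e)  # same coin, in nats
```

The fair coin comes out lower than the fair die, in line with the usual intuition that more equally likely outcomes mean more uncertainty.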

Similarity Measure

In statistics and related fields, a similarity measure, similarity function, or similarity metric is a real-valued function that quantifies the similarity between two objects. Although no single definition of similarity exists, such measures are usually in some sense the inverse of distance metrics: they take on large values for similar objects and either zero or a negative value for very dissimilar objects. More broadly, though, a similarity function may also satisfy metric axioms. Cosine similarity is a commonly used similarity measure for real-valued vectors, used in (among other fields) information retrieval to score the similarity of documents in the vector space model. In machine learning, common kernel functions such as the RBF kernel can be viewed as similarity functions. Use in clustering: In spectral clustering, a similarity, or affinity, measure is used to transform data to overcome difficulties related to lack of convexity in the shape of the data distribut ...
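Cosine similarity, mentioned above, is simple enough to sketch in a few lines. The function name and the choice to reject zero vectors (for which the measure is undefined) are assumptions of this example rather than anything stated in the summary.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two real-valued vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


same_direction = cosine_similarity([1, 2, 3], [2, 4, 6])
orthogonal = cosine_similarity([1, 0], [0, 1])
opposite = cosine_similarity([1, 0], [-1, 0])
```

The three sample calls cover the extremes: parallel vectors score near 1, orthogonal vectors score 0, and opposite vectors score near -1, matching the description of large values for similar objects and zero or negative values for dissimilar ones.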

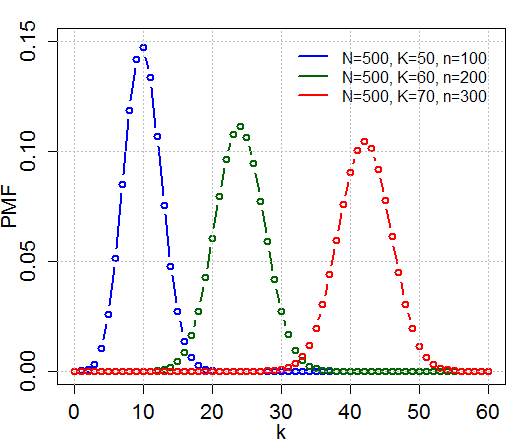

Hypergeometric Distribution

In probability theory and statistics, the hypergeometric distribution is a discrete probability distribution that describes the probability of k successes (random draws for which the object drawn has a specified feature) in n draws, ''without'' replacement, from a finite population of size N that contains exactly K objects with that feature, wherein each draw is either a success or a failure. In contrast, the binomial distribution describes the probability of k successes in n draws ''with'' replacement. Definitions: Probability mass function. The following conditions characterize the hypergeometric distribution: * The result of each draw (the elements of the population being sampled) can be classified into one of two mutually exclusive categories (e.g. Pass/Fail or Employed/Unemployed). * The probability of a success changes on each draw, as each draw decreases the population (''sampling without replacement'' from a finite population). A random variable X follows the hyperg ...
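The summary is truncated before the probability mass function is stated, so the sketch below assumes the standard form, P(X = k) = C(K, k) C(N − K, n − k) / C(N, n), with N, K, n and k as described above. The function name is hypothetical, and `math.comb` requires Python 3.8 or later.

```python
from math import comb


def hypergeom_pmf(k, N, K, n):
    """P(X = k): k successes in n draws without replacement.

    N is the population size, K the number of success objects in it.
    """
    if not (0 <= K <= N and 0 <= n <= N):
        raise ValueError("need 0 <= K <= N and 0 <= n <= N")
    # Outside the support the probability is zero.
    if k < max(0, n - (N - K)) or k > min(n, K):
        return 0.0
    return comb(K, k) * comb(N - K, n - k) / comb(N, n)


# Population of 5 objects, 2 with the feature, 2 draws.
p0 = hypergeom_pmf(0, N=5, K=2, n=2)
p1 = hypergeom_pmf(1, N=5, K=2, n=2)
p2 = hypergeom_pmf(2, N=5, K=2, n=2)
```

In this small case the three probabilities can be verified by hand: of the 10 possible pairs, 3 contain no success object, 6 contain exactly one, and 1 contains both.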