
Activation Function

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on its individual inputs and their weights. Nontrivial problems can be solved using only a few nodes if the activation function is nonlinear. Modern activation functions include the logistic (sigmoid) function used in the 2012 speech recognition model developed by Hinton et al.; the ReLU used in the 2012 AlexNet computer vision model and in the 2015 ResNet model; and the GELU, a smooth version of the ReLU, which was used in the 2018 BERT model.

Comparison of activation functions

Aside from their empirical performance, activation functions also have different mathematical properties:

* Nonlinear: When the activation function is nonlinear, a two-layer neural network can be proven to be a universal function approximator. This is known as the Universal Approximation Theorem. The identity activation function does not satisfy this property. W ...
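As a rough illustration, the following minimal Python sketch models a single node as a weighted sum of its inputs passed through an activation, and implements the three activations named above. The helper name `node_output` and the additive `bias` term are illustrative assumptions that the text does not specify. The logistic function is computed through the equivalent form σ(x) = (1 + tanh(x/2)) / 2 so that large negative inputs do not overflow, and the GELU uses its exact form x·Φ(x), with Φ the standard normal CDF expressed via `math.erf`.

```python
import math
from typing import Callable, Sequence

def logistic(x: float) -> float:
    # sigma(x) = 1 / (1 + e^(-x)), rewritten as (1 + tanh(x/2)) / 2 to avoid overflow
    return 0.5 * (1.0 + math.tanh(0.5 * x))

def relu(x: float) -> float:
    # Rectified linear unit: max(0, x)
    return max(0.0, x)

def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def identity(x: float) -> float:
    # Linear activation: stacking nodes that use only this stays a linear map
    return x

def node_output(inputs: Sequence[float], weights: Sequence[float],
                activation: Callable[[float], float], bias: float = 0.0) -> float:
    # Hypothetical helper: weighted sum of inputs (plus an assumed bias), then the activation
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)
```

For example, `node_output([1.0, -2.0], [0.5, 0.25], logistic)` forms the weighted sum 0.5 - 0.5 = 0 and returns 0.5, while `relu` and `gelu` both return 0.0 for the same inputs.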

Heaviside Function

The Heaviside step function, or the unit step function, usually denoted by H or θ (though other symbols are sometimes used), is a step function named after Oliver Heaviside, the value of which is zero for negative arguments and one for positive arguments. Different conventions concerning the value H(0) are in use. It is an example of the general class of step functions, all of which can be represented as linear combinations of translations of this one.

The function was originally developed in operational calculus for the solution of differential equations, where it represents a signal that switches on at a specified time and stays switched on indefinitely. Heaviside developed the operational calculus as a tool in the analysis of telegraphic communications.

Formulation

Taking the convention that H(0) = 1, the Heaviside function may be defined as:

* a piecewise function: H(x) := \begin{cases} 1, & x \geq 0 \\ 0, & x < 0 \end{cases}
* an indicator function: H(x) := \mathbf{1}_{x \geq 0} = \mathbf{1}_{[0, \infty)}(x)

For the alt ...
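The definition, and the claim that every step function is a linear combination of translations of H, can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the helper names are hypothetical, and the `h0` parameter is an assumption that makes the choice of H(0) explicit (NumPy's `numpy.heaviside(x1, x2)` likewise takes the value at zero as its second argument).

```python
from typing import Sequence, Tuple

def heaviside(x: float, h0: float = 1.0) -> float:
    # Unit step H(x); h0 is the value at x == 0 (default follows the H(0) = 1 convention)
    if x > 0:
        return 1.0
    if x < 0:
        return 0.0
    return h0

def box(x: float, a: float, b: float) -> float:
    # Rectangular pulse on [a, b) built from two translated steps: H(x - a) - H(x - b)
    return heaviside(x - a) - heaviside(x - b)

def step_function(x: float, jumps: Sequence[Tuple[float, float]]) -> float:
    # Hypothetical helper: linear combination of translated unit steps, sum_k c_k * H(x - t_k)
    return sum(c * heaviside(x - t) for t, c in jumps)
```

With `jumps = [(0, 1.0), (2, 3.0)]`, the function is 0 for x < 0, 1 on [0, 2), and 4 from x = 2 onward.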

Polyharmonic Spline

In applied mathematics, polyharmonic splines are used for function approximation and data interpolation. They are especially useful for interpolating and fitting scattered data in many dimensions. Special cases include thin plate splines and natural cubic splines in one dimension.

Definition

A polyharmonic spline is a linear combination of polyharmonic radial basis functions (RBFs), denoted by \varphi, plus a polynomial term:

y(\mathbf{x}) = \sum_{i=1}^{N} w_i \, \varphi(\lVert \mathbf{x} - \mathbf{c}_i \rVert) + \mathbf{v}^{\textrm{T}} \begin{bmatrix} 1 \\ \mathbf{x} \end{bmatrix}

where

* \mathbf{x} = [x_1 \ x_2 \ \cdots \ x_d]^{\textrm{T}} (\textrm{T} denotes matrix transpose, meaning \mathbf{x} is a column vector) is a real-valued vector of d independent variables,
* \mathbf{c}_i = [c_{1,i} \ c_{2,i} \ \cdots \ c_{d,i}]^{\textrm{T}} are N vectors of the same size as \mathbf{x} (often called centers) that the curve or surface must interpolate,
* \mathbf{w} = [w_1 \ w_2 \ \cdots \ w_N]^{\textrm{T}} are the N weights of the RBFs,
* \mathbf{v} = [v_1 \ v_2 \ \cdots \ v_{d+1}]^{\textrm{T}} are the d+1 weights of the polynomial.

The polynomial with the coefficients \mathbf{v} improves fitting accuracy for polyharmonic smoothing splines and al ...
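The definition becomes an interpolation routine once two conditions are imposed: y(c_i) = f_i at every center, and, as is standard for polyharmonic splines, the side condition that the RBF weights are orthogonal to the polynomial terms (Σ w_i = 0 and Σ w_i c_i = 0). Together these give a square linear system for w and v. The sketch below assumes NumPy and the usual polyharmonic kernel φ(r) = r^k for odd k and φ(r) = r^k ln r for even k (k = 2 in two dimensions gives thin plate splines, and k = 3 in one dimension gives natural cubic splines). The helper names are hypothetical, and the routine performs exact interpolation rather than smoothing.

```python
import numpy as np

def polyharmonic_kernel(r, k=3):
    # phi(r) = r^k for odd k, r^k * ln(r) for even k, with phi(0) = 0
    r = np.asarray(r, dtype=float)
    if k % 2 == 1:
        return r ** k
    with np.errstate(divide="ignore", invalid="ignore"):
        vals = r ** k * np.log(r)
    return np.where(r > 0, vals, 0.0)

def _as_points(x):
    # Accept shape (M, d), or a flat sequence of scalars when d = 1
    x = np.asarray(x, dtype=float)
    return x.reshape(-1, 1) if x.ndim == 1 else x

def fit_polyharmonic(centers, values, k=3):
    """Hypothetical helper: solve for RBF weights w and polynomial weights v."""
    C = _as_points(centers)                       # (N, d)
    f = np.asarray(values, dtype=float)           # (N,)
    n, d = C.shape
    r = np.linalg.norm(C[:, None, :] - C[None, :, :], axis=-1)
    A = polyharmonic_kernel(r, k)                 # (N, N) kernel matrix
    V = np.hstack([np.ones((n, 1)), C])           # (N, d + 1), rows [1, c_i^T]
    # Interpolation conditions on top, orthogonality conditions V^T w = 0 below
    M = np.block([[A, V], [V.T, np.zeros((d + 1, d + 1))]])
    rhs = np.concatenate([f, np.zeros(d + 1)])
    sol = np.linalg.solve(M, rhs)
    return C, sol[:n], sol[n:], k                 # centers, w, v, k

def eval_polyharmonic(model, x):
    """y(x) = sum_i w_i * phi(||x - c_i||) + v^T [1; x] for each row of x."""
    C, w, v, k = model
    X = _as_points(x)
    r = np.linalg.norm(X[:, None, :] - C[None, :, :], axis=-1)   # (M, N)
    return polyharmonic_kernel(r, k) @ w + np.hstack([np.ones((len(X), 1)), X]) @ v
```

Because the polynomial part includes 1 and x, data sampled from a linear function is reproduced exactly, with the RBF weights w coming out (numerically) zero.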

Gaussian Function

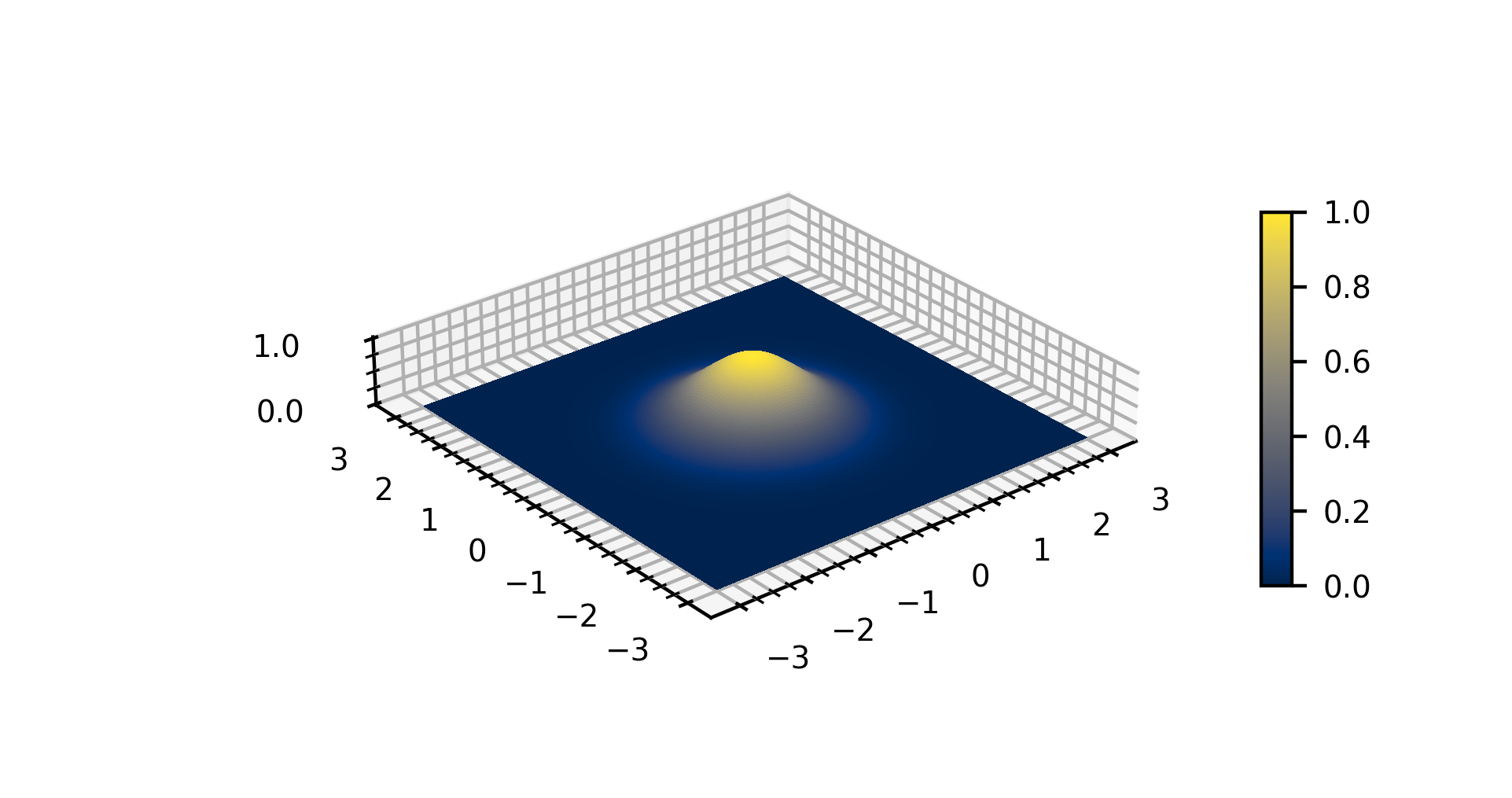

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form f(x) = \exp(-x^2) and with parametric extension f(x) = a \exp\left( -\frac{(x - b)^2}{2c^2} \right) for arbitrary real constants a, b and non-zero c. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter a is the height of the curve's peak, b is the position of the center of the peak, and c (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell". Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value μ = b and variance σ^2 = c^2. In this case, the Gaussian is of the form g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left( -\frac{1}{2} \frac{(x - \mu)^2}{\sigma^2} \right). Gaussian functions are widely used in statistics to describ ...
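The next sketch writes out the parametric form and the normal density as its special case with a = 1/(σ√(2π)), b = μ and c = σ. The helper names are hypothetical.

```python
import math

def gaussian(x: float, a: float = 1.0, b: float = 0.0, c: float = 1.0) -> float:
    # f(x) = a * exp(-(x - b)^2 / (2 c^2)); c must be non-zero
    if c == 0:
        raise ValueError("c must be non-zero")
    return a * math.exp(-((x - b) ** 2) / (2.0 * c * c))

def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    # Normal density as the special case a = 1 / (sigma * sqrt(2 pi)), b = mu, c = sigma
    return gaussian(x, a=1.0 / (sigma * math.sqrt(2.0 * math.pi)), b=mu, c=sigma)
```

The choice a = 1/(σ√(2π)) is what makes the area under the curve equal to 1. For example, a midpoint-rule sum of `normal_pdf` over [-10, 10] with step 0.001 comes out within about 1e-6 of 1, and the peak value `gaussian(b, a, b, c)` is exactly a.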

Radial Basis Function Network

In the field of mathematical modeling, a radial basis function network is an artificial neural network that uses radial basis functions as activation functions. The output of the network is a linear combination of radial basis functions of the inputs and neuron parameters. Radial basis function networks have many uses, including function approximation, time series prediction, classification, and system control. They were first formulated in a 1988 paper by Broomhead and Lowe, both researchers at the Royal Signals and Radar Establishment.

Network architecture

Radial basis function (RBF) networks typically have three layers: an input layer, a hidden layer with a non-linear RBF activation function, and a linear output layer. The input can be modeled as a vector of real numbers \mathbf{x} \in \mathbb{R}^n. The output of the network is then a scalar function of the input vector, \varphi : \mathbb{R}^n \to \mathbb{R}, and is given by

\varphi(\mathbf{x}) = \sum_{i=1}^{N} a_i \, \rho(\lVert \mathbf{x} - \mathbf{c}_i \rVert) ...
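Here is a minimal NumPy sketch of the three-layer architecture. It assumes a Gaussian basis ρ(r) = exp(-β r²), since the text does not fix ρ, along with fixed, hand-chosen centers c_i. Because the output layer is linear, the weights a_i can be fitted by ordinary least squares once the centers are chosen. The function names and the β parameter are illustrative.

```python
import numpy as np

def hidden_layer(X, centers, beta=1.0):
    # rho(||x - c_i||) for every input row x and center c_i, with Gaussian rho (assumed)
    X = np.asarray(X, dtype=float)          # (M, n)
    C = np.asarray(centers, dtype=float)    # (N, n)
    sq_dist = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=-1)   # (M, N)
    return np.exp(-beta * sq_dist)

def rbf_network(X, centers, a, beta=1.0):
    # Linear output layer: phi(x) = sum_i a_i * rho(||x - c_i||)
    return hidden_layer(X, centers, beta) @ np.asarray(a, dtype=float)

def fit_output_weights(X, y, centers, beta=1.0):
    # With the centers fixed, the output weights solve a linear least-squares problem
    H = hidden_layer(X, centers, beta)
    a, *_ = np.linalg.lstsq(H, np.asarray(y, dtype=float), rcond=None)
    return a
```

For instance, placing one center on each input of the XOR problem, X = [[0, 0], [0, 1], [1, 0], [1, 1]] with targets 0, 1, 1, 0, lets the fitted network reproduce the targets exactly. No purely linear function of the inputs can do that.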

Radial Basis Function

In mathematics, a radial basis function (RBF) is a real-valued function \varphi whose value depends only on the distance between the input and some fixed point. That point is either the origin, so that \varphi(\mathbf{x}) = \hat\varphi(\lVert \mathbf{x} \rVert), or some other fixed point \mathbf{c}, called a center, so that \varphi(\mathbf{x}) = \hat\varphi(\lVert \mathbf{x} - \mathbf{c} \rVert). Any function \varphi that satisfies the property \varphi(\mathbf{x}) = \hat\varphi(\lVert \mathbf{x} \rVert) is a radial function. The distance is usually Euclidean distance, although other metrics are sometimes used. Radial basis functions are often used as a collection \{\varphi_k\}_k which forms a basis for some function space of interest, hence the name.

Sums of radial basis functions are typically used to approximate given functions. This approximation process can also be interpreted as a simple kind of neural network; this was the context in which they were originally applied to machine learning, in work by David Broomhead and David Low ...
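The defining property, that φ(x) depends on x only through its distance to a center, can be expressed as a small factory in Python. The helper names are hypothetical. The Gaussian profile used below is just one assumed example of a profile φ̂, and the Manhattan metric is included only to show that the distance does not have to be Euclidean.

```python
import math
from typing import Callable, Sequence

Point = Sequence[float]

def make_rbf(phi_hat: Callable[[float], float], center: Point,
             dist: Callable[[Point, Point], float] = math.dist) -> Callable[[Point], float]:
    # phi(x) = phi_hat(dist(x, c)): the value depends only on the distance to the center
    def phi(x: Point) -> float:
        return phi_hat(dist(x, center))
    return phi

def manhattan(p: Point, q: Point) -> float:
    # An alternative (L1) metric
    return sum(abs(a - b) for a, b in zip(p, q))

def rbf_sum(weights: Sequence[float], basis: Sequence[Callable[[Point], float]], x: Point) -> float:
    # Weighted sum of radial basis functions, the usual form of an RBF approximation
    return sum(w * phi(x) for w, phi in zip(weights, basis))
```

Under the Euclidean default, points at the same distance from the center, such as (3, 4) and (5, 0) around the origin, get identical values. Under `manhattan`, the level sets are diamonds rather than circles.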

ReLU And GELU

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function is an activation function defined as the non-negative part of its argument, i.e., the ramp function:

\operatorname{ReLU}(x) = x^+ = \max(0, x) = \frac{x + |x|}{2} = \begin{cases} x & \text{if } x > 0, \\ 0 & x \le 0 \end{cases}

where x is the input to a neuron. This is analogous to half-wave rectification in electrical engineering. ReLU is one of the most popular activation functions for artificial neural networks, and finds application in computer vision and speech recognition (Andrew L. Maas, Awni Y. Hannun, Andrew Y. Ng (2014), Rectifier Nonlinearities Improve Neural Network Acoustic Models) using deep neural nets, and in computational neuroscience.

History

The ReLU was first used by Alston Householder in 1941 as a mathematical abstraction of biological neural networks. Kunihiko Fukushima in 1969 used ReLU in the context of visual feature extraction in hierarchical neural networks. Thirty years ...
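The four expressions in the definition are equal, and a quick Python check makes that concrete. The sketch also includes the derivative: for x ≠ 0, the derivative of max(0, x) is the Heaviside step H(x). At x = 0 the derivative is undefined, so the value 0.0 returned there is an assumed convention, not part of the definition. All function names are illustrative.

```python
def relu_max(x: float) -> float:
    # max(0, x)
    return max(0.0, x)

def relu_abs(x: float) -> float:
    # (x + |x|) / 2
    return (x + abs(x)) / 2.0

def relu_cases(x: float) -> float:
    # x if x > 0, else 0
    return x if x > 0 else 0.0

def relu_grad(x: float) -> float:
    # For x != 0 the derivative is the Heaviside step H(x).
    # At x == 0 it is undefined; returning 0.0 there is an assumed convention.
    return 1.0 if x > 0 else 0.0
```

Since the function is piecewise linear, its gradient is either 0 or 1. This makes it cheap to compute during backpropagation.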

Slope

In mathematics, the slope or gradient of a line is a number that describes the direction of the line on a plane. Often denoted by the letter m, slope is calculated as the ratio of the vertical change to the horizontal change ("rise over run") between two distinct points on the line, giving the same number for any choice of points. The line may be physical (as set by a road surveyor), pictorial (as in a diagram of a road or roof), or abstract. An application of the mathematical concept is found in the grade or gradient in geography and civil engineering.

The steepness, incline, or grade of a line is the absolute value of its slope: a greater absolute value indicates a steeper line. The line trend is defined as follows:

* An "increasing" or "ascending" line goes from left to right and has positive slope: m > 0.
* A "decreasing" or "descending" line goes from left to right ...
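"Rise over run" translates directly into code, as this short Python sketch shows. The helper names are hypothetical. Treating vertical lines (equal x-coordinates) as having undefined slope and labelling m = 0 as "horizontal" are standard facts added here for completeness.

```python
from typing import Tuple

Point = Tuple[float, float]

def slope(p: Point, q: Point) -> float:
    # m = rise / run = (y2 - y1) / (x2 - x1)
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        raise ValueError("points must have distinct x-coordinates (slope is undefined)")
    return (y2 - y1) / (x2 - x1)

def steepness(m: float) -> float:
    # Steepness (grade) is the absolute value of the slope
    return abs(m)

def trend(m: float) -> str:
    # Classify the line by the sign of its slope
    if m > 0:
        return "increasing"
    if m < 0:
        return "decreasing"
    return "horizontal"
```

Any two distinct points on the line y = 2x, for example (0, 0) and (2, 4) or (1, 2) and (3, 6), give the same slope m = 2.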

Sigmoid Function

A sigmoid function is any mathematical function whose graph has a characteristic S-shaped or sigmoid curve. A common example of a sigmoid function is the logistic function, which is defined by the formula

\sigma(x) = \frac{1}{1 + e^{-x}} = \frac{e^x}{1 + e^x} = 1 - \sigma(-x).

In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as a synonym for "logistic function". Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of x) and the ogee curve (used in the spillway of some dams). Sigmoid functions have a domain of all real numbers, with a return (response) value that is commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (y axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions ...
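Both forms in the defining formula are useful in practice. In Python, computing 1 / (1 + e^(-x)) naively raises `OverflowError` for large negative x, because `math.exp` overflows. The minimal sketch below avoids this by choosing the form whose exponent is non-positive. The function name is illustrative. The check against the hyperbolic tangent uses the identity tanh(x) = 2σ(2x) - 1, which relates the 0-to-1 range to the −1-to-1 range mentioned above.

```python
import math

def logistic(x: float) -> float:
    # Numerically stable logistic function sigma(x).
    # For x >= 0 use 1 / (1 + e^-x); for x < 0 use the equivalent e^x / (1 + e^x),
    # so math.exp only ever receives a non-positive argument and cannot overflow.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)
```

Inputs of extreme magnitude simply saturate: `logistic(1000.0)` returns 1.0 and `logistic(-1000.0)` returns 0.0, with no exception raised.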

Neuron

A neuron (American English), neurone (British English), or nerve cell, is an excitable cell that fires electric signals called action potentials across a neural network in the nervous system. Neurons are located in the nervous system and help to receive and conduct impulses. Neurons communicate with other cells via synapses, which are specialized connections that commonly use minute amounts of chemical neurotransmitters to pass the electric signal from the presynaptic neuron to the target cell through the synaptic gap. Neurons are the main components of nervous tissue in all animals except sponges and placozoans. Plants and fungi do not have nerve cells. Molecular evidence suggests that the ability to generate electric signals first appeared in evolution some 700 to 800 million years ago, during the Tonian period. Predecessors of neurons were the peptidergic secretory cells. They eventually ga ...

Binary Function

In mathematics, a binary function (also called bivariate function, or function of two variables) is a function that takes two inputs. Precisely stated, a function f is binary if there exist sets X, Y, Z such that

f \colon X \times Y \rightarrow Z

where X \times Y is the Cartesian product of X and Y.

Alternative definitions

Set-theoretically, a binary function can be represented as a subset of the Cartesian product X \times Y \times Z, where (x, y, z) belongs to the subset if and only if f(x, y) = z. Conversely, a subset R defines a binary function if and only if for any x \in X and y \in Y, there exists a unique z \in Z such that (x, y, z) belongs to R. The value f(x, y) is then defined to be this z.

Alternatively, a binary function may be interpreted as simply a function from X \times Y to Z. Even when thought of this way, however, one generally writes f(x, y) instead of f((x, y)). (That is, the same pair of parentheses is used to indicate both function application and the formation ...
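The set-theoretic view can be checked mechanically on finite sets, as in the following Python sketch. It represents f as a set of triples and tests the "exactly one z for each (x, y)" condition. The helper names are hypothetical. Because Z is not passed in, the sketch assumes the third components already lie in Z and only checks that each pair (x, y) comes from X × Y.

```python
from itertools import product

def graph_of(f, X, Y):
    # Set-theoretic representation: {(x, y, f(x, y)) : x in X, y in Y}
    return {(x, y, f(x, y)) for x, y in product(X, Y)}

def is_binary_function(R, X, Y):
    # R defines a binary function iff every (x, y) in X x Y has exactly one z with (x, y, z) in R
    in_domain = all(a in X and b in Y for (a, b, _) in R)
    return in_domain and all(
        sum(1 for (a, b, _) in R if (a, b) == (x, y)) == 1
        for x, y in product(X, Y)
    )

def evaluate(R, x, y):
    # f(x, y) is the unique z such that (x, y, z) belongs to R
    (z,) = [c for (a, b, c) in R if (a, b) == (x, y)]
    return z
```

Adding a second triple for an existing pair, or removing the only triple for some pair, makes `is_binary_function` return False. This corresponds to the uniqueness and existence halves of the definition.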