
ANCOVA

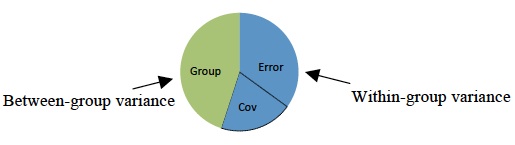

Analysis of covariance (ANCOVA) is a general linear model that blends ANOVA and regression. ANCOVA evaluates whether the means of a dependent variable (DV) are equal across levels of a categorical independent variable (IV), often called a treatment, while statistically controlling for the effects of other continuous variables that are not of primary interest, known as covariates (CV) or nuisance variables. Mathematically, ANCOVA decomposes the variance in the DV into variance explained by the CV(s), variance explained by the categorical IV, and residual variance. Intuitively, ANCOVA can be thought of as 'adjusting' the DV by the group means of the CV(s). The ANCOVA model assumes a linear relationship between the response (DV) and covariate (CV): $y_{ij} = \mu + \tau_i + \mathrm{B}(x_{ij} - \overline{x}) + \epsilon_{ij}$. In this equation, the DV, $y_{ij}$, is the jth observation under the ith categorical group; the CV, $x_{ij}$, is the jth observation of the covariate under the ith group. Variables in th ...
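The 'adjusting' idea can be sketched numerically. The following is a minimal illustration, assuming a single covariate and a common (pooled within-group) slope; the function name `ancova_adjusted_means` and the toy data are invented for this example and do not come from the source.

```python
from statistics import fmean

def ancova_adjusted_means(groups):
    """Return the pooled within-group slope and covariate-adjusted group means.

    groups: dict mapping a group label to a list of (x, y) pairs, where x is
    the covariate and y is the dependent variable (hypothetical layout).
    """
    grand_x = fmean([x for pairs in groups.values() for x, _ in pairs])
    sxy = 0.0
    sxx = 0.0
    group_means = {}
    for label, pairs in groups.items():
        mx = fmean([x for x, _ in pairs])
        my = fmean([y for _, y in pairs])
        group_means[label] = (mx, my)
        # Accumulate within-group cross-products and squared deviations.
        sxy += sum((x - mx) * (y - my) for x, y in pairs)
        sxx += sum((x - mx) ** 2 for x, _ in pairs)
    slope = sxy / sxx
    # Shift each group mean of y to the value expected at the grand mean of x.
    adjusted = {
        label: my - slope * (mx - grand_x)
        for label, (mx, my) in group_means.items()
    }
    return slope, adjusted

# Toy data (made up): both groups follow y = 2x + c with different intercepts.
toy = {
    "A": [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)],
    "B": [(3.0, 10.0), (4.0, 12.0), (5.0, 14.0)],
}
slope, adjusted = ancova_adjusted_means(toy)
raw_gap = fmean([y for _, y in toy["B"]]) - fmean([y for _, y in toy["A"]])
adjusted_gap = adjusted["B"] - adjusted["A"]
```

In this toy case the raw difference in group means is larger than the adjusted difference, because part of the raw gap is explained by the groups having different covariate values.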

MANCOVA

Multivariate analysis of covariance (MANCOVA) is an extension of analysis of covariance (ANCOVA) methods to cover cases where there is more than one dependent variable and where the control of concomitant continuous independent variables (covariates) is required. The most prominent benefit of the MANCOVA design over the simple MANOVA is the 'factoring out' of noise or error that has been introduced by the covariates. A commonly used multivariate version of the ANOVA F-statistic is Wilks' Lambda (Λ), which represents the ratio between the error variance (or covariance) and the effect variance (or covariance) (Statsoft Textbook, ANOVA/MANOVA). Goals: Similarly to all tests in the family, the primary ...
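As a rough numerical illustration of Wilks' Lambda, the sketch below uses the common determinant form Λ = det(E) / det(E + H), where E is the error sums-of-squares-and-cross-products matrix and H is the effect (hypothesis) matrix. The matrices and the helper `det2` are made up for this example; the snippet above does not give the formula, so treat this as an assumption about the usual definition.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    return a * d - b * c

# Hypothetical SSCP matrices for two dependent variables.
E = [[4.0, 1.0], [1.0, 3.0]]   # error (within) matrix
H = [[2.0, 0.0], [0.0, 1.0]]   # effect (between) matrix

# Total matrix is the element-wise sum E + H.
T = [[e + h for e, h in zip(e_row, h_row)] for e_row, h_row in zip(E, H)]

# Values near 1 mean the effect adds little beyond error; values near 0
# mean the effect accounts for most of the generalized variance.
wilks_lambda = det2(E) / det2(T)
```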

General Linear Model

The general linear model or general multivariate regression model is a compact way of simultaneously writing several multiple linear regression models. In that sense it is not a separate statistical linear model. The various multiple linear regression models may be compactly written as $\mathbf{Y} = \mathbf{X}\mathbf{B} + \mathbf{U}$, where Y is a matrix with series of multivariate measurements (each column being a set of measurements on one of the dependent variables), X is a matrix of observations on independent variables that might be a design matrix (each column being a set of observations on one of the independent variables), B is a matrix containing parameters that are usually to be estimated and U is a matrix containing errors (noise). The errors are usually assumed to be uncorrelated across measurements, and follow a multivariate normal distribution. If the errors do not follow a multivariate normal distribution, generalized linear models may be used to relax assumptions about Y and ...
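To make the matrix form concrete, here is a small sketch that estimates B by ordinary least squares via the normal equations, B̂ = (XᵀX)⁻¹XᵀY. It assumes a design matrix with an intercept column and one predictor, so XᵀX is 2x2; the helper names and the noise-free toy data are invented for illustration.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def solve_2x2(a, rhs):
    """Solve a @ B = rhs for B when a is an invertible 2x2 matrix."""
    (p, q), (r, s) = a
    det = p * s - q * r
    inverse = [[s / det, -q / det], [-r / det, p / det]]
    return matmul(inverse, rhs)

# Design matrix X: intercept column plus one predictor (toy values).
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
# Y holds two dependent variables, one per column:
# column 0 is 1 + 2x, column 1 is 5 - x (no noise, so U is zero here).
Y = [[1.0, 5.0], [3.0, 4.0], [5.0, 3.0], [7.0, 2.0]]

Xt = transpose(X)
B_hat = solve_2x2(matmul(Xt, X), matmul(Xt, Y))
# Each column of B_hat holds (intercept, slope) for one dependent variable.
```

Because both dependent variables share the same X, a single solve yields the coefficients for all of them at once, which is the sense in which the general linear model writes several regressions compactly.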

ANOVA

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the t-test beyond two means. In other words, the ANOVA is used to test the difference between two or more means. History: While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testing i ...
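The partitioning of variation described above can be sketched for the one-way case. This is a minimal example under the usual one-way layout; the function name `one_way_anova` and the data are made up for illustration.

```python
from statistics import fmean

def one_way_anova(groups):
    """Return (SS_between, SS_within, F) for a list of groups of scores."""
    all_values = [v for g in groups for v in g]
    grand_mean = fmean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of scores around their own group mean.
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, f_stat

data = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [6.0, 7.0, 8.0]]
ss_between, ss_within, f_stat = one_way_anova(data)

# The two components add up to the total sum of squares.
grand = fmean([v for g in data for v in g])
ss_total = sum((v - grand) ** 2 for g in data for v in g)
```

The F statistic would then be compared against an F distribution with (groups − 1, N − groups) degrees of freedom; that lookup is omitted here to keep the sketch dependency-free.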

Independent Variable

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of some observed value of interest (e.g. human population size) to predict future values (the dependent variable). Of the two, it is always the dependent variable whose variation is being studied, by altering inputs, also known as regressors in a statistical context. In an experiment, any variable that can be attributed a value without attributing a value to any other variable is called an ind ...

Moderation (statistics)

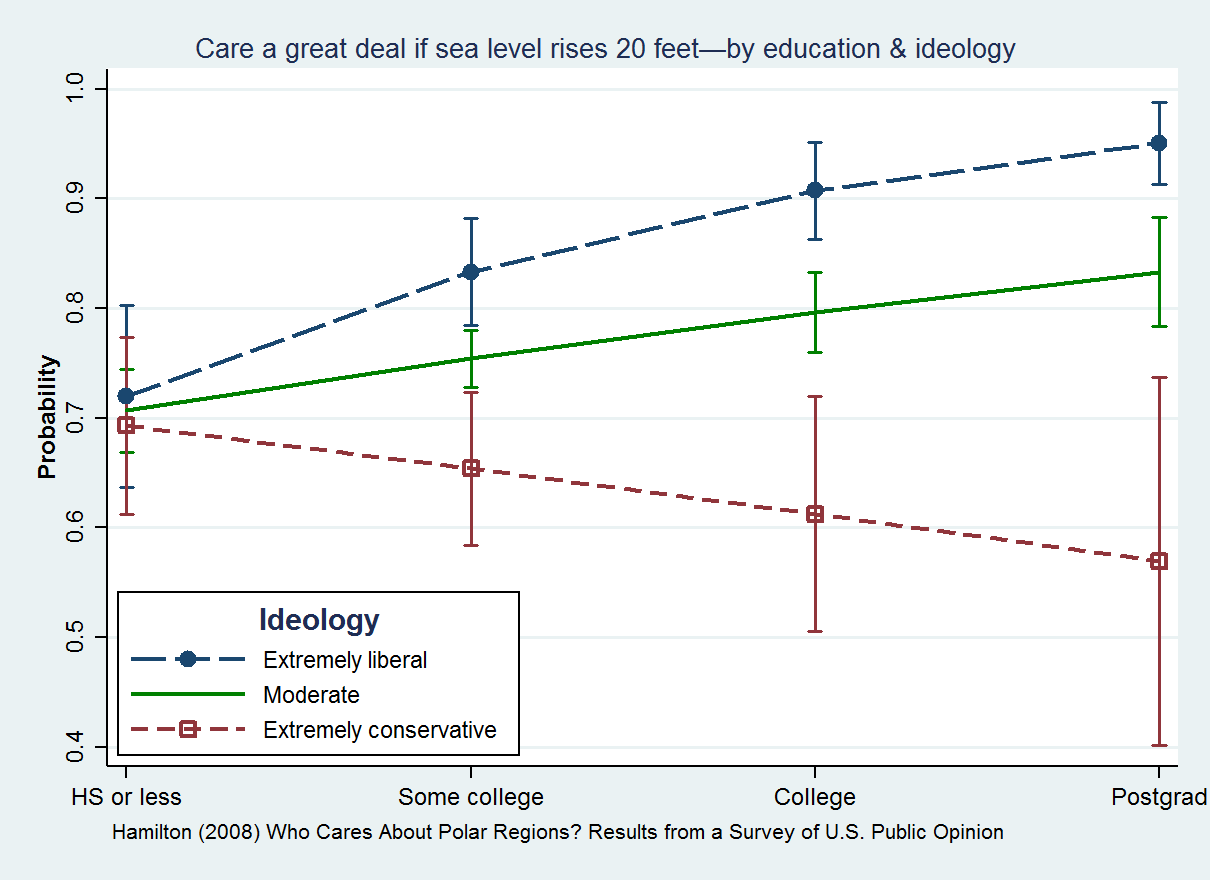

In statistics and regression analysis, moderation (also known as effect modification) occurs when the relationship between two variables depends on a third variable. The third variable is referred to as the moderator variable (or effect modifier) or simply the moderator (or modifier). The effect of a moderating variable is characterized statistically as an interaction; that is, a categorical (e.g., sex, ethnicity, class) or continuous (e.g., age, level of reward) variable that is associated with the direction and/or magnitude of the relation between dependent and independent variables. Specifically within a correlational analysis framework, a moderator is a third variable that affects the zero-order correlation between two other variables, or the value of the slope of the dependent variable on the independent variable. In analysis of variance (ANOVA) terms, a basic moderator effect can be represented as an interaction between a focal independent variable and a factor that specifie ...
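A simple way to see moderation is to compute the slope of the dependent variable on the independent variable separately at each level of a categorical moderator. The sketch below does this for made-up data; the names `slope_of` and `by_moderator` are hypothetical.

```python
from statistics import fmean

def slope_of(pairs):
    """Least-squares slope of y on x for a list of (x, y) pairs."""
    mx = fmean([x for x, _ in pairs])
    my = fmean([y for _, y in pairs])
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    return sxy / sxx

# Toy data: (independent variable, dependent variable) pairs,
# grouped by the level of a categorical moderator.
by_moderator = {
    "low": [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)],
    "high": [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0)],
}
slopes = {level: slope_of(pairs) for level, pairs in by_moderator.items()}

# A nonzero difference in slopes is what a moderation (interaction)
# term in a regression model would capture.
slope_difference = slopes["high"] - slopes["low"]
```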

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
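The ordinary least squares criterion mentioned above can be illustrated for a single predictor. This is a minimal sketch with invented data; `fit_line` and `sse` are hypothetical helper names.

```python
from statistics import fmean

def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the data."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope

def sse(xs, ys, intercept, slope):
    """Sum of squared differences between the data and a given line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 4.0, 6.0]
intercept, slope = fit_line(xs, ys)
best_sse = sse(xs, ys, intercept, slope)

# Any other line, such as one with a slightly shifted intercept or slope,
# gives a sum of squared residuals at least as large.
shifted_sse = sse(xs, ys, intercept + 0.1, slope)
tilted_sse = sse(xs, ys, intercept, slope - 0.1)
```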

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of N independent scores, then the degrees of freedom is equal to the number of independent scores (N) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to N − 1. Mathematically, degrees of freedom is the number of dimensions of the domain o ...
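The N − 1 example can be checked directly. In the sketch below (toy scores, made up for illustration), the deviations from the sample mean always sum to zero, so only N − 1 of them are free to vary, and dividing the sum of squares by N − 1 reproduces the standard library's sample variance.

```python
from statistics import fmean, variance

scores = [2.0, 4.0, 4.0, 5.0, 10.0]
n = len(scores)
mean = fmean(scores)

# Estimating the mean uses up one degree of freedom: the deviations
# are constrained to sum to zero.
deviations = [s - mean for s in scores]
deviation_sum = sum(deviations)

# Sample variance divides the sum of squares by N - 1, not N.
degrees_of_freedom = n - 1
sample_variance = sum(d ** 2 for d in deviations) / degrees_of_freedom

# statistics.variance uses the same N - 1 divisor.
library_variance = variance(scores)
```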

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, persona ...

Interaction (statistics)

In statistics, an interaction may arise when considering the relationship among three or more variables, and describes a situation in which the effect of one causal variable on an outcome depends on the state of a second causal variable (that is, when effects of the two causes are not additive). Although commonly thought of in terms of causal relationships, the concept of an interaction can also describe non-causal associations (then also called moderation or effect modification). Interactions are often considered in the context of regression analyses or factorial experiments. The presence of interactions can have important implications for the interpretation of statistical models. If two variables of interest interact, the relationship between each of the interacting variables and a third "dependent variable" depends on the value of the other interacting variable. In practice, this makes it more difficult to predict the consequences of changing the value of a variable, particu ...
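Non-additivity can be illustrated with the cell means of a 2x2 design. In this sketch the cell means are invented; the interaction contrast is the difference between the effect of factor A at each level of factor B, and it is zero exactly when the two effects are additive.

```python
# Hypothetical cell means, keyed by (level of A, level of B).
cell_means = {
    (0, 0): 10.0,
    (1, 0): 14.0,
    (0, 1): 12.0,
    (1, 1): 22.0,
}

# Effect of A (moving from level 0 to level 1) at each level of B.
effect_a_at_b0 = cell_means[(1, 0)] - cell_means[(0, 0)]
effect_a_at_b1 = cell_means[(1, 1)] - cell_means[(0, 1)]

# Difference of differences: nonzero means the effect of A depends on B.
interaction_contrast = effect_a_at_b1 - effect_a_at_b0

# Under pure additivity the (1, 1) cell would equal this value instead.
additive_prediction = (
    cell_means[(0, 0)]
    + effect_a_at_b0
    + (cell_means[(0, 1)] - cell_means[(0, 0)])
)
```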

Main Effect

In the design of experiments and analysis of variance, a main effect is the effect of an independent variable on a dependent variable averaged across the levels of any other independent variables. The term is frequently used in the context of factorial designs and regression models to distinguish main effects from interaction effects. Relative to a factorial design, under an analysis of variance, a main effect test evaluates hypotheses such as H0, the null hypothesis. Running a hypothesis test for a main effect checks whether there is evidence of an effect of different treatments. However, a main effect test is nonspecific and will not allow for a localization of specific mean pairwise comparisons (simple effects). A main e ...
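The "averaged across the levels" part of the definition can be shown with a balanced 2x2 design. The cell means below are made up, and the function name `main_effect` is hypothetical; the sketch assumes equal cell sizes, so simple averaging of cell means is appropriate.

```python
from statistics import fmean

# Hypothetical cell means, keyed by (level of A, level of B).
cell_means = {
    (0, 0): 10.0,
    (1, 0): 14.0,
    (0, 1): 12.0,
    (1, 1): 22.0,
}

def main_effect(cells, factor_index):
    """Mean at level 1 minus mean at level 0 of one factor,
    averaging over the levels of the other factor."""
    at_level_1 = fmean([m for key, m in cells.items() if key[factor_index] == 1])
    at_level_0 = fmean([m for key, m in cells.items() if key[factor_index] == 0])
    return at_level_1 - at_level_0

main_effect_a = main_effect(cell_means, 0)
main_effect_b = main_effect(cell_means, 1)
```

Note that the main effect of A is a single averaged number even though the effect of A differs between the two levels of B in this toy table, which is why a main effect alone does not localize specific pairwise differences.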