
Analysis Of Variance

Analysis of variance (ANOVA) is a family of statistical methods used to compare the means of two or more groups by analyzing variance. Specifically, ANOVA compares the amount of variation ''between'' the group means to the amount of variation ''within'' each group. If the between-group variation is substantially larger than the within-group variation, it suggests that the group means are likely different. This comparison is done using an F-test. The underlying principle of ANOVA is based on the law of total variance, which states that the total variance in a dataset can be broken down into components attributable to different sources. In the case of ANOVA, these sources are the variation between groups and the variation within groups. ANOVA was developed by the statistician Ronald Fisher. In its simplest form, it provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''-test ...
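The between-group versus within-group comparison described above can be sketched in a few lines. This is a minimal illustration of a one-way ANOVA F statistic, not a full implementation: the function name `one_way_anova_f` and the toy data are assumptions made for the example, and no p-value is computed.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups.

    Hypothetical helper for illustration only.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)

    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of each observation around its own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up data: three groups of three observations each.
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_anova_f(groups)
print(f_stat, df_b, df_w)
```

A large F value means the group means vary more than the within-group scatter would suggest; turning it into a p-value requires the F distribution with `df_b` and `df_w` degrees of freedom.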

Statistical Methods

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

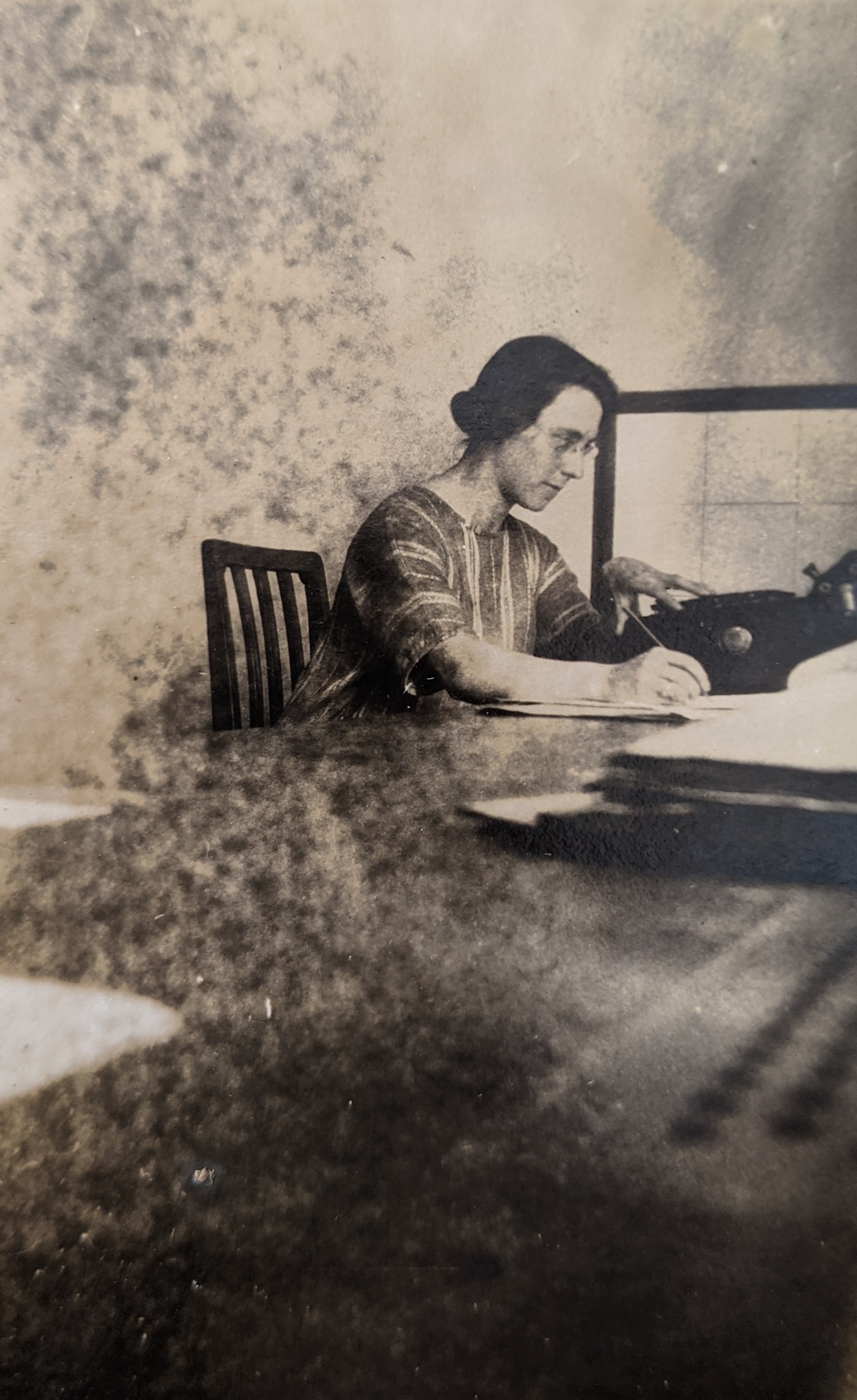

Winifred Mackenzie

Winifred Alice Mackenzie (21 November 1896 – 29 November 1954) was an English statistician, pupil of Arthur Bowley, first winner of the Royal Statistical Society’s Frances Wood Memorial Prize and Ronald Fisher’s first assistant in the Statistics Department at Rothamsted Experimental Station. She was subsequently a missionary in the Belgian Congo.

Early life

Winifred was born in London, the fifth of six girls. Her father, Samuel Henry Mackenzie, had a cutler's and jewellery shop and, before marrying, her mother, Louisa (née Chatterton), had worked in the Civil Service Telegraph Department. Winifred attended the highly regarded North London Collegiate School, founded by Frances Buss, whose then headmistress was the mathematician Sophie Bryant. The statistician Clara Collet was an earlier student. In 1916 Winifred went to the London School of Economics (LSE) and studied statistics under the economic statistician Arthur Bowley. In 1921 she published an essay on a favour ...

Residual (statistics)

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value" (not necessarily observable). The error of an observation is the deviation of the observed value from the true value of a quantity of interest (for example, a population mean). The residual is the difference between the observed value and the ''estimated'' value of the quantity of interest (for example, a sample mean). The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances.

Introduction

Suppose there is a series of observations from a univariate distribution and we want to estimate the mean of that distribution (the so-called location model). In this case, the errors are ...
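The error/residual distinction can be made concrete with a small sketch of the location model. The population mean `true_mean` and the sample values are made up for illustration; in practice the true mean is usually unknown, which is why errors are generally not observable while residuals are.

```python
from statistics import fmean

# Assumption for illustration: the population mean is known here.
true_mean = 10.0
sample = [9.0, 11.5, 10.5, 12.0]

sample_mean = fmean(sample)  # estimate of the population mean

# Errors: deviations from the (normally unobservable) true mean.
errors = [x - true_mean for x in sample]
# Residuals: deviations from the estimated mean.
residuals = [x - sample_mean for x in sample]

print(errors)
print(residuals)
print(sum(residuals))
```

Residuals computed from the sample mean always sum to zero (up to rounding), whereas the errors need not.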

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
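As a small sketch, the normal density with mean \mu and standard deviation \sigma can be evaluated directly from its formula. The function name `normal_pdf` and its default arguments are choices made for this example only.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and std. deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


peak = normal_pdf(0.0)            # density at the mean of the standard normal
left = normal_pdf(-1.3, mu=2.0, sigma=0.5)
right = normal_pdf(5.3, mu=2.0, sigma=0.5)  # mirror image of `left` about mu
print(peak, left, right)
```

The density peaks at the mean and is symmetric about it, which is why the mean also serves as the median and mode.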

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
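The gap between pairwise and mutual independence can be checked on a standard textbook example, sketched here under the assumption of two fair coin flips. The events `A`, `B`, `C` and the helper `prob` are names introduced for the illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin flips, all four outcomes equally likely.
outcomes = list(product("HT", repeat=2))


def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


A = lambda o: o[0] == "H"   # first flip is heads
B = lambda o: o[1] == "H"   # second flip is heads
C = lambda o: o[0] != o[1]  # the two flips differ

# Pairwise: P(E and F) == P(E) * P(F) for every pair of events.
pairwise = all(
    prob(lambda o, e=e, f=f: e(o) and f(o)) == prob(e) * prob(f)
    for e, f in [(A, B), (A, C), (B, C)]
)

# Mutual independence additionally needs the triple product rule to hold.
p_all = prob(lambda o: A(o) and B(o) and C(o))
mutual = pairwise and p_all == prob(A) * prob(B) * prob(C)

print(pairwise, mutual, p_all)
```

Here every pair of events satisfies the product rule, yet all three cannot occur together, so the collection is pairwise but not mutually independent.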

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names.

Introduction

A prob ...

Linear Model

In statistics, the term linear model refers to any model which assumes linearity in the system. The most common occurrence is in connection with regression models and the term is often taken as synonymous with linear regression model. However, the term is also used in time series analysis with a different meaning. In each case, the designation "linear" is used to identify a subclass of models for which substantial reduction in the complexity of the related statistical theory is possible.

Linear regression models

For the regression case, the statistical model is as follows. Given a (random) sample (Y_i, X_{i1}, \ldots, X_{ip}), \, i = 1, \ldots, n, the relation between the observations Y_i and the independent variables X_{ij} is formulated as

Y_i = \beta_0 + \beta_1 \phi_1(X_{i1}) + \cdots + \beta_p \phi_p(X_{ip}) + \varepsilon_i, \qquad i = 1, \ldots, n

where \phi_1, \ldots, \phi_p may be nonlinear functions. In the above, the quantities \varepsilon_i are random variables representing err ...
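A model of this form stays linear in the coefficients even when the transformation \phi is nonlinear. The sketch below illustrates that with a single predictor and \phi(x) = x^2. Ordinary least squares is used here as one common estimation choice (an assumption, since the text above does not name an estimator), and the data are noise-free and made up so the recovered coefficients are easy to check.

```python
from statistics import fmean


def fit_simple_linear(z, y):
    """Least-squares fit of y = beta0 + beta1 * z for one transformed predictor z."""
    z_bar, y_bar = fmean(z), fmean(y)
    s_zz = sum((zi - z_bar) ** 2 for zi in z)
    s_zy = sum((zi - z_bar) * (yi - y_bar) for zi, yi in zip(z, y))
    beta1 = s_zy / s_zz
    beta0 = y_bar - beta1 * z_bar
    return beta0, beta1


def phi(x):
    """Nonlinear transformation of the predictor (illustrative choice)."""
    return x ** 2


x = [0.0, 1.0, 2.0, 3.0]
# Hypothetical noise-free responses generated with beta0 = 1 and beta1 = 2.
y = [1.0 + 2.0 * phi(xi) for xi in x]

beta0, beta1 = fit_simple_linear([phi(xi) for xi in x], y)
print(beta0, beta1)
```

Because the fit is performed on the transformed values \phi(x), the relationship between x and y is curved while the estimation problem remains a linear one.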

Random Variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. The term 'random variable' in its mathematical definition refers to neither randomness nor variability but instead is a mathematical function in which
* the domain is the set of possible outcomes in a sample space (e.g. the set \{H, T\} which are the possible upper sides of a flipped coin, heads H or tails T, as the result from tossing a coin); and
* the range is a measurable space (e.g. corresponding to the domain above, the range might be the set \{-1, 1\} if, say, heads H mapped to -1 and T mapped to 1). Typically, the range of a random variable is a subset of the real numbers.

Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die ...
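The coin example above can be written out as a short sketch: the random variable is an ordinary function from outcomes to numbers, and its distribution follows from the probabilities on the sample space. The fair-coin probabilities and the name `X` are assumptions made for the illustration.

```python
from fractions import Fraction

sample_space = ["H", "T"]

# Assumption: a fair coin, so each outcome has probability 1/2.
outcome_prob = {o: Fraction(1, 2) for o in sample_space}


def X(outcome):
    """Random variable: maps heads to -1 and tails to 1."""
    return {"H": -1, "T": 1}[outcome]


# Push the outcome probabilities through X to get the distribution of X.
distribution = {}
for o in sample_space:
    distribution[X(o)] = distribution.get(X(o), Fraction(0)) + outcome_prob[o]

expected_value = sum(value * p for value, p in distribution.items())
print(distribution, expected_value)
```

Nothing about `X` itself is random; the randomness lives entirely in which outcome of the sample space occurs.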

Fixed Effects Vs Random Effects

Fixed may refer to:
* ''Fixed'' (EP), EP by Nine Inch Nails
* ''Fixed'' (film), an upcoming animated film directed by Genndy Tartakovsky
* Fixed (typeface), a collection of monospace bitmap fonts that is distributed with the X Window System
* Fixed, subjected to neutering
* Fixed point (mathematics), a point that is mapped to itself by the function
* Fixed line telephone, landline

See also
* Fix (disambiguation)
* Fixer (disambiguation)
* Fixing (disambiguation). Fixing may refer to: the present participle of the verb "to fix", an action meaning maintenance, repair, and operations; "fixing someone up" in the context of arranging or finding a social date for someone; "Fixing", craving an addictive drug, ...
* Fixture (disambiguation) ...

Response Variable

A variable is considered dependent if it depends on (or is hypothesized to depend on) an independent variable. Dependent variables are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, on the other hand, are not seen as depending on any other variable in the scope of the experiment in question. Rather, they are controlled by the experimenter.

In pure mathematics

In mathematics, a function is a rule for taking an input (in the simplest case, a number or set of numbers) [Carlson, Robert. ''A Concrete Introduction to Real Analysis''. CRC Press, 2006, p. 183] and providing an output (which may also be a number). A symbol that stands for an arbitrary input is called an independent variable, while a symbol that stands for an arbitrary output is called a dependent variable. The most common symbol for the input is x, and the most common symbol for the output is y; the function ...

ANOVA Very Good Fit

Analysis of variance (ANOVA) is a family of statistical methods used to compare the means of two or more groups by analyzing variance. Specifically, ANOVA compares the amount of variation ''between'' the group means to the amount of variation ''within'' each group. If the between-group variation is substantially larger than the within-group variation, it suggests that the group means are likely different. This comparison is done using an F-test. The underlying principle of ANOVA is based on the law of total variance, which states that the total variance in a dataset can be broken down into components attributable to different sources. In the case of ANOVA, these sources are the variation between groups and the variation within groups. ANOVA was developed by the statistician Ronald Fisher. In its simplest form, it provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''-test beyond two means.

History

While the analysis ...