
Adaptive Resonance Theory

Adaptive resonance theory (ART) is a theory developed by Stephen Grossberg and Gail Carpenter on aspects of how the brain processes information. It describes a number of neural network models which use supervised and unsupervised learning methods, and address problems such as pattern recognition and prediction. The primary intuition behind the ART model is that object identification and recognition generally occur as a result of the interaction of 'top-down' observer expectations with 'bottom-up' sensory information. The model postulates that 'top-down' expectations take the form of a memory template or prototype that is then compared with the actual features of an object as detected by the senses. This comparison gives rise to a measure of category belongingness. As long as this difference between sensation and expectation does not exceed a set threshold called the 'vigilance parameter', the sensed object will be considered a member of the expected class. The system thus offer ...
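The template comparison described above can be sketched in a few lines. This is only an illustration, not the ART model itself: the excerpt does not give a formula, so the match score used here (the fraction of active input features also present in the template, as is common for binary inputs) and the names `match_score` and `is_member` are assumptions. In this form a higher score means a smaller difference, so the test is "score at least the vigilance" rather than "difference at most a threshold".

```python
import numpy as np

def match_score(features, template):
    """Fraction of active input features that the template also contains.

    Assumed match measure for binary inputs; the excerpt names no formula.
    """
    features = np.asarray(features, dtype=bool)
    template = np.asarray(template, dtype=bool)
    active = int(features.sum())
    if active == 0:
        return 0.0  # no sensed features, nothing to match
    overlap = int(np.logical_and(features, template).sum())
    return overlap / active

def is_member(features, template, vigilance):
    """True when the sensed features are close enough to the expected template."""
    return match_score(features, template) >= vigilance

sensed = [1, 1, 0, 1]    # bottom-up features
expected = [1, 1, 0, 0]  # top-down template
print(match_score(sensed, expected))
print(is_member(sensed, expected, vigilance=0.6))
print(is_member(sensed, expected, vigilance=0.8))
```

Raising the vigilance makes the membership test stricter, so the same input can be accepted at one setting and rejected at another.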

Stephen Grossberg

Stephen Grossberg (born December 31, 1939) is a cognitive scientist, theoretical and computational psychologist, neuroscientist, mathematician, biomedical engineer, and neuromorphic technologist. He is the Wang Professor of Cognitive and Neural Systems and a Professor Emeritus of Mathematics & Statistics, Psychological & Brain Sciences, and Biomedical Engineering at Boston University. Grossberg first lived in Woodside, Queens, in New York City. His father died from ...

Cognitive Science (journal)

''Cognitive Science'' is a multidisciplinary peer-reviewed academic journal published by John Wiley & Sons on behalf of the Cognitive Science Society, a professional society for the interdisciplinary field of cognitive science that brings together researchers from many fields who hold the common goal of understanding the nature of the human mind. The journal was established in 1977 and covers all aspects of cognitive science.

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter ''θ''₀) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to ''θ''₀. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to ''θ''₀ converges to one. In practice one constructs an estimator as a function of an available sample of size ''n'', and then imagines being able to keep collecting data and expanding the sample ''ad infinitum''. In this way one would obtain a sequence of estimates indexed by ''n'', and consistency is a property of what occurs as the sample size “grows to infinity”. If the sequence of estimates can be mathematically shown to converge in probability to the true value ...
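A small simulation can make convergence in probability concrete. The sketch below is an illustration under stated assumptions, not part of the definition: it uses the sample mean of normally distributed data as the estimator, and the true value `theta0`, the tolerance `eps`, the number of repetitions, and the function name `fraction_within` are all hypothetical choices.

```python
import numpy as np

def fraction_within(n, theta0=2.0, eps=0.1, trials=2000, seed=0):
    """Estimate P(|estimate - theta0| < eps) for the sample mean at sample size n.

    Draws `trials` independent samples of size n from Normal(theta0, 1)
    and reports how often the sample mean lands within eps of theta0.
    """
    rng = np.random.default_rng(seed)
    samples = rng.normal(loc=theta0, scale=1.0, size=(trials, n))
    estimates = samples.mean(axis=1)
    return float(np.mean(np.abs(estimates - theta0) < eps))

# The fraction should climb toward 1 as n grows.
for n in (10, 100, 1000):
    print(n, fraction_within(n))
```

The printed fractions approximate the probability that the estimate is within `eps` of the true value; for a consistent estimator that probability approaches one as ''n'' increases.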

L2 Norm

In mathematics, a norm is a function from a real or complex vector space to the non-negative real numbers that behaves in certain ways like the distance from the origin: it commutes with scaling, obeys a form of the triangle inequality, and is zero only at the origin. In particular, the Euclidean distance of a vector from the origin is a norm, called the Euclidean norm, or 2-norm, which may also be defined as the square root of the inner product of a vector with itself. A seminorm satisfies the first two properties of a norm, but may be zero for vectors other than the origin. A vector space with a specified norm is called a normed vector space. In a similar manner, a vector space with a seminorm is called a ''seminormed vector space''. The term pseudonorm has been used for several related meanings. It may be a synonym of "seminorm". A pseudonorm may satisfy the same axioms as a norm, with the equality replaced by an inequality "≤" in the homogeneity axiom. It can als ...
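The three properties listed above can be checked numerically for the Euclidean norm. This is a minimal sketch restricted to real vectors (a complex vector would need the conjugate in the inner product); the function name `euclidean_norm` and the test vectors are illustrative choices.

```python
import numpy as np

def euclidean_norm(v):
    """Square root of the inner product of a real vector with itself."""
    v = np.asarray(v, dtype=float)
    return float(np.sqrt(np.dot(v, v)))

u = np.array([3.0, 4.0])
w = np.array([-1.0, 2.0])

# Commutes with scaling: ||a * u|| equals |a| * ||u||
print(np.isclose(euclidean_norm(-2.0 * u), 2.0 * euclidean_norm(u)))

# Triangle inequality: ||u + w|| is at most ||u|| + ||w||
print(euclidean_norm(u + w) <= euclidean_norm(u) + euclidean_norm(w))

# Zero only at the origin
print(euclidean_norm(np.zeros(2)) == 0.0, euclidean_norm(u) > 0.0)
```

A numerical check on a few vectors is not a proof, but it shows what each axiom asserts.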

Neural Gas

Neural gas is an artificial neural network, inspired by the self-organizing map and introduced in 1991 by Thomas Martinetz and Klaus Schulten. The neural gas is a simple algorithm for finding optimal data representations based on feature vectors. The algorithm was named "neural gas" because of the dynamics of the feature vectors during the adaptation process, which distribute themselves like a gas within the data space. It is applied where data compression or vector quantization is an issue, for example speech recognition, image processing or pattern recognition. As a robustly converging alternative to k-means clustering, it is also used for cluster analysis. The algorithm works as follows. Given a probability distribution P(x) of data vectors x and a finite number of feature vectors w_i, i = 1, ..., N, a data vector x randomly chosen from P(x) is presented at each time step t. Subsequently, the distance order of the feature vectors to the given data vector x is determined. Let i_0 ...
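The ranking step described above, ordering the feature vectors by their distance to the presented data vector, might look like the sketch below. It covers only that step, since the excerpt breaks off before the adaptation rule. The use of Euclidean distance and the function name `distance_ranks` are assumptions.

```python
import numpy as np

def distance_ranks(x, W):
    """Order the feature vectors (rows of W) by distance to the data vector x.

    Returns (order, ranks): order[k] is the index of the k-th closest
    feature vector, and ranks[i] is the position of feature vector i
    in that ordering (0 for the closest).
    """
    x = np.asarray(x, dtype=float)
    W = np.asarray(W, dtype=float)
    dists = np.linalg.norm(W - x, axis=1)  # Euclidean distance (assumed)
    order = np.argsort(dists)
    ranks = np.empty_like(order)
    ranks[order] = np.arange(len(order))
    return order, ranks

W = [[5.0, 5.0], [1.0, 1.0], [0.0, 0.0]]  # three feature vectors w_i
x = [0.9, 0.9]                             # presented data vector
order, ranks = distance_ranks(x, W)
print(order.tolist())  # indices from closest to farthest
print(ranks.tolist())  # rank of each feature vector
```

Working with ranks rather than raw distances is what distinguishes this step from a plain nearest-neighbour lookup: every feature vector gets a position in the ordering, not just the winner.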

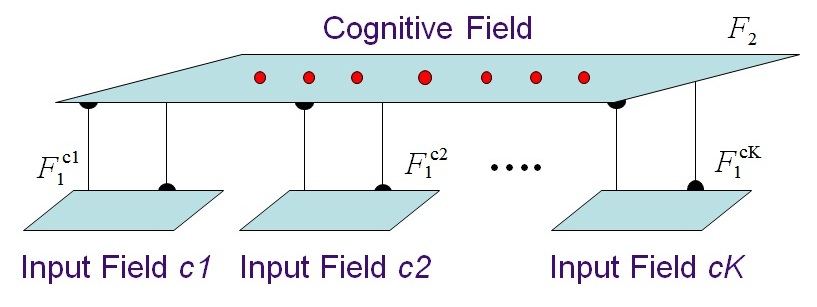

Fusion Adaptive Resonance Theory

Fusion adaptive resonance theory (fusion ART) is a generalization of self-organizing neural networks known as the original Adaptive Resonance Theory models [Carpenter, G.A. & Grossberg, S. (2003). Adaptive Resonance Theory. In Michael A. Arbib (Ed.), The Handbook of Brain Theory and Neural Networks, Second Edition (pp. 87-90). Cambridge, MA: MIT Press] for learning recognition categories across multiple pattern channels. There is a separate stream of work on fusion ARTMAP [Y.R. Asfour, G.A. Carpenter, S. Grossberg, and G.W. Lesher (1993). Fusion ARTMAP: an adaptive fuzzy network for multi-channel classification. In Proceedings of the Third International Conference on Industrial Fuzzy Control and Intelligent Systems (IFIS); R.F. Harrison and J.M. Borges (1995). Fusion ARTMAP: Clarification, Implementation and Developments. Research Report No. 589, Department of Automatic Control and Systems Engineering, The University of Sheffield] that extends fuzzy ARTMAP consisting of two fuzzy ART modul ...

Mixture Models

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information. Mixture models should not be confused with models for compositional data, i.e., data whose components are constrained to sum to a constant value (1, 100%, etc.). However, compositional models can be thought of as mixture models, ...
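To illustrate the idea of a pooled population without sub-population labels, the sketch below draws observations from a two-component Gaussian mixture, discards the component identities, and evaluates the mixture density as a weighted sum of component densities. The Gaussian components, the parameter values, and the function names `sample_mixture` and `mixture_pdf` are assumptions made for the example.

```python
import numpy as np

def sample_mixture(n, weights, means, stds, seed=0):
    """Draw n pooled observations; the component labels are not returned."""
    rng = np.random.default_rng(seed)
    means = np.asarray(means, dtype=float)
    stds = np.asarray(stds, dtype=float)
    labels = rng.choice(len(weights), size=n, p=weights)  # hidden sub-population
    return rng.normal(means[labels], stds[labels])

def mixture_pdf(x, weights, means, stds):
    """Mixture density: weighted sum of Gaussian component densities."""
    x = np.asarray(x, dtype=float)[..., None]
    w = np.asarray(weights, dtype=float)
    m = np.asarray(means, dtype=float)
    s = np.asarray(stds, dtype=float)
    comp = np.exp(-0.5 * ((x - m) / s) ** 2) / (s * np.sqrt(2.0 * np.pi))
    return np.sum(comp * w, axis=-1)

weights, means, stds = [0.3, 0.7], [-2.0, 3.0], [1.0, 1.0]
obs = sample_mixture(20000, weights, means, stds)
print(obs.mean())  # should be near 0.3 * (-2) + 0.7 * 3 = 1.5
print(mixture_pdf([-2.0, 0.5, 3.0], weights, means, stds))
```

Inference with a mixture model runs in the opposite direction: given only `obs`, one tries to recover the weights, means, and standard deviations of the sub-populations.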

Statistical Classification

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a ...

IEEE Transactions On Neural Networks

''IEEE Transactions on Neural Networks and Learning Systems'' is a monthly peer-reviewed scientific journal published by the IEEE Computational Intelligence Society. It covers the theory, design, and applications of neural networks and related learning systems. According to the ''Journal Citation Reports'', the journal had a 2021 impact factor of 14.255. The journal was established in 1990 by the IEEE Neural Networks Council.

Editors-in-chief
* Yongduan Song (Chongqing University), 2022–present
* Haibo He (University of Rhode Island), 2016–2021
* Derong Liu (University of Illinois), 2010–2015
* Marios M. Polycarpou (University of Cyprus), 2004–2009
* Jacek M. Zurada (University of Louisville), 1998–2003
* Robert J. Marks II (Baylor University), 1992–1997
* Michael W. Roth (Johns Hopkins University) ...

L1 Norm

In mathematics, the L^p spaces are function spaces defined using a natural generalization of the p-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. L^p spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are used also in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines. In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer to p ...

Synapse

In the nervous system, a synapse is a structure that permits a neuron (or nerve cell) to pass an electrical or chemical signal to another neuron or to the target effector cell. Synapses are essential to the transmission of nervous impulses from one neuron to another. Neurons are specialized to pass signals to individual target cells, and synapses are the means by which they do so. At a synapse, the plasma membrane of the signal-passing neuron (the ''presynaptic'' neuron) comes into close apposition with the membrane of the target (''postsynaptic'') cell. Both the presynaptic and postsynaptic sites contain extensive arrays of molecular machinery that link the two membranes together and carry out the signaling process. In many synapses, the presynaptic part is located on an axon and the postsynaptic part is located on a dendrite or soma. Astrocytes also exchange information with the synaptic neurons, responding to synaptic activity and, in turn, regulating neurotransmission. ...